I was reading the Data Loading section of the TensorFlow performance guide. For prefetch, it says:

> The tf.data API provides a software pipelining mechanism through the tf.data.Dataset.prefetch transformation, which can be used to decouple the time when data is produced from the time when data is consumed. In particular, the transformation uses a background thread and an internal buffer to prefetch elements from the input dataset ahead of the time they are requested. The number of elements to prefetch should be equal to (or possibly greater than) the number of batches consumed by a single training step. You could either manually tune this value, or set it to tf.data.experimental.AUTOTUNE which will prompt the tf.data runtime to tune the value dynamically at runtime.

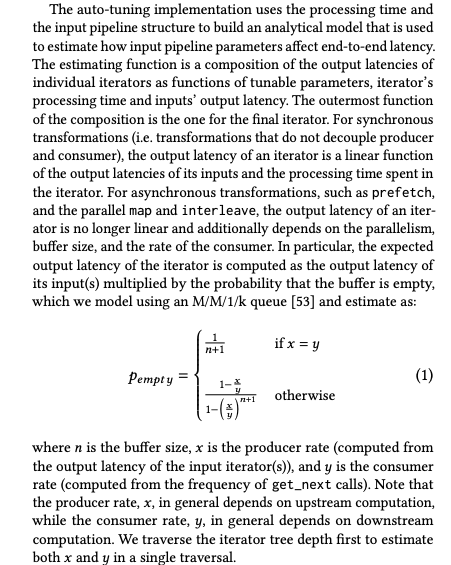
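
To make sure I understand the mechanism the guide describes (a background thread plus a bounded internal buffer), here is a minimal pure-Python sketch of it. This is only an illustration under my own assumptions, not how tf.data is actually implemented: `prefetch`, `slow_batches`, and `train` are hypothetical names, and the fixed `buffer_size` stands in for the value that one would tune manually or leave to AUTOTUNE.

```python
import queue
import threading
import time

_SENTINEL = object()  # marks the end of the upstream iterator


def prefetch(source, buffer_size=1):
    """Yield items from `source`, producing up to `buffer_size` items ahead.

    Hypothetical helper for illustration; not part of tf.data.
    """
    buffer = queue.Queue(maxsize=buffer_size)

    def producer():
        try:
            for item in source:
                buffer.put(item)  # blocks while the buffer is full
        finally:
            buffer.put(_SENTINEL)  # always signal the end to the consumer

    # Background thread: produces data while the consumer is busy.
    threading.Thread(target=producer, daemon=True).start()

    while True:
        item = buffer.get()  # blocks while the buffer is empty
        if item is _SENTINEL:
            return
        yield item


def slow_batches(n, delay=0.01):
    """Hypothetical input source: each batch takes `delay` seconds to produce."""
    for i in range(n):
        time.sleep(delay)
        yield i


def train(batches, step_time=0.01):
    """Hypothetical training loop: each step takes `step_time` seconds."""
    seen = []
    for batch in batches:
        time.sleep(step_time)  # stand-in for one training step
        seen.append(batch)
    return seen


if __name__ == "__main__":
    start = time.perf_counter()
    train(slow_batches(20))
    print("no prefetch:  ", round(time.perf_counter() - start, 2), "s")

    start = time.perf_counter()
    train(prefetch(slow_batches(20), buffer_size=2))
    print("with prefetch:", round(time.perf_counter() - start, 2), "s")
```

With prefetching, producing batch N+1 overlaps with the training step on batch N, so the second run should take roughly the longer of the two per-step costs rather than their sum. As far as I can tell, the buffer size only matters when production or consumption time varies from step to step, which is presumably what the tuning is about.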

What is AUTOTUNE doing internally? Which algorithm or heuristics does it apply?

Additionally, in practice, what kind of manual tuning is done?
