TLDR: Try: file = open(filename, encoding='cp437')

Why? When one uses:

file = open(filename)

text = file.read()

Python assumes the file uses the same code page as the current environment (cp1252 in the case of the opening post) and tries to decode the file's bytes into a Unicode string. If the file contains byte values that are not defined in this code page (like 0x90), we get a UnicodeDecodeError. Sometimes we don't know the file's encoding, sometimes the encoding is not supported by Python (e.g. cp790), and sometimes the file contains mixed encodings.
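To see the failure in isolation, here is a minimal sketch. The file content (`b"abc\x90def"`) and the temporary file are made up for illustration, and cp1252 is passed explicitly so the result does not depend on the machine's default encoding:

```python
import os
import tempfile

# Hypothetical sample data: byte 0x90 has no character assigned in cp1252.
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(b"abc\x90def")
    path = tmp.name

failed = False
bad_byte = None
try:
    with open(path, encoding="cp1252") as f:
        f.read()
except UnicodeDecodeError as exc:
    failed = True
    # The exception records the input bytes and the offending range.
    bad_byte = exc.object[exc.start:exc.end]
    print(f"cannot decode {bad_byte!r} at offset {exc.start}")
finally:
    os.remove(path)
```

The `start` and `object` attributes of the exception are handy for locating the offending byte in a large file.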

If such characters are unneeded, one may decide to replace them with a replacement marker (U+FFFD, usually displayed as a question mark in a diamond), with:

file = open(filename, errors='replace')

Another workaround is to use:

file = open(filename, errors='ignore')

The undecodable bytes are then silently dropped and the remaining characters are left intact, but other errors will be masked too.
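The difference between the two error handlers is easier to see on a bytes object than on a file. This is a small sketch with made-up sample data; `bytes.decode` accepts the same `errors` values as `open`:

```python
# Hypothetical sample data containing one byte (0x90) that cp1252 cannot decode.
raw = b"abc\x90def"

# 'replace' substitutes U+FFFD (the Unicode replacement character) for the bad byte.
replaced = raw.decode("cp1252", errors="replace")

# 'ignore' drops the bad byte without leaving any trace.
ignored = raw.decode("cp1252", errors="ignore")

print(len(replaced), len(ignored))
```

With 'replace' the position of the damage stays visible in the text; with 'ignore' the string simply becomes shorter.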

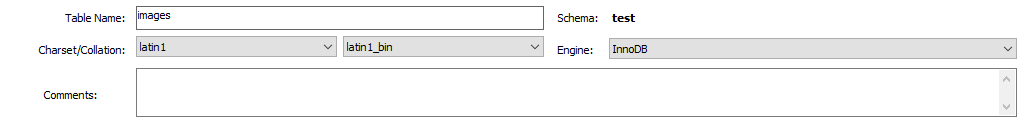

A very good solution is to specify the encoding explicitly: not just any encoding (like cp1252), but one that maps every single-byte value (0..255) to a character (like cp437 or latin1):

file = open(filename, encoding='cp437')

Code page 437 is just an example. It is the original DOS encoding. All 256 byte values are mapped, so there are no errors while reading the file and no errors are masked. The characters are preserved (not quite left intact, but still distinguishable), and one can check their ord() values.
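As a quick check of the "every byte is mapped" claim, the sketch below decodes all 256 byte values with both codecs and encodes them back. The variable names are illustrative only:

```python
# Every possible single-byte value, 0x00 through 0xFF.
all_bytes = bytes(range(256))

# Neither codec raises, because each byte value has a character assigned.
text_437 = all_bytes.decode("cp437")
text_latin1 = all_bytes.decode("latin1")

# Encoding back with the same codec recovers the original bytes.
roundtrip_437 = text_437.encode("cp437") == all_bytes
roundtrip_latin1 = text_latin1.encode("latin1") == all_bytes

# Byte 0x90, which cp1252 rejects, becomes an ordinary character here.
ch_437 = b"\x90".decode("cp437")
ch_latin1 = b"\x90".decode("latin1")
print(hex(ord(ch_437)), hex(ord(ch_latin1)))
```

One design point to keep in mind: with latin1 the ord() of each character equals the original byte value, while with cp437 bytes at 0x80 and above map to other code points, so the byte has to be recovered by encoding back with cp437.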

Please note that this advice is just a quick workaround for a nasty problem. The proper solution is to use binary mode, although it is not as quick.
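For completeness, a rough sketch of the binary-mode approach follows. The sample data and temporary file are assumptions for illustration, and what to do with the non-ASCII bytes afterwards depends on the actual file format:

```python
import os
import tempfile

# Hypothetical sample data with one non-ASCII byte.
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(b"abc\x90def")
    path = tmp.name

try:
    # 'rb' returns raw bytes; no decoding happens, so nothing can fail or be lost.
    with open(path, "rb") as f:
        data = f.read()
finally:
    os.remove(path)

# Locate the bytes outside the ASCII range before deciding how to decode them.
offsets = [i for i, b in enumerate(data) if b >= 0x80]
print(offsets)
```

From here one can decode each region of `data` with whatever encoding actually applies to it, which is the part that makes this approach slower to write.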