First of all: many opponents of changing the default encoding argue that it's dumb because it even changes Ascii comparisons.

I think it's fair to make clear that, in line with the original question, I see nobody advocating anything other than switching from Ascii to UTF-8.

The setdefaultencoding('utf-16') example only ever seems to be brought up by those who oppose changing it ;-)

With m = {'a': 1, 'é': 2} and the file 'out.py':

# coding: utf-8

print u'é'

Then:

+---------------+-----------------------+-----------------+
| DEF.ENC       | OPERATION             | RESULT (printed)|
+---------------+-----------------------+-----------------+
| ANY           | u'abc' == 'abc'       | True            |
| (i.e. Ascii   | str(u'abc')           | 'abc'           |
| or UTF-8)     | '%s %s' % ('a', u'a') | u'a a'          |
|               | python out.py         | é               |
|               | u'a' in m             | True            |
|               | len(u'a'), len('a')   | (1, 1)          |
|               | len(u'é'), len('é')   | (1, 2) [*]      |
|               | u'é' in m             | False (!)       |
+---------------+-----------------------+-----------------+
| UTF-8         | u'abé' == 'abé'       | True [*]        |
|               | str(u'é')             | 'é'             |
|               | '%s %s' % ('é', u'é') | u'é é'          |
|               | python out.py | more  | 'é'             |
+---------------+-----------------------+-----------------+
| Ascii         | u'abé' == 'abé'       | False, Warning  |
|               | str(u'é')             | Encoding Crash  |
|               | '%s %s' % ('é', u'é') | Decoding Crash  |
|               | python out.py | more  | Encoding Crash  |
+---------------+-----------------------+-----------------+

[*]: Result assumes the same é. See below on that.

Looking at those operations, changing the default encoding in your program might not look too bad: it gives you results 'closer' to those you get with Ascii-only data.

Regarding the hashing (in) and len() behaviour, you get the same results as with Ascii (more on those results below). Those operations also show that there are significant differences between unicode and byte strings, which can cause logic errors if you ignore them.

As noted already: it is a process-wide option, so you have just one shot at choosing it. That is why library developers should really never ever do it, but get their internals in order so that they do not need to rely on Python's implicit conversions.

They also need to clearly document what they expect and return, and reject input they did not write the lib for (like the normalize function, see below).

=> Writing programs with that setting on makes it risky for others to use the modules of your program in their code, at least without filtering input.
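To make "filtering input" concrete, here is a minimal sketch of a library function that accepts text only and rejects byte strings instead of relying on implicit conversion. The function name greeting and its error message are made up for illustration; the check is written so that it runs under both Python 2.7 and Python 3.

```python
def greeting(name):
    """Hypothetical library function that accepts text (unicode) only."""
    text_type = type(u'')  # unicode on Python 2, str on Python 3
    if not isinstance(name, text_type):
        # Refuse byte strings instead of letting Python decode them implicitly.
        raise TypeError('expected text, got %s; decode it first'
                        % type(name).__name__)
    return u'Hello, ' + name


ok = greeting(u'Jos\xe9')  # text input is accepted

try:
    greeting(u'Jos\xe9'.encode('utf-8'))  # UTF-8 bytes are rejected
    rejected = False
except TypeError:
    rejected = True
```

Note that this strict variant also rejects plain Ascii byte strings on Python 2; whether that is acceptable depends on the callers you want to support.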

Note: Some opponents claim that def.enc. is even a system-wide option (via sitecustomize.py), but at the latest since software containerisation (Docker) every process can be started in its own tailored environment w/o overhead.

Regarding the hashing and len() behaviour:

It tells you that even with a modified def.enc. you still can't be ignorant of the types of strings you process in your program. u'' and '' are different sequences of bytes in memory, not always but in general.

So when testing, make sure your program also behaves correctly with non-Ascii data.
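A small sketch of why the string type still matters: the same é as text and as UTF-8 bytes differs in length and does not match as a dictionary key. The variable names are illustrative, and the snippet does not touch the default encoding; it should behave the same under Python 2.7 and Python 3.

```python
# -*- coding: utf-8 -*-
text = u'\xe9'               # the single code point U+00E9 (é)
data = text.encode('utf-8')  # the same character as UTF-8 bytes: 0xC3 0xA9

lengths = (len(text), len(data))  # one code point vs. two bytes

m = {data: 2}                # dict keyed by the byte string
text_found = text in m       # the text form does not find the bytes key

# Decoding explicitly at the boundary makes both sides the same type.
same_after_decode = data.decode('utf-8') == text
```

Here lengths is (1, 2) and text_found is False, matching the [*] and (!) rows of the table, while same_after_decode is True.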

Some say the fact that hashes can become unequal when data values change, although due to implicit conversions '==' still returns True, is an argument against changing def.enc.

I personally don't share that view, since the hashing behaviour just remains the same as w/o changing it. I have yet to see a convincing example of undesired behaviour due to that setting in a process I 'own'.

All in all, regarding setdefaultencoding("utf-8"): the answer to whether it's dumb or not should be more balanced.

It depends.

While it does avoid crashes, e.g. at str() operations in a log statement, the price is a higher chance of unexpected results later, since wrong types make it further into code whose correct functioning depends on a certain type.

In no case should it be an alternative to learning the difference between byte strings and unicode strings for your own code.

Lastly, moving the default encoding away from Ascii does not make your life any easier for common text operations like len(), slicing and comparisons, in case you assume that (byte)stringifying everything with UTF-8 on resolves the problems here.

Unfortunately it doesn't - in general.

The '==' and len() results are a far more complex problem than one might think, even with the same type on both sides.

W/o def.enc. changed, "==" always fails for non-Ascii, as shown in the table. With it, it works, but only sometimes:

Unicode standardised the symbols of the world and gave each of them a number (its code space has room for about 1.1 million of them), but there is unfortunately NOT a 1:1 mapping between the glyphs displayed to a user on output devices and the symbols they are generated from.

To motivate you to research this: take two files, j1 and j2, written with the same program using the same encoding and containing user input:

>>> import sys
>>> u1, u2 = open('j1').read(), open('j2').read()
>>> print sys.version.split()[0], u1, u2, u1 == u2

Result: 2.7.9 José José False (!)

Using print with parentheses in Py2 (which prints a tuple and therefore shows the repr of each item) you see the reason: unfortunately there are TWO ways to encode the same character, the accented 'e':

>>> print (sys.version.split()[0], u1, u2, u1 == u2)

('2.7.9', 'Jos\xc3\xa9', 'Jose\xcc\x81', False)

What a stupid codec, you might say, but it's not the fault of the codec. It's a problem in unicode as such.

So even in Py3:

>>> import sys
>>> u1, u2 = open('j1').read(), open('j2').read()
>>> print(sys.version.split()[0], u1, u2, u1 == u2)

Result: 3.4.2 José José False (!)

=> Independent of Py2 and Py3, actually independent of any computing language you use: to write quality software you probably have to "normalise" all user input. The unicode standard does define how to normalise.

In Python 2 and 3 the unicodedata.normalize function is your friend.
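As a minimal sketch of what that looks like, the two spellings of José from above can be written out explicitly and brought to one normalisation form (NFC is picked here as an assumption; NFD would make them equal as well). The variable names are illustrative, and the snippet runs under Python 2.7 and Python 3.

```python
import unicodedata

composed = u'Jos\xe9'        # 'é' as one code point, U+00E9
decomposed = u'Jose\u0301'   # 'e' followed by the combining acute accent U+0301

equal_raw = composed == decomposed  # the two spellings differ as stored

# Normalise both to the same form before comparing, hashing or measuring.
nfc_a = unicodedata.normalize('NFC', composed)
nfc_b = unicodedata.normalize('NFC', decomposed)
equal_nfc = nfc_a == nfc_b

lengths = (len(composed), len(decomposed), len(nfc_b))
```

Here equal_raw is False, equal_nfc is True, and lengths is (4, 5, 4): after normalisation both the '==' and the len() results agree.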

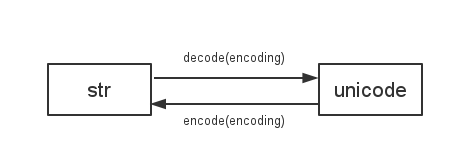

[...] s = u'é' str(s). You should work with one type either string or unicode and handle the encoding explicitly. – Recliner

[...] UTF-8 string is not a Unicode object yet, and regardless of the encoding such string objects won't compare equal if they have different contents. Unless there is a bug in Python hash function, [...] – Rife

[...] sys.setdefaultencoding isn't the solution.) And lastly, if you want to see a bug it causes, look no further: https://mcmap.net/q/37496/-will-a-unicode-string-just-containing-ascii-characters-always-be-equal-to-the-ascii-string – Alessandro

[...] sys.setdefaultencoding('utf-8'). Here's a blog post of someone else that got screwed by this with some more details and further links. – Potion