I have several hundred images (scanned documents), and most of them are skewed. I want to deskew them using Python.

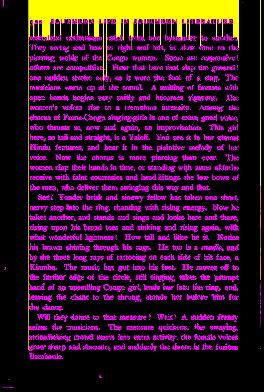

Here is the code I used:

```python
import numpy as np
import cv2
from skimage.transform import radon

filename = 'path_to_filename'

# Load file, converting to grayscale
img = cv2.imread(filename)
I = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
h, w = I.shape

# If the resolution is high, resize the image to reduce processing time.
if w > 640:
    I = cv2.resize(I, (640, int((h / w) * 640)))
I = I - np.mean(I)  # Demean; make the brightness extend above and below zero

# Do the radon transform
sinogram = radon(I)

# Find the RMS value of each row and find "busiest" rotation,
# where the transform is lined up perfectly with the alternating dark
# text and white lines
r = np.array([np.sqrt(np.mean(np.abs(line) ** 2)) for line in sinogram.transpose()])
rotation = np.argmax(r)
print('Rotation: {:.2f} degrees'.format(90 - rotation))

# Rotate and save with the original resolution
M = cv2.getRotationMatrix2D((w / 2, h / 2), 90 - rotation, 1)
dst = cv2.warpAffine(img, M, (w, h))
cv2.imwrite('rotated.jpg', dst)
```

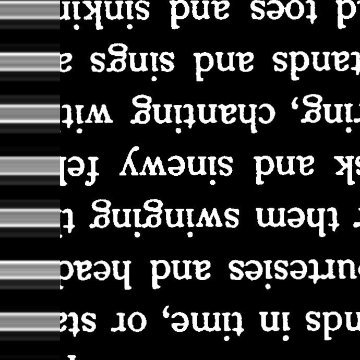

This code works well for most of the documents, except for some angles: 0° and 180°, and likewise 90° and 270°, are often detected as the same angle (i.e. it does not distinguish between 0° and 180°, or between 90° and 270°). As a result, I get a lot of upside-down documents.

For those documents, the resulting image is the same as the input image.
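To illustrate why I think this happens, here is a minimal sketch. It is not the Radon code above: it uses a single horizontal projection (one sum per row) as a simplified stand-in for one Radon angle, and the synthetic `page` array and the helper `row_profile_rms` are made up for the demonstration. Rotating by 180° only reverses the order of the projection values, so an RMS-based score cannot tell the two orientations apart.

```python
import numpy as np

def row_profile_rms(image):
    """RMS of the demeaned horizontal projection (one sum per image row)."""
    profile = image.sum(axis=1).astype(float)
    profile -= profile.mean()
    return float(np.sqrt(np.mean(profile ** 2)))

# Synthetic "page" (hypothetical test data): white background with two
# dark text lines, both placed in the top half so the page is asymmetric.
page = np.full((40, 60), 255, dtype=np.uint8)
page[4:8, 5:55] = 0
page[12:16, 5:40] = 0

# The same page turned upside down (rotated by 180 degrees).
upside_down = np.rot90(page, 2)

rms_upright = row_profile_rms(page)
rms_flipped = row_profile_rms(upside_down)

# The images differ, but the score is identical for both orientations.
same_score = bool(np.isclose(rms_upright, rms_flipped))
print(rms_upright, rms_flipped, same_score)
```

So the score peaks at the right skew angle, but something other than the projection energy is needed to decide between a page and its 180° rotation.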

Is there any way to detect whether an image is upside down using OpenCV and Python?

PS: I tried to check the orientation using EXIF data, but it didn't lead to any solution.

EDIT:

It is possible to detect the orientation using Tesseract (pytesseract for Python), but only when the image contains a lot of text.

For anyone who may need this:

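```python
import cv2
import pytesseract

file_name = 'path_to_filename'

# Print Tesseract's orientation and script detection (OSD) report
print(pytesseract.image_to_osd(cv2.imread(file_name)))
```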

If the document contains enough characters, Tesseract can detect the orientation. However, when the image has only a few lines of text, the orientation angle it suggests is usually wrong, so this is not a complete solution.
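Since the result is unreliable on sparse pages, one option is to act on it only when Tesseract reports a reasonable confidence. The sketch below parses the text report returned by `image_to_osd` and returns a rotation only above a threshold. The helper names `parse_osd` and `rotation_to_apply`, the `min_confidence=2.0` cutoff, and the sample reports are my own assumptions for illustration, not part of pytesseract; the cutoff would need tuning on real scans.

```python
def parse_osd(osd_text):
    """Split an OSD text report ("Key: value" per line) into a dict of strings."""
    fields = {}
    for line in osd_text.splitlines():
        key, sep, value = line.partition(":")
        if sep:
            fields[key.strip()] = value.strip()
    return fields

def rotation_to_apply(osd_text, min_confidence=2.0):
    """Return the reported 'Rotate' angle, or None if it should not be trusted.

    min_confidence is a hypothetical threshold, not a Tesseract default.
    """
    fields = parse_osd(osd_text)
    try:
        rotate = int(fields["Rotate"])
        confidence = float(fields["Orientation confidence"])
    except (KeyError, ValueError):
        return None  # report is missing fields or is malformed
    if confidence < min_confidence:
        return None  # too little text for a trustworthy answer
    return rotate % 360

# Illustrative reports in the layout image_to_osd prints (values are made up).
confident = ("Page number: 0\nOrientation in degrees: 180\nRotate: 180\n"
             "Orientation confidence: 5.21\nScript: Latin\nScript confidence: 3.33\n")
doubtful = ("Page number: 0\nOrientation in degrees: 180\nRotate: 180\n"
            "Orientation confidence: 0.40\nScript: Latin\nScript confidence: 0.90\n")

print(rotation_to_apply(confident))
print(rotation_to_apply(doubtful))
```

In practice the string would come from `pytesseract.image_to_osd(...)`. A result of 180 is unambiguous; for 90 or 270 I would check the rotation direction on a known sample before applying it.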