I am having issues using pandas groupby with categorical data. Theoretically, it should be super efficient: you are grouping and indexing via integers rather than strings. But it insists that, when grouping by multiple categories, every combination of categories must be accounted for.

I sometimes use categories even when there's a low density of common strings, simply because those strings are long and it saves memory / improves performance. Sometimes there are thousands of categories in each column. When grouping by 3 columns, pandas forces us to hold results for 1000^3 groups.

My question: is there a convenient way to use groupby with categories while avoiding this untoward behaviour? I'm not looking for any of these solutions:

- Recreating all the functionality via

numpy. - Continually converting to strings/codes before

groupby, reverting to categories later. - Making a tuple column from group columns, then group by the tuple column.

I'm hoping there's a way to modify just this particular pandas idiosyncrasy. A simple example is below. Instead of 4 categories I want in the output, I end up with 12.

import pandas as pd

group_cols = ['Group1', 'Group2', 'Group3']

df = pd.DataFrame([['A', 'B', 'C', 54.34],

['A', 'B', 'D', 61.34],

['B', 'A', 'C', 514.5],

['B', 'A', 'A', 765.4],

['A', 'B', 'D', 765.4]],

columns=(group_cols+['Value']))

for col in group_cols:

df[col] = df[col].astype('category')

df.groupby(group_cols, as_index=False).sum()

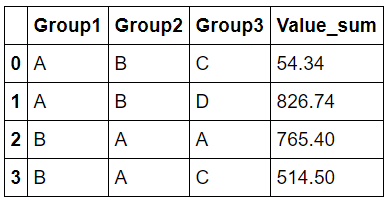

Group1 Group2 Group3 Value

# A A A NaN

# A A C NaN

# A A D NaN

# A B A NaN

# A B C 54.34

# A B D 826.74

# B A A 765.40

# B A C 514.50

# B A D NaN

# B B A NaN

# B B C NaN

# B B D NaN

Bounty update

The issue is poorly addressed by pandas development team (cf github.com/pandas-dev/pandas/issues/17594). Therefore, I am looking for responses that address any of the following:

- Why, with reference to pandas source code, is categorical data treated differently in groupby operations?

- Why would the current implementation be preferred? I appreciate this is subjective, but I am struggling to find any answer to this question. Current behaviour is prohibitive in many situations without cumbersome, potentially expensive, workarounds.

- Is there a clean solution to override pandas treatment of categorical data in groupby operations? Note the 3 no-go routes (dropping down to numpy; conversions to/from codes; creating and grouping by tuple columns). I would prefer a solution that is "pandas-compliant" to minimise / avoid loss of other pandas categorical functionality.

- A response from pandas development team to support and clarify existing treatment. Also, why should considering all category combinations not be configurable as a Boolean parameter?

Bounty update #2

To be clear, I'm not expecting answers to all of the above 4 questions. The main question I am asking is whether it's possible, or advisable, to overwrite pandas library methods so that categories are treated in a way that facilitates groupby / set_index operations.

groupbyto specify whether we want to calculate all category combinations. – Posthasteignore_missing=Booleanfor missing category combination in groupby. Someone might add that in future. Lets us try to ask for a feature request in github – Yanez