I was following this tutorial to serve my object detection model with TensorFlow Serving. I am using the TensorFlow Object Detection API to generate the model, and I created a frozen model using this exporter (the generated frozen model works when loaded from a Python script).

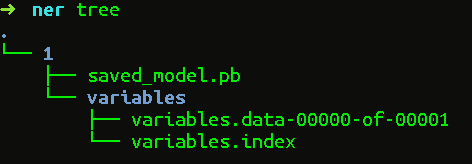

The frozen graph directory has the following contents (the variables directory is empty):

variables/

saved_model.pb

I then try to serve the model using the following command:

tensorflow_model_server --port=9000 --model_name=ssd --model_base_path=/serving/ssd_frozen/

The server always shows:

...

tensorflow_serving/model_servers/server_core.cc:421] (Re-)adding model: ssd

2017-08-07 10:22:43.892834: W tensorflow_serving/sources/storage_path/file_system_storage_path_source.cc:262] No versions of servable ssd found under base path /serving/ssd_frozen/

2017-08-07 10:22:44.892901: W tensorflow_serving/sources/storage_path/file_system_storage_path_source.cc:262] No versions of servable ssd found under base path /serving/ssd_frozen/

...
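One thing that may be relevant to the warning: as far as I understand, TensorFlow Serving looks for numerically named version subdirectories (for example `1/`) under the base path, and my directory has `saved_model.pb` directly in the base path instead. The check below is only a minimal sketch of that idea, not how the server actually scans the path. The helper name `find_version_dirs` is hypothetical, and the demo uses a temporary directory as a stand-in for `/serving/ssd_frozen/`.

```python
import os
import tempfile


def find_version_dirs(base_path):
    """Return the sorted version numbers of numerically named subdirectories.

    Hypothetical helper: it only approximates the layout that the
    "No versions of servable ... found" warning appears to be about.
    """
    versions = []
    for name in os.listdir(base_path):
        full_path = os.path.join(base_path, name)
        # Keep only directories whose names are plain decimal numbers.
        if name.isdecimal() and os.path.isdir(full_path):
            versions.append(int(name))
    return sorted(versions)


if __name__ == "__main__":
    # Stand-in for the real base path, recreated in a temporary directory.
    with tempfile.TemporaryDirectory() as base:
        # Flat layout, like the directory listing in this question.
        open(os.path.join(base, "saved_model.pb"), "wb").close()
        os.mkdir(os.path.join(base, "variables"))
        print(find_version_dirs(base))  # prints []

        # Layout with a numeric version subdirectory.
        os.mkdir(os.path.join(base, "1"))
        print(find_version_dirs(base))  # prints [1]
```

If this returns an empty list for the real base path, the flat layout may be what the warning is complaining about.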