Short answer: there isn't one. Write the number the way you would write it on paper.

Long answer:

Endianness is never exposed directly in the code unless you really try to get it out (such as by using pointer tricks). 0b0111 is 7; the same rules apply as for hex. Writing

int i = 0xAA77;

doesn't mean 0x77AA on some platforms because that would be absurd. Where would the extra 0s that are missing go anyway with 32-bit ints? Would they get padded on the front, then the whole thing flipped to 0x77AA0000, or would they get added after? I have no idea what someone would expect if that were the case.

The point is that C++ doesn't make any assumptions about the endianness of the machine*. If you write code using the primitives and literals it provides, the behavior will be the same from machine to machine (unless you start circumventing the type system, which you may need to do).

To address your update: the number will be the way you write it out. The bits will not be reordered or any such thing; the most significant bit is on the left and the least significant bit is on the right.
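You can have the compiler confirm this for you. A minimal sketch, assuming a C++14 compiler (for binary literals); byte_at is a helper name I made up for illustration, not something from the standard library:

```cpp
#include <cstdint>

// The same value spelled three ways. The notation never changes the value.
constexpr std::uint32_t from_binary  = 0b0111;  // binary literal, C++14
constexpr std::uint32_t from_hex     = 0x7;
constexpr std::uint32_t from_decimal = 7;

static_assert(from_binary == from_hex && from_hex == from_decimal,
              "all three spellings denote the value seven");

// Hypothetical helper: byte n of v, counting from the least significant byte.
// Shifts and masks act on the value, not on how it is laid out in memory.
constexpr std::uint32_t byte_at(std::uint32_t v, unsigned n) {
    return (v >> (8 * n)) & 0xFFu;
}

static_assert(byte_at(0xAA77, 0) == 0x77, "lowest byte is the rightmost digits");
static_assert(byte_at(0xAA77, 1) == 0xAA, "next byte is the digits to the left");
static_assert(byte_at(0xAA77, 2) == 0x00, "missing leading digits are zero");
```

These checks pass on little endian and big endian machines alike, because nothing here looks at memory.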

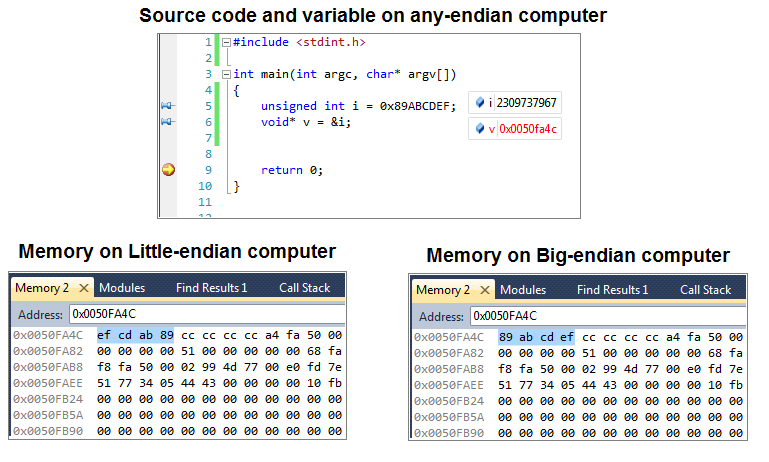

There seems to be a misunderstanding here about what endianness is. Endianness refers to how bytes are ordered in memory and how they must be interpreted. If I gave you the number "4172" and said "if this is four-thousand one-hundred seventy-two, what is the endianness?" you couldn't really give an answer, because the question doesn't make sense. (Some argue that the largest digit on the left means big endian, but without memory addresses the question of endianness is not answerable or relevant.) This is just a number: there are no bytes to interpret and no memory addresses. Assuming a 4-byte integer representation, the bytes that correspond to it are (in hex):

low address ----> high address

Big endian: 00 00 10 4c

Little endian: 4c 10 00 00

So, given either of those and told "this is the computer's internal representation of 4172", you could determine whether it's little or big endian.
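If you do want to see those bytes, you have to go looking for them on purpose. Here is a rough sketch, assuming 8-bit bytes and a platform that provides std::uint32_t; bytes_of and is_little_endian are names I invented for this example:

```cpp
#include <array>
#include <cstdint>
#include <cstring>

// Hypothetical helper: copy the in-memory representation of a 32-bit value
// into an array, lowest address first.
std::array<unsigned char, 4> bytes_of(std::uint32_t value) {
    static_assert(sizeof(std::uint32_t) == 4, "sketch assumes 8-bit bytes");
    std::array<unsigned char, 4> out{};
    std::memcpy(out.data(), &value, sizeof value);
    return out;
}

// Hypothetical helper: true when the least significant byte of 4172 (0x4c)
// sits at the lowest address.
bool is_little_endian() {
    return bytes_of(4172)[0] == 0x4c;
}
```

On a little endian machine bytes_of(4172) holds 4c 10 00 00, and on a big endian one it holds 00 00 10 4c. The value 4172 itself is identical in both cases.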

So now consider your binary literal 0b0111. These 4 bits represent one nybble, and as a 4-byte int they can be stored as either

low ---> high

Big endian: 00 00 00 07

Little endian: 07 00 00 00

But you don't have to care, because this is also handled by the hardware. The language dictates that the compiler reads the literal from left to right, most significant bit to least significant bit.

Endianness is not about individual bits. Given that a byte is 8 bits, if I hand you 0b00000111 and say "is this little or big endian?", again you can't say, because you only have one byte (and no addresses). Endianness doesn't pertain to the order of bits in a byte; it refers to the ordering of entire bytes with respect to address (unless of course you have one-bit bytes).

You don't have to care about what your computer is using internally. 0b0111 just saves you from having to write stuff like

unsigned int mask = 7; // only keep the lowest 3 bits

by letting you write

unsigned int mask = 0b0111;

without needing a comment to explain the significance of the number.
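Here is how such a mask might be used. This is only a sketch: low_three_bits is a made-up name, and a C++14 compiler is assumed for the binary literals.

```cpp
// Hypothetical helper: keep only the lowest 3 bits of value.
constexpr unsigned int low_three_bits(unsigned int value) {
    constexpr unsigned int mask = 0b0111;  // the pattern is visible in the literal
    return value & mask;
}

// 0b101101 is 45; its lowest three bits are 101, which is 5.
static_assert(low_three_bits(0b101101) == 0b101, "mask keeps the rightmost bits");
static_assert(low_three_bits(0b1000) == 0, "bit 3 and above are cleared");
```

The result is the same on every machine, since the mask applies to the value and not to its byte layout.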

* In C++20 you can check the endianness using std::endian (declared in the <bit> header).
