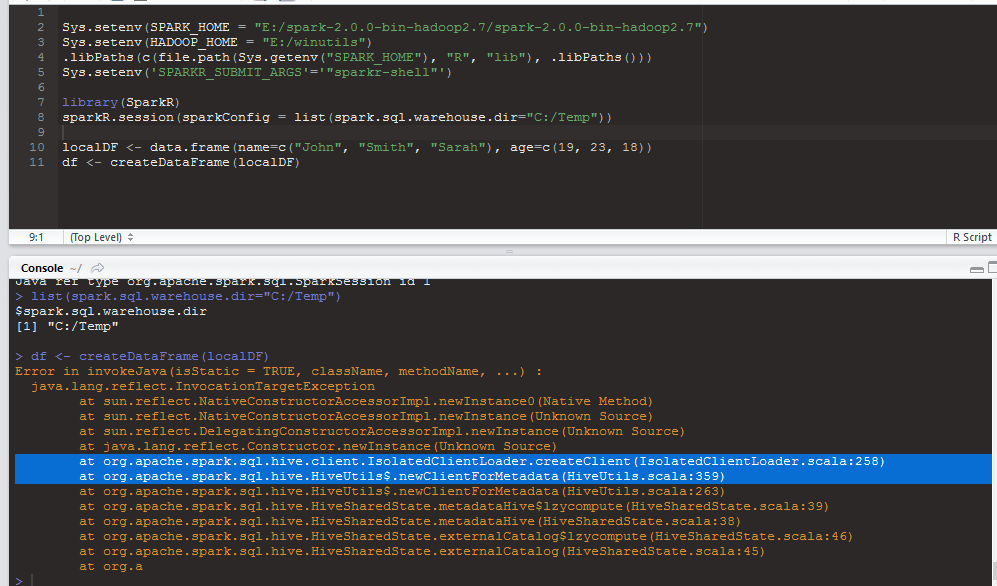

I am using RStudio.

After creating a session, if I try to create a DataFrame from local R data, it gives an error.

    Sys.setenv(SPARK_HOME = "E:/spark-2.0.0-bin-hadoop2.7/spark-2.0.0-bin-hadoop2.7")
    Sys.setenv(HADOOP_HOME = "E:/winutils")
    .libPaths(c(file.path(Sys.getenv("SPARK_HOME"), "R", "lib"), .libPaths()))
    Sys.setenv('SPARKR_SUBMIT_ARGS'='"sparkr-shell"')
    library(SparkR)
    sparkR.session(sparkConfig = list(spark.sql.warehouse.dir="C:/Temp"))
    localDF <- data.frame(name=c("John", "Smith", "Sarah"), age=c(19, 23, 18))
    df <- createDataFrame(localDF)
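
Since the setup above depends on two directories, a quick sanity check of those paths may help rule out a layout problem. This is only a minimal sketch: `check_spark_paths` is a hypothetical helper (not part of SparkR), and it assumes `winutils.exe` is expected under `HADOOP_HOME/bin`, which is the usual Hadoop-on-Windows layout. A passing check does not by itself explain or fix the error.

```r
# Hypothetical helper (not part of SparkR): reports whether the directories
# and files this setup relies on are present.
check_spark_paths <- function(spark_home, hadoop_home) {
  c(
    # SPARK_HOME itself should be an existing directory
    spark_home_dir = dir.exists(spark_home),
    # The SparkR package is loaded from <SPARK_HOME>/R/lib
    sparkr_lib_dir = dir.exists(file.path(spark_home, "R", "lib")),
    # Assumption: winutils.exe sits in <HADOOP_HOME>/bin
    winutils_exe   = file.exists(file.path(hadoop_home, "bin", "winutils.exe"))
  )
}

# Check the values currently set in the environment
print(check_spark_paths(Sys.getenv("SPARK_HOME"), Sys.getenv("HADOOP_HOME")))
```

Any `FALSE` entry points at a path worth rechecking before looking further into the stack trace.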

Error:

    Error in invokeJava(isStatic = TRUE, className, methodName, ...) :
      java.lang.reflect.InvocationTargetException
        at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)
        at sun.reflect.NativeConstructorAccessorImpl.newInstance(Unknown Source)
        at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(Unknown Source)
        at java.lang.reflect.Constructor.newInstance(Unknown Source)
        at org.apache.spark.sql.hive.client.IsolatedClientLoader.createClient(IsolatedClientLoader.scala:258)
        at org.apache.spark.sql.hive.HiveUtils$.newClientForMetadata(HiveUtils.scala:359)
        at org.apache.spark.sql.hive.HiveUtils$.newClientForMetadata(HiveUtils.scala:263)
        at org.apache.spark.sql.hive.HiveSharedState.metadataHive$lzycompute(HiveSharedState.scala:39)
        at org.apache.spark.sql.hive.HiveSharedState.metadataHive(HiveSharedState.scala:38)
        at org.apache.spark.sql.hive.HiveSharedState.externalCatalog$lzycompute(HiveSharedState.scala:46)
        at org.apache.spark.sql.hive.HiveSharedState.externalCatalog(HiveSharedState.scala:45)
        at org.a


TIA.