I can push content to an S3 bucket with my credentials using the s3cmd tool: `s3cmd put contentfile s3://test_bucket/test_file`

I need to download the content from this bucket on other computers that don't have s3cmd installed, but they do have wget.

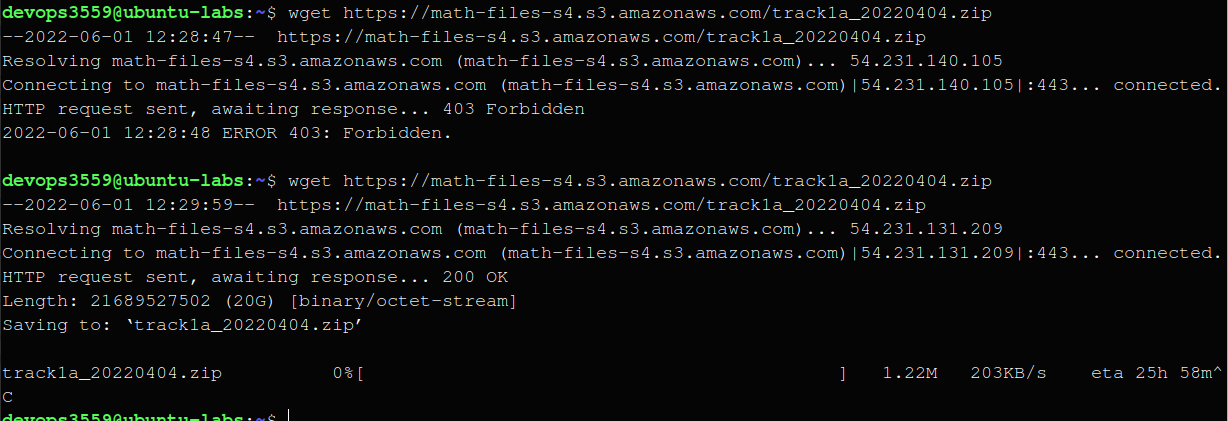

When I try to download content from my bucket with wget, I get this:

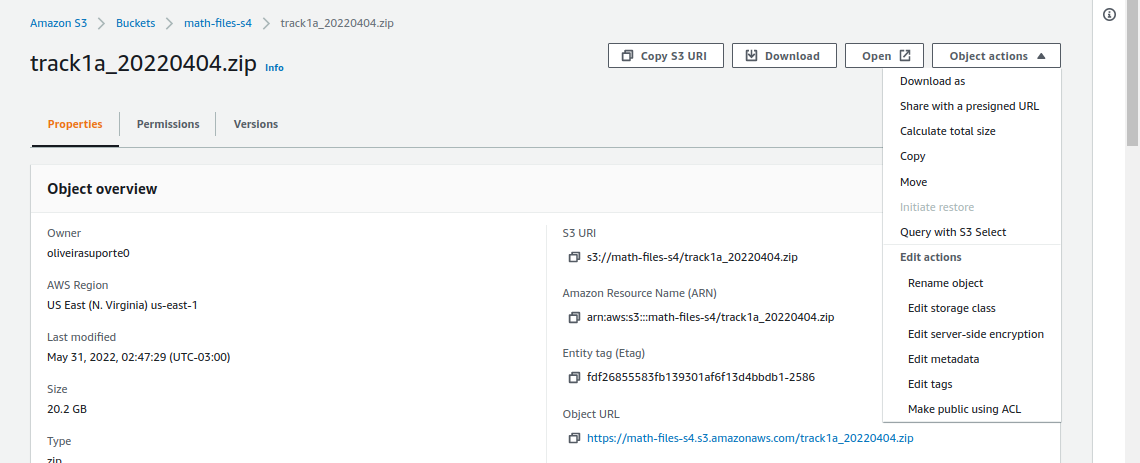

https://s3.amazonaws.com/test_bucket/test_file

--2013-08-14 18:17:40-- https://s3.amazonaws.com/test_bucket/test_file

Resolving s3.amazonaws.com (s3.amazonaws.com)... [ip_here]

Connecting to s3.amazonaws.com (s3.amazonaws.com)|ip_here|:port... connected.

HTTP request sent, awaiting response... 403 Forbidden

2013-08-14 18:17:40 ERROR 403: Forbidden.

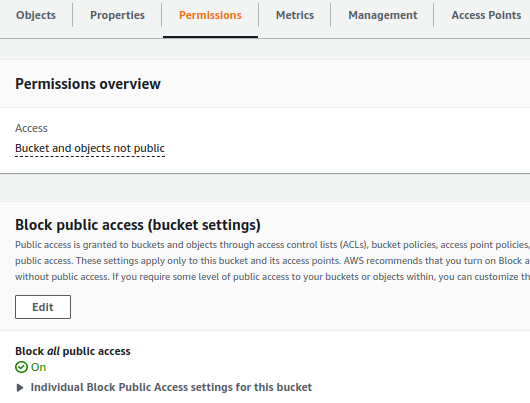

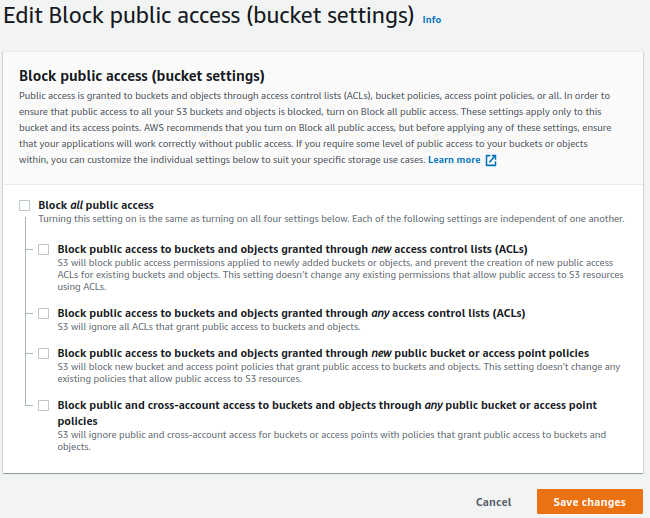

I have manually made this bucket public through the Amazon AWS web console.

How can I download content from an S3 bucket with wget into a local txt file?
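A possible cause of the 403, offered as a guess rather than a diagnosis: making the bucket public in the console does not necessarily make each object in it readable, so the object itself may still need a public-read ACL. A minimal sketch of that route follows. The helper names `s3_object_url` and `fetch_public_object` and the output file `test_file.txt` are made up for illustration; `s3cmd setacl --acl-public` and `wget -O` are the only real commands used.

```shell
#!/bin/sh
# Hypothetical helper: build the path-style HTTPS URL for an object.
# $1 = bucket name, $2 = object key
s3_object_url() {
    printf 'https://s3.amazonaws.com/%s/%s\n' "$1" "$2"
}

# Hypothetical helper: fetch a publicly readable object into a local file.
# $1 = bucket name, $2 = object key, $3 = local output file
fetch_public_object() {
    wget -O "$3" "$(s3_object_url "$1" "$2")"
}

# On the machine that has s3cmd (run once, assumes the object already exists):
#   s3cmd setacl --acl-public s3://test_bucket/test_file
#
# On the machines that only have wget:
#   fetch_public_object test_bucket test_file test_file.txt
```

The functions only wrap the URL construction and the `wget -O` call; nothing here works unless the object really is publicly readable, which also means anyone with the URL can download it.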

`wget "https://s3.amazonaws.com/test_bucket/test_file"`. Our URLs are expiring and have some trickery in there to authenticate. – Streptothricin
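To illustrate the expiring-URL idea from the comment above, here is a rough sketch that assumes an s3cmd version with the `signurl` command. The URL it prints carries a query string with several `&`-separated parameters, which is why the quotes around the URL matter: unquoted, the shell would treat each `&` as a command separator. The helper names `local_name_for` and `fetch_signed_url` are hypothetical, and the one-hour lifetime is an arbitrary choice.

```shell
#!/bin/sh
# Hypothetical helper: derive a clean local file name from a signed URL
# by dropping the query string and everything up to the last slash.
local_name_for() {
    name=${1%%\?*}      # strip "?..." (the signing parameters)
    name=${name##*/}    # keep only the last path component
    printf '%s\n' "$name"
}

# Hypothetical helper: download a signed URL passed as ONE quoted argument.
fetch_signed_url() {
    wget -O "$(local_name_for "$1")" "$1"
}

# On the machine that has s3cmd and the credentials
# (prints a URL valid for 3600 seconds):
#   s3cmd signurl s3://test_bucket/test_file +3600
#
# On a machine that only has wget, paste the printed URL inside quotes:
#   fetch_signed_url "<signed URL printed by s3cmd>"
```

With this approach the bucket and object can stay private; the trade-off is that a fresh URL has to be generated and handed to the other machines each time the old one expires.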