Having to change your source code just to profile it isn't great. First, here's what your script should look like:

    import multiprocessing
    import time

    def worker(num):
        time.sleep(3)
        print('Worker:', num)

    if __name__ == '__main__':
        processes = []
        for i in range(5):
            p = multiprocessing.Process(target=worker, args=(i,))
            p.start()
            processes.append(p)

        for p in processes:
            p.join()

I added join here so your main process will wait for your workers before quitting.
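If you want to convince yourself what `join` does, here is a minimal sketch. The `delay` parameter and the `run_workers` helper are my own additions for illustration and are not part of your script. It times the whole run and collects each process's `exitcode`, which is only set once the process has finished.

```python
import multiprocessing
import time


def worker(num, delay):
    # `delay` is a parameter added for this sketch so the run stays short.
    time.sleep(delay)
    print('Worker:', num)


def run_workers(count, delay):
    """Start `count` workers, wait for all of them, return (elapsed, exit codes)."""
    start = time.perf_counter()
    processes = []
    for i in range(count):
        p = multiprocessing.Process(target=worker, args=(i, delay))
        p.start()
        processes.append(p)

    # join() blocks until the given process has terminated.
    for p in processes:
        p.join()

    elapsed = time.perf_counter() - start
    # After join(), exitcode is 0 for a worker that returned normally.
    return elapsed, [p.exitcode for p in processes]


if __name__ == '__main__':
    elapsed, exit_codes = run_workers(count=5, delay=0.5)
    print(f'all workers finished after {elapsed:.2f}s, exit codes: {exit_codes}')
```

Since the workers run in parallel, the elapsed time should be close to one `delay`, not five of them.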

Instead of cProfile, try viztracer.

Install it with `pip install viztracer`, then use the multiprocess feature:

    viztracer --log_multiprocess your_script.py

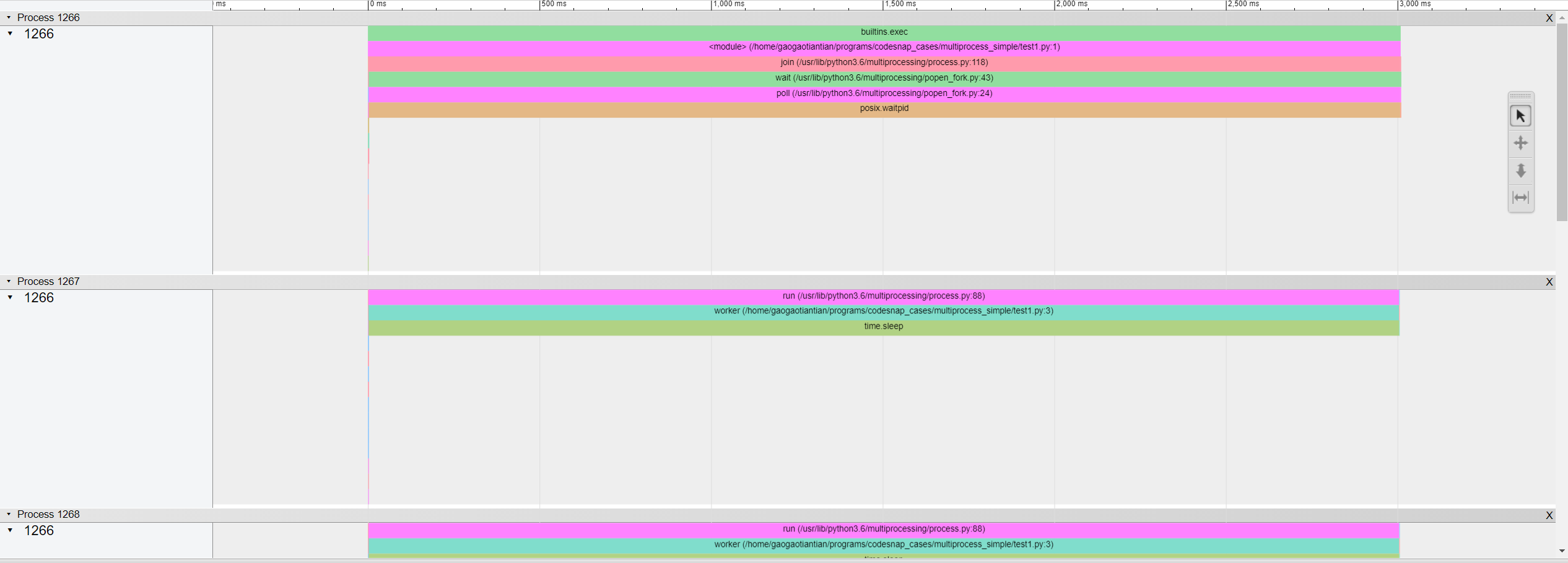

It will generate an HTML file showing every process on a timeline (use the W/A/S/D keys to zoom and navigate).

![result of script]()

Of course, this includes some information you are not interested in (like the internals of the multiprocessing library itself). If you are already satisfied with this, you are good to go. However, if you want a cleaner graph showing only your function `worker()`, try the `log_sparse` feature.

First, decorate the function you want to log with `@log_sparse`:

    from viztracer import log_sparse

    @log_sparse
    def worker(num):
        time.sleep(3)
        print('Worker:', num)

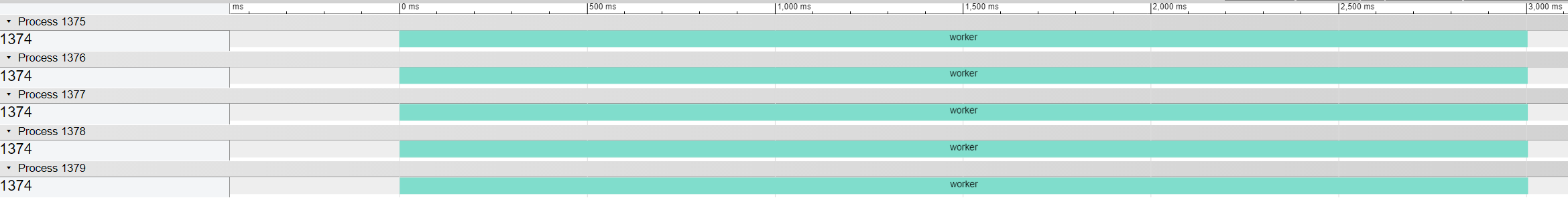

Then run `viztracer --log_multiprocess --log_sparse your_script.py`

![sparse log]()

Only your worker function, taking 3s, will be displayed on the timeline.
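Putting the pieces together, the whole script could look roughly like this. Two things here are my own assumptions and not something viztracer provides: the `try`/`except` fallback, which swaps in a no-op decorator so the script still runs on a machine without viztracer installed, and the `delay` parameter, which just makes the sleep adjustable.

```python
import multiprocessing
import time

try:
    from viztracer import log_sparse
except ImportError:
    # Hypothetical fallback (not part of viztracer): a no-op decorator so the
    # script also runs where viztracer is not installed. It only covers the
    # bare `@log_sparse` form used below.
    def log_sparse(func):
        return func


@log_sparse
def worker(num, delay=3):
    # `delay` is added for this sketch; the default matches the original 3s sleep.
    time.sleep(delay)
    print('Worker:', num)


if __name__ == '__main__':
    processes = []
    for i in range(5):
        p = multiprocessing.Process(target=worker, args=(i,))
        p.start()
        processes.append(p)

    # Wait for every worker so the main process does not quit early.
    for p in processes:
        p.join()
```

Run it with the same `viztracer --log_multiprocess --log_sparse your_script.py` command as above. Running it with plain `python your_script.py` should also work, just without any trace being recorded.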