Yes, there is!

Credit:

It was hard to find the information and get it working, but here is an example that borrows the principles and code found here and here.

Requirements:

Before we start, there are two requirements for this to succeed. First, you need to be able to write your activation as a function on numpy arrays. Second, you have to be able to write the derivative of that function either as a function in Tensorflow (easier) or, in the worst case, as a function on numpy arrays.

Writing Activation function:

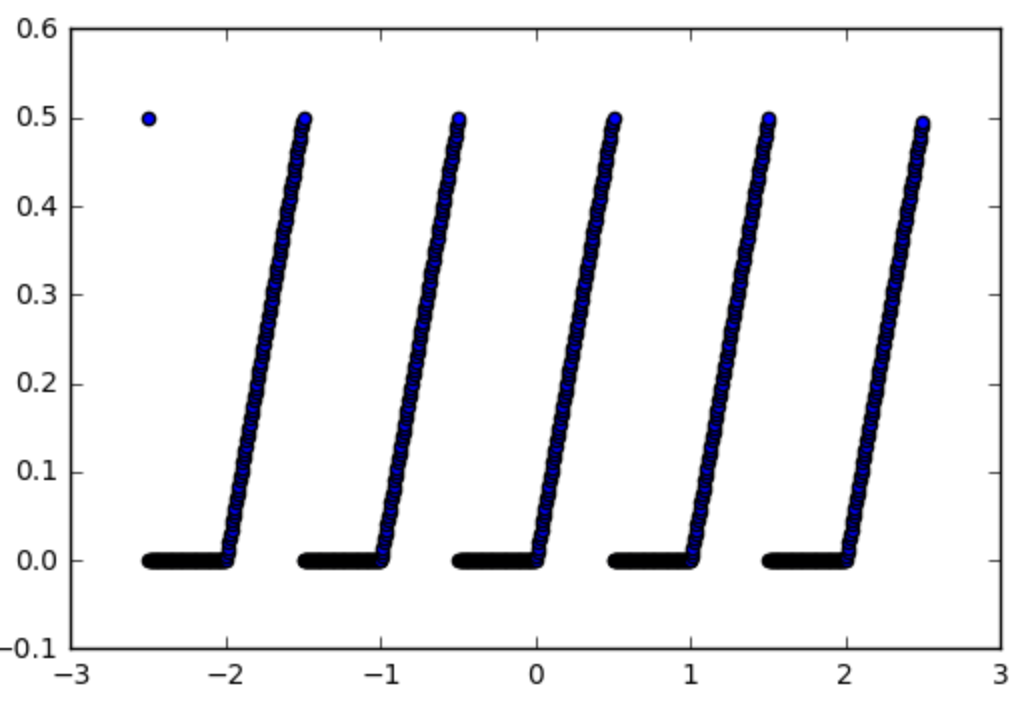

So let's take, for example, this function, which we want to use as an activation function:

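    def spiky(x):
        r = x % 1
        if r <= 0.5:
            return r
        else:
            # Return a float so that np.vectorize infers a float output dtype
            # even when the first element falls in this branch.
            return 0.0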

It looks as follows:

![Spiky Activation]()

The first step is to turn it into a numpy function, which is easy:

    import numpy as np

    np_spiky = np.vectorize(spiky)
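As a side note, `np.vectorize` is essentially a Python-level loop, and unless `otypes` is given it infers the output dtype from the result for the first element. The following is a minimal sketch of a loop-free alternative that applies the same piecewise rule to a whole array at once. The name `np_spiky_where` is hypothetical and is not used in the rest of this answer.

```python
import numpy as np

def np_spiky_where(x):
    # Hypothetical helper: same rule as spiky(), applied to the whole array.
    x = np.asarray(x, dtype=np.float64)
    r = np.mod(x, 1.0)                 # fractional part in [0, 1)
    return np.where(r <= 0.5, r, 0.0)  # keep r on the ramp, 0 elsewhere

values = np_spiky_where([0.7, 0.2, 1.2, 1.7])
```

Because the output is built from float arrays, the result stays floating point regardless of which branch the first element takes.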

Now we should write its derivative.

Gradient of Activation:

In our case it is easy: it is 1 if x mod 1 <= 0.5 and 0 otherwise. So:

    def d_spiky(x):
        r = x % 1
        if r <= 0.5:
            return 1
        else:
            return 0

    np_d_spiky = np.vectorize(d_spiky)
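Before wiring a hand-written derivative into TensorFlow, it can help to compare it against a numerical estimate. The sketch below is one way to do that with a central finite difference. The helper `finite_diff`, the step size `eps` and the sample points are assumptions chosen for illustration, and the points deliberately stay away from the jumps where x mod 1 is 0 or 0.5, where the derivative is not defined.

```python
def spiky(x):
    r = x % 1
    return r if r <= 0.5 else 0.0

def d_spiky(x):
    r = x % 1
    return 1.0 if r <= 0.5 else 0.0

def finite_diff(f, x, eps=1e-6):
    # Central difference approximation of f'(x).
    return (f(x + eps) - f(x - eps)) / (2 * eps)

# Sample points chosen away from the discontinuities of spiky.
points = [0.2, 0.7, 1.2, 1.7, -0.3, -0.8]
max_err = max(abs(finite_diff(spiky, p) - d_spiky(p)) for p in points)
```

A small `max_err` suggests the analytic derivative agrees with the function on those points.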

Now for the hard part of making a TensorFlow function out of it.

Making a numpy function into a tensorflow function:

We will start by making np_d_spiky into a tensorflow function. Tensorflow provides tf.py_func(func, inp, Tout, stateful=stateful, name=name) [doc], which transforms any numpy function into a tensorflow function, so we can use it:

    import tensorflow as tf
    from tensorflow.python.framework import ops

    np_d_spiky_32 = lambda x: np_d_spiky(x).astype(np.float32)

    def tf_d_spiky(x, name=None):
        with tf.name_scope(name, "d_spiky", [x]) as name:
            y = tf.py_func(np_d_spiky_32,
                           [x],
                           [tf.float32],
                           name=name,
                           stateful=False)
            return y[0]

tf.py_func acts on lists of tensors (and returns a list of tensors), which is why we have [x] (and return y[0]). The stateful option tells tensorflow whether the function always gives the same output for the same input (stateful = False), in which case tensorflow can simplify the graph; this is our case and will probably be the case in most situations. One thing to be careful of at this point is that numpy uses float64 by default but tensorflow uses float32, so you need to convert your function's output to float32 before you can turn it into a tensorflow function; otherwise tensorflow will complain. This is why we need to make np_d_spiky_32 first.
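The dtype mismatch described above can be seen with numpy alone. This is a small illustrative sketch, not part of the original recipe: it shows that a default numpy array is float64, that the vectorized derivative returns an integer array (because d_spiky returns Python ints), and that `.astype(np.float32)` produces the dtype declared in the `Tout` argument.

```python
import numpy as np

def d_spiky(v):
    return 1 if v % 1 <= 0.5 else 0

np_d_spiky = np.vectorize(d_spiky)

x = np.array([0.2, 0.7, 1.2, 1.7])
print(x.dtype)                    # float64

raw = np_d_spiky(x)               # integer dtype, inferred from the int return values
as_f32 = raw.astype(np.float32)   # what the np_d_spiky_32 wrapper hands back
print(raw.dtype, as_f32.dtype)
```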

What about the Gradients?

The problem with only doing the above is that even though we now have tf_d_spiky, the tensorflow version of np_d_spiky, we couldn't use it as an activation function even if we wanted to, because tensorflow doesn't know how to calculate the gradients of that function.

Hack to get Gradients:

As explained in the sources mentioned above, there is a hack to define the gradients of a function using tf.RegisterGradient [doc] and tf.Graph.gradient_override_map [doc]. Copying the code from harpone, we can modify the tf.py_func function to make it define the gradient at the same time:

    def py_func(func, inp, Tout, stateful=True, name=None, grad=None):
        # Need to generate a unique name to avoid duplicates:
        rnd_name = 'PyFuncGrad' + str(np.random.randint(0, 1E+8))

        tf.RegisterGradient(rnd_name)(grad)  # see _MySquareGrad for grad example
        g = tf.get_default_graph()
        with g.gradient_override_map({"PyFunc": rnd_name}):
            return tf.py_func(func, inp, Tout, stateful=stateful, name=name)

Now we are almost done. The only thing left is that the grad function we pass to the above py_func function needs to take a special form. It takes in an operation and the gradients flowing back into that operation's outputs, and it returns the gradients propagated backward to the operation's inputs.

Gradient Function:

So for our spiky activation function, this is how we would do it:

    def spikygrad(op, grad):
        x = op.inputs[0]

        n_gr = tf_d_spiky(x)
        return grad * n_gr

The activation function has only one input, which is why x = op.inputs[0]. If the operation had many inputs, we would need to return a tuple, one gradient for each input. For example, if the operation was a - b, the gradient with respect to a is +1 and with respect to b is -1, so we would have return +1*grad, -1*grad. Notice that we need to return tensorflow functions of the input, which is why we need tf_d_spiky; np_d_spiky would not have worked because it cannot act on tensorflow tensors. Alternatively, we could have written the derivative using tensorflow functions:

    def spikygrad2(op, grad):
        x = op.inputs[0]

        r = tf.mod(x, 1)
        n_gr = tf.to_float(tf.less_equal(r, 0.5))
        return grad * n_gr
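To make the multi-input case mentioned above concrete, here is a plain numpy illustration of the arithmetic only. It is not a tensorflow gradient function (a real one would take `op` and `grad` and return tensors); the names `sub_backward` and `upstream` are hypothetical and exist just to show one returned gradient per input.

```python
import numpy as np

def sub_backward(grad):
    # For y = a - b: dy/da = +1 and dy/db = -1,
    # so each input receives the upstream gradient times its local derivative.
    return +1 * grad, -1 * grad

upstream = np.array([0.5, 2.0, -1.0])   # gradient arriving from later ops
grad_a, grad_b = sub_backward(upstream)
```

The tuple order matches the order of the operation's inputs, which is the same convention the gradient function has to follow.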

Combining it all together:

Now that we have all the pieces, we can combine them:

    np_spiky_32 = lambda x: np_spiky(x).astype(np.float32)

    def tf_spiky(x, name=None):
        with tf.name_scope(name, "spiky", [x]) as name:
            y = py_func(np_spiky_32,
                        [x],
                        [tf.float32],
                        name=name,
                        grad=spikygrad)  # <-- here's the call to the gradient
            return y[0]

And now we are done. And we can test it.

Test:

    with tf.Session() as sess:
        x = tf.constant([0.2, 0.7, 1.2, 1.7])
        y = tf_spiky(x)
        tf.initialize_all_variables().run()

        print(x.eval(), y.eval(), tf.gradients(y, [x])[0].eval())

    [ 0.2 0.69999999 1.20000005 1.70000005] [ 0.2 0. 0.20000005 0.] [ 1. 0. 1. 0.]

Success!