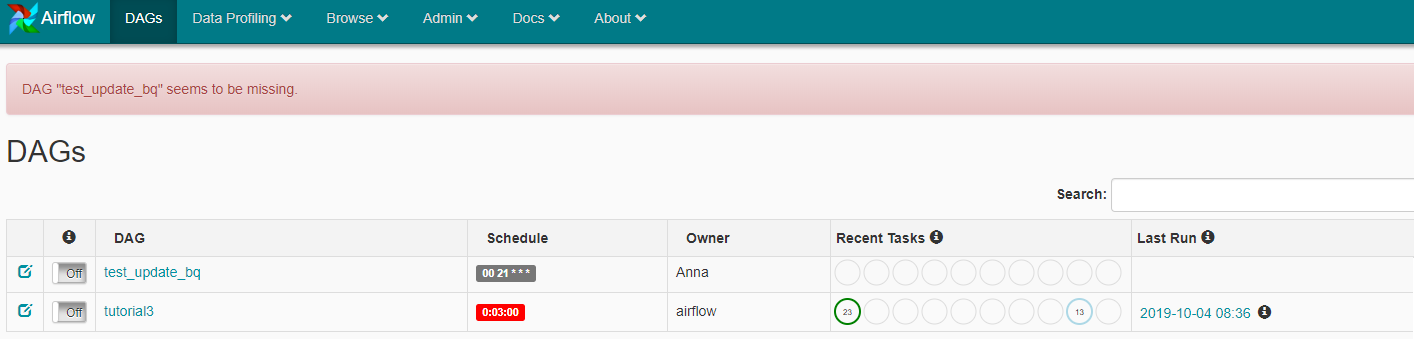

I want to run a simple DAG, `test_update_bq`, but when I open the Airflow web UI on localhost I see this message: DAG "test_update_bq" seems to be missing.

There are no errors when I run `airflow initdb`. When I test the task with `airflow test test_update_bq update_table_sql 2015-06-01`, it completes successfully and the table is updated in BigQuery. The DAG:

    from airflow import DAG
    from airflow.contrib.operators.bigquery_operator import BigQueryOperator
    from datetime import datetime, timedelta

    default_args = {
        'owner': 'Anna',
        'depends_on_past': True,
        'start_date': datetime(2017, 6, 2),
        'email': ['[email protected]'],
        'email_on_failure': True,
        'email_on_retry': False,
        'retries': 5,
        'retry_delay': timedelta(minutes=5),
    }

    schedule_interval = "00 21 * * *"

    # Define DAG: Set ID and assign default args and schedule interval
    dag = DAG(
        'test_update_bq',
        default_args=default_args,
        schedule_interval=schedule_interval,
        template_searchpath=['/home/ubuntu/airflow/dags/sql_bq'],
    )

    update_task = BigQueryOperator(
        dag=dag,
        allow_large_results=True,
        task_id='update_table_sql',
        sql='update_bq.sql',
        use_legacy_sql=False,
        bigquery_conn_id='test',
    )

    update_task

I would be grateful for any help.

Scheduler log (/logs/scheduler):

    [2019-10-10 11:28:53,308] {logging_mixin.py:95} INFO - [2019-10-10 11:28:53,308] {dagbag.py:90} INFO - Filling up the DagBag from /home/ubuntu/airflow/dags/update_bq.py
    [2019-10-10 11:28:53,333] {scheduler_job.py:1532} INFO - DAG(s) dict_keys(['test_update_bq']) retrieved from /home/ubuntu/airflow/dags/update_bq.py
    [2019-10-10 11:28:53,383] {scheduler_job.py:152} INFO - Processing /home/ubuntu/airflow/dags/update_bq.py took 0.082 seconds
    [2019-10-10 11:28:56,315] {logging_mixin.py:95} INFO - [2019-10-10 11:28:56,315] {settings.py:213} INFO - settings.configure_orm(): Using pool settings. pool_size=5, max_overflow=10, pool_recycle=3600, pid=11761
    [2019-10-10 11:28:56,318] {scheduler_job.py:146} INFO - Started process (PID=11761) to work on /home/ubuntu/airflow/dags/update_bq.py
    [2019-10-10 11:28:56,324] {scheduler_job.py:1520} INFO - Processing file /home/ubuntu/airflow/dags/update_bq.py for tasks to queue
    [2019-10-10 11:28:56,325] {logging_mixin.py:95} INFO - [2019-10-10 11:28:56,325] {dagbag.py:90} INFO - Filling up the DagBag from /home/ubuntu/airflow/dags/update_bq.py
    [2019-10-10 11:28:56,350] {scheduler_job.py:1532} INFO - DAG(s) dict_keys(['test_update_bq']) retrieved from /home/ubuntu/airflow/dags/update_bq.py
    [2019-10-10 11:28:56,399] {scheduler_job.py:152} INFO - Processing /home/ubuntu/airflow/dags/update_bq.py took 0.081 seconds
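
As far as I can tell from these lines, the scheduler does parse the file and find the DAG, so the problem seems to be on the web UI side. To check this over a longer log, here is a minimal sketch that pulls out the DAG ids the scheduler reports per file. The function name `dags_seen_by_scheduler` and the regular expression are my own assumptions based on the line format shown above; they are not part of Airflow, and the format may differ in other versions.

```python
import re

# Assumed line format (taken from the scheduler log above):
#   ... INFO - DAG(s) dict_keys(['test_update_bq']) retrieved from /path/to/file.py
RETRIEVED = re.compile(
    r"DAG\(s\) dict_keys\(\[(?P<ids>.*?)\]\) retrieved from (?P<path>\S+)"
)


def dags_seen_by_scheduler(log_text):
    """Map each DAG file path to the set of DAG ids the scheduler reported for it."""
    seen = {}
    for match in RETRIEVED.finditer(log_text):
        # The ids appear as a quoted, comma-separated list inside dict_keys([...]).
        ids = re.findall(r"'([^']+)'", match.group("ids"))
        seen.setdefault(match.group("path"), set()).update(ids)
    return seen


# One line copied from the log above, used as sample input.
sample = (
    "[2019-10-10 11:28:53,333] {scheduler_job.py:1532} INFO - DAG(s) "
    "dict_keys(['test_update_bq']) retrieved from "
    "/home/ubuntu/airflow/dags/update_bq.py\n"
)

print(dags_seen_by_scheduler(sample))
```

If `test_update_bq` shows up here for `update_bq.py`, the file itself is being loaded by the scheduler, which matches the successful `airflow test` run.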