In one sentence, regularization makes the model perform worse on training data so that it may perform better on holdout data.

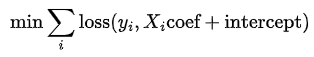

Logistic regression is an optimization problem where the following objective function is minimized.

![func1]()

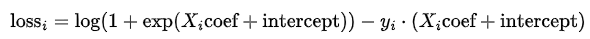

where the loss function looks like the following (at least for `solver='lbfgs'`).

![loss func]()

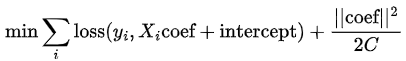

Regularization adds a norm of the coefficients to this function. The following implements the L2 penalty.

![func2]()
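
Written out in LaTeX, the L2-penalized objective can be sketched as follows. This assumes the formulas follow the form given in the scikit-learn user guide for binary logistic regression; the symbols $w$, $b$, $x_i$, $y_i \in \{0, 1\}$ and $\hat{p}_i$ are notation chosen here for illustration and may not match the original images exactly.

$$
\hat{p}_i = \frac{1}{1 + e^{-(x_i^\top w + b)}}, \qquad
\ell(y_i, \hat{p}_i) = -y_i \log \hat{p}_i - (1 - y_i) \log(1 - \hat{p}_i)
$$

$$
\min_{w,\, b} \; \sum_{i=1}^{n} \ell(y_i, \hat{p}_i) + \frac{1}{2C} \lVert w \rVert_2^2
$$

Up to a positive constant factor, this is the same problem as minimizing $C \sum_i \ell(y_i, \hat{p}_i) + \tfrac{1}{2} \lVert w \rVert_2^2$, so both forms have the same minimizer.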

From the equation, it's clear that the regularization term penalizes large coefficients (the minimization problem solves for the coefficients that minimize the objective function). Since the size of each coefficient depends on the scale of its corresponding variable, the data should be scaled so that the regularization penalizes each variable equally. C is the inverse of the regularization strength: as C increases, the regularization term becomes smaller (and for extremely large C values, it's as if there is no regularization at all).
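
The effect of C on the coefficients can be checked directly. The following is a minimal sketch, assuming a small synthetic data set (the sample size, feature count, `random_state` and the list `Cs` are arbitrary choices for illustration, not part of the example further down): it fits the same model for a few values of C and prints the L2 norm of the fitted coefficients.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.preprocessing import StandardScaler

# hypothetical toy data, only for illustrating the effect of C
X, y = make_classification(n_samples=300, n_features=20, random_state=0)

# scale the features so that the penalty treats each of them equally
X = StandardScaler().fit_transform(X)

# fit one model per value of C and record the L2 norm of its coefficients
Cs = [1e-4, 1e-2, 1.0, 100.0]
norms = []
for C in Cs:
    model = LogisticRegression(C=C, max_iter=1000).fit(X, y)
    norms.append(float(np.linalg.norm(model.coef_)))

for C, norm in zip(Cs, norms):
    print(f"C={C:g}  ||w||_2={norm:.4f}")
```

The printed norms should grow as C grows, since a larger C means a weaker penalty on the coefficients.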

If the initial model is overfit (as in, it fits the training data too well), then adding a strong regularization term (with a small C value) makes the model perform worse on the training data, but constraining the coefficients this way can improve the model's performance on unseen (or test) data.

An example with 1000 samples and 200 features is shown below. As can be seen from the plot of accuracy over different values of C, if C is large (with very little regularization), there is a big gap between how the model performs on training data and on test data. As C decreases, the model performs worse on training data but better on test data (test accuracy increases). When C becomes too small (that is, the regularization becomes too strong), however, the model begins performing worse again because the regularization term now completely dominates the objective function.

![C vs accuracy]()

Code used to produce the graph:

    import pandas as pd
    from sklearn.datasets import make_classification
    from sklearn.model_selection import train_test_split
    from sklearn.preprocessing import StandardScaler
    from sklearn.linear_model import LogisticRegression

    # make sample data
    X, y = make_classification(1000, 200, n_informative=195, random_state=2023)

    # split into train-test datasets
    X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=2023)

    # normalize the data
    sc = StandardScaler()
    X_train = sc.fit_transform(X_train)
    X_test = sc.transform(X_test)

    # train Logistic Regression models for different values of C
    # and collect train and test accuracies
    scores = {}
    for C in (10**k for k in range(-6, 6)):
        lr = LogisticRegression(C=C)
        lr.fit(X_train, y_train)
        scores[C] = {'train accuracy': lr.score(X_train, y_train),
                     'test accuracy': lr.score(X_test, y_test)}

    # plot the accuracy scores for different values of C
    pd.DataFrame.from_dict(scores, 'index').plot(logx=True, xlabel='C', ylabel='accuracy');
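
Since the best C sits somewhere between the two extremes, one common way to pick it is cross-validation on the training data. The following is a minimal sketch of that idea using `GridSearchCV`. It is not part of the code that produced the graph; the grid of C values, `cv=5` and `max_iter=1000` are assumptions chosen for illustration.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# same synthetic data and split as in the example above
X, y = make_classification(n_samples=1000, n_features=200,
                           n_informative=195, random_state=2023)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=2023)

# putting the scaler inside the pipeline means it is re-fit on each
# training fold, so the validation folds do not leak into the scaling
pipe = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))

# illustrative grid of C values (an assumption, not a recommendation)
param_grid = {'logisticregression__C': [10.0**k for k in range(-4, 3)]}

# 5-fold cross-validation over the grid, using training data only
search = GridSearchCV(pipe, param_grid, cv=5, scoring='accuracy')
search.fit(X_train, y_train)

best_C = search.best_params_['logisticregression__C']
test_accuracy = search.score(X_test, y_test)
print(f"best C: {best_C:g}, test accuracy: {test_accuracy:.3f}")
```

The test set is only used once at the end, so the reported test accuracy is not influenced by the search over C.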