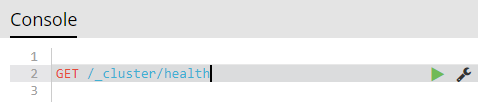

The _cluster/health API can report far more than the default output most people see from it:

    $ curl -XGET 'localhost:9200/_cluster/health?pretty'

Most Elasticsearch APIs accept a variety of arguments that augment their output, and the Cluster Health API is no exception.

Examples

Health of all indices:

    $ curl -XGET 'localhost:9200/_cluster/health?level=indices&pretty' | head -50
    {
      "cluster_name" : "rdu-es-01",
      "status" : "green",
      "timed_out" : false,
      "number_of_nodes" : 9,
      "number_of_data_nodes" : 6,
      "active_primary_shards" : 1106,
      "active_shards" : 2213,
      "relocating_shards" : 0,
      "initializing_shards" : 0,
      "unassigned_shards" : 0,
      "delayed_unassigned_shards" : 0,
      "number_of_pending_tasks" : 0,
      "number_of_in_flight_fetch" : 0,
      "task_max_waiting_in_queue_millis" : 0,
      "active_shards_percent_as_number" : 100.0,
      "indices" : {
        "filebeat-6.5.1-2019.06.10" : {
          "status" : "green",
          "number_of_shards" : 3,
          "number_of_replicas" : 1,
          "active_primary_shards" : 3,
          "active_shards" : 6,
          "relocating_shards" : 0,
          "initializing_shards" : 0,
          "unassigned_shards" : 0
        },
        "filebeat-6.5.1-2019.06.11" : {
          "status" : "green",
          "number_of_shards" : 3,
          "number_of_replicas" : 1,
          "active_primary_shards" : 3,
          "active_shards" : 6,
          "relocating_shards" : 0,
          "initializing_shards" : 0,
          "unassigned_shards" : 0
        },
        "filebeat-6.5.1-2019.06.12" : {
          "status" : "green",
          "number_of_shards" : 3,
          "number_of_replicas" : 1,
          "active_primary_shards" : 3,
          "active_shards" : 6,
          "relocating_shards" : 0,
          "initializing_shards" : 0,
          "unassigned_shards" : 0
        },
        "filebeat-6.5.1-2019.06.13" : {
          "status" : "green",
          "number_of_shards" : 3,

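On a cluster with over a thousand shards, the per-index listing gets long, so it can help to print only the indices that are not green. The following is a minimal sketch, not part of the original answer: the function name `non_green_indices` is made up for illustration, and the awk filter assumes the pretty-printed layout shown above (one key per line, `"name" : {` opening each index). If `jq` is installed, a proper JSON parser would be more robust than this line-based approach.

```shell
# Hypothetical helper: reads `level=indices&pretty` output on stdin and
# prints "index: status" for every index whose status is not green.
# Assumes the pretty-printed layout (one key per line); not a real JSON parser.
non_green_indices() {
  awk -F'"' '
    # A line ending in " : {" opens an object; remember its key.
    / : [{]$/ { name = $2; next }
    # The first "status" line after an index name belongs to that index.
    # The top-level status is skipped because no name has been seen yet.
    /"status" :/ && name != "" && name != "indices" {
      if ($4 != "green") print name ": " $4
      name = ""
    }
  '
}
```

It would be used as `curl -s -XGET 'localhost:9200/_cluster/health?level=indices&pretty' | non_green_indices`. The filter is meant for `level=indices` output only; with `level=shards` it would also pick up the per-shard objects.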
Health of all shards:

    $ curl -XGET 'localhost:9200/_cluster/health?level=shards&pretty' | head -50
    {
      "cluster_name" : "rdu-es-01",
      "status" : "green",
      "timed_out" : false,
      "number_of_nodes" : 9,
      "number_of_data_nodes" : 6,
      "active_primary_shards" : 1106,
      "active_shards" : 2213,
      "relocating_shards" : 0,
      "initializing_shards" : 0,
      "unassigned_shards" : 0,
      "delayed_unassigned_shards" : 0,
      "number_of_pending_tasks" : 0,
      "number_of_in_flight_fetch" : 0,
      "task_max_waiting_in_queue_millis" : 0,
      "active_shards_percent_as_number" : 100.0,
      "indices" : {
        "filebeat-6.5.1-2019.06.10" : {
          "status" : "green",
          "number_of_shards" : 3,
          "number_of_replicas" : 1,
          "active_primary_shards" : 3,
          "active_shards" : 6,
          "relocating_shards" : 0,
          "initializing_shards" : 0,
          "unassigned_shards" : 0,
          "shards" : {
            "0" : {
              "status" : "green",
              "primary_active" : true,
              "active_shards" : 2,
              "relocating_shards" : 0,
              "initializing_shards" : 0,
              "unassigned_shards" : 0
            },
            "1" : {
              "status" : "green",
              "primary_active" : true,
              "active_shards" : 2,
              "relocating_shards" : 0,
              "initializing_shards" : 0,
              "unassigned_shards" : 0
            },
            "2" : {
              "status" : "green",
              "primary_active" : true,
              "active_shards" : 2,
              "relocating_shards" : 0,
              "initializing_shards" : 0,
              "unassigned_shards" : 0

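The API also has a variety of wait_* options (for example wait_for_status, wait_for_nodes and wait_for_no_relocating_shards) that make the call block until the cluster reaches the requested state or the specified timeout expires, whichever comes first. If the timeout is hit, the response reports "timed_out" : true.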
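As a rough sketch of how the wait options might be used in a script, the two functions below build a `wait_for_status` request and turn the `timed_out` field into an exit code. The function names, the default host and the default values `yellow` and `30s` are assumptions chosen for illustration; only the `wait_for_status`, `timeout` and `pretty` parameters come from the API itself.

```shell
# Hypothetical helper: builds a health URL that blocks until the cluster
# reaches the given status. Arguments: host (default localhost:9200),
# status (default yellow), timeout (default 30s). Defaults are illustrative.
wait_health_url() {
  host=${1:-localhost:9200}
  status=${2:-yellow}
  timeout=${3:-30s}
  printf 'http://%s/_cluster/health?wait_for_status=%s&timeout=%s&pretty' \
    "$host" "$status" "$timeout"
}

# Hypothetical helper: issues the request, prints the response, and returns
# 0 if the status was reached, 1 if the wait timed out or curl failed.
# Relies on the pretty-printed form of the "timed_out" field.
wait_for_health() {
  body=$(curl -s -XGET "$(wait_health_url "$@")") || return 1
  printf '%s\n' "$body"
  case $body in
    *'"timed_out" : false'*) return 0 ;;
    *) return 1 ;;
  esac
}
```

A deployment script could then run something like `wait_for_health localhost:9200 green 60s || echo "cluster not green yet"`. Note that waiting for `yellow` also succeeds when the cluster is already green, since the API waits for the given status or better.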
