"Column Tetris"

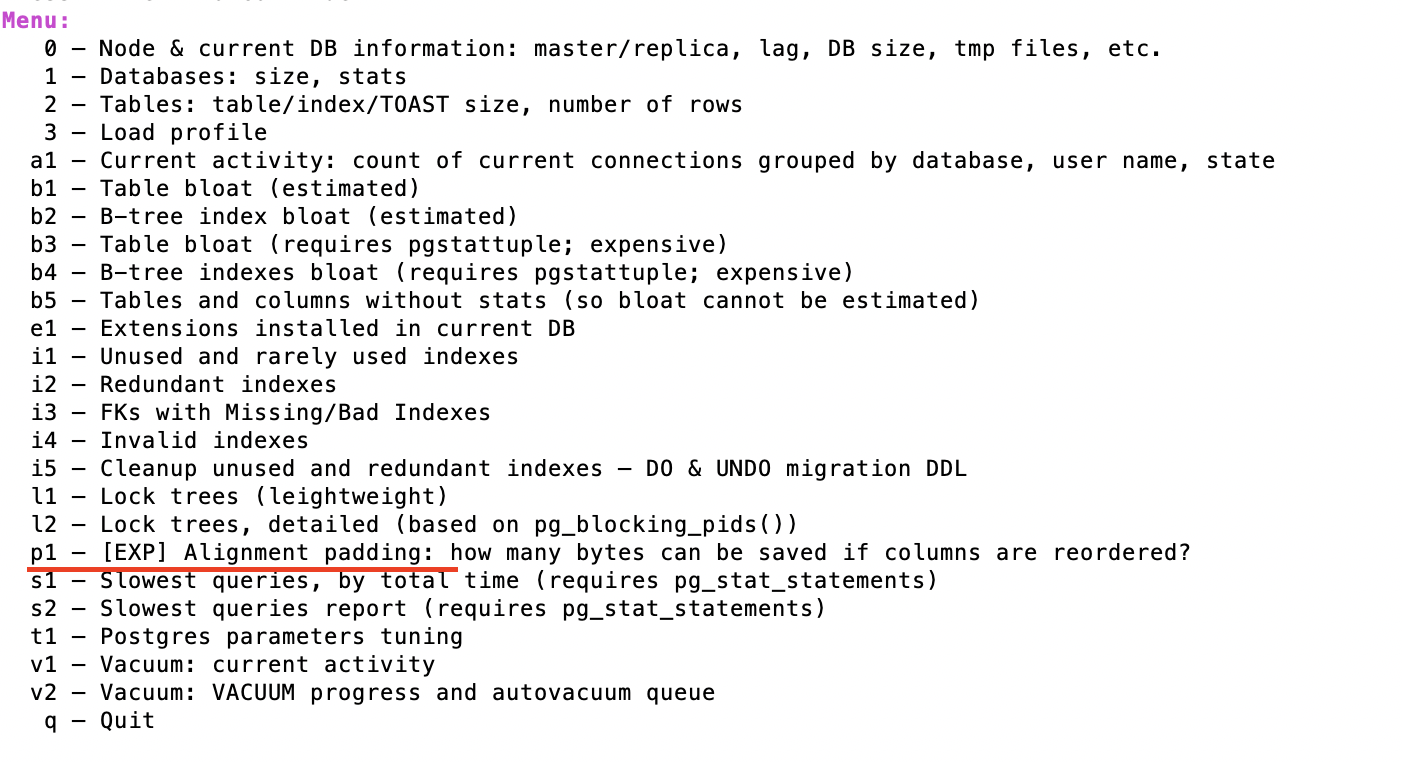

Actually, you can do something, but this requires a deeper understanding. The keyword is alignment padding: every data type has specific alignment requirements.

You can minimize space lost to padding between columns by ordering them favorably. The following (extreme) example would waste a lot of physical disk space:

```sql
CREATE TABLE t (
  e int2
  -- 6 bytes of padding after int2
, a int8
, f int2
  -- 6 bytes of padding after int2
, b int8
, g int2
  -- 6 bytes of padding after int2
, c int8
, h int2
  -- 6 bytes of padding after int2
, d int8);
```

To save 24 bytes per row, use instead:

```sql
CREATE TABLE t (
  a int8
, b int8
, c int8
, d int8
, e int2
, f int2
, g int2
, h int2);  -- 4 int2 occupy 8 bytes (MAXALIGN), no padding at the end
```
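
To make the padding arithmetic of the two tables explicit, here is a small Python sketch that lays out fixed-width columns in order and rounds each start offset up to the type's alignment. It is a simplified model, not PostgreSQL code: it assumes a 64-bit platform (MAXALIGN = 8), no NULL values, and only the few fixed-width types listed in the hypothetical `TYPES` table.

```python
MAXALIGN = 8  # assumption: typical 64-bit platform

# (storage size, alignment) in bytes for a few fixed-width types
TYPES = {
    "bool": (1, 1),
    "int2": (2, 2),
    "int4": (4, 4),
    "int8": (8, 8),
    "uuid": (16, 1),  # char alignment: never needs padding
}


def align(offset, alignment):
    """Round offset up to the next multiple of alignment."""
    return (offset + alignment - 1) // alignment * alignment


def data_size(columns):
    """Return (bytes occupied by the user data, of which padding) for a row
    of non-NULL fixed-width columns laid out in the given order."""
    offset = padding = 0
    for typ in columns:
        size, alignment = TYPES[typ]
        start = align(offset, alignment)
        padding += start - offset
        offset = start + size
    # The next tuple on the page starts at a MAXALIGN boundary,
    # so the space up to that boundary is lost as well.
    end = align(offset, MAXALIGN)
    padding += end - offset
    return end, padding


bad = ["int2", "int8"] * 4                 # e, a, f, b, g, c, h, d
good = ["int8"] * 4 + ["int2"] * 4         # a, b, c, d, e, f, g, h
print(data_size(bad))   # (64, 24)
print(data_size(good))  # (40, 0)
```

The difference of 64 - 40 = 24 bytes per row is the saving quoted above.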


As a rule of thumb, if you put 8-byte columns first, then 4-byte and 2-byte columns, and 1-byte columns last, you can't go wrong.
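
The rule of thumb amounts to a stable sort by alignment, largest first. A minimal Python sketch, assuming the sizes and alignments of a typical 64-bit platform; the column names are hypothetical and the sizes are not read from a live catalog:

```python
# (storage size, alignment) in bytes; assumed values for a 64-bit platform
COLUMN_TYPES = {"int8": (8, 8), "int4": (4, 4), "int2": (2, 2), "bool": (1, 1)}


def padded_size(columns):
    """Bytes used by fixed-width columns in order, incl. padding between them
    (without the final MAXALIGN rounding of the whole tuple)."""
    offset = 0
    for _name, typ in columns:
        size, alignment = COLUMN_TYPES[typ]
        offset = -(-offset // alignment) * alignment  # round up to alignment
        offset += size
    return offset


def tetris_order(columns):
    """Stable sort: larger alignment first, original order kept among equals."""
    return sorted(columns, key=lambda col: -COLUMN_TYPES[col[1]][1])


cols = [("active", "bool"), ("id", "int8"), ("qty", "int2"),
        ("ref", "int4"), ("total", "int8")]
print(padded_size(cols))                          # 32
print(padded_size(tetris_order(cols)))            # 23
print([name for name, _ in tetris_order(cols)])   # ['id', 'total', 'ref', 'qty', 'active']
```

Because the sort is stable, columns of the same width keep their original relative order, which keeps the reordered table readable.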

boolean, uuid (!) and a few other types need no alignment padding. text, varchar and other "varlena" (variable length) types nominally require "int" alignment (4 bytes on most machines). But I observed no alignment padding in the on-disk format (unlike in RAM). Eventually, I found the explanation in a note in the source code:

> Note also that we allow the nominal alignment to be violated when storing "packed" varlenas; the TOAST mechanism takes care of hiding that from most code.

So "int" alignment is only enforced when the (possibly compressed) datum including a single leading length-byte exceeds 127 bytes. Then varlena storage switches to four leading bytes and requires "int" alignment.
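
The varlena rule just described can be modeled in a few lines of Python. This is a simplified sketch that ignores compression, TOAST pointers and columns with `STORAGE PLAIN`; the function name `place_varlena` is hypothetical:

```python
SHORT_MAX = 127  # max total size of a "packed" varlena, 1-byte header included
INT_ALIGN = 4    # nominal "int" alignment on most machines


def place_varlena(offset, data_len):
    """Return (start, end) offsets of a varlena datum placed at `offset`.

    Packed (1-byte header): stored without any alignment padding.
    Otherwise: 4-byte header, start padded to a 4-byte boundary.
    """
    if data_len + 1 <= SHORT_MAX:
        return offset, offset + 1 + data_len
    start = -(-offset // INT_ALIGN) * INT_ALIGN
    return start, start + 4 + data_len


# A 1-byte bool column (offset 0) followed by text values of different lengths:
print(place_varlena(1, 10))   # (1, 12): packed, no padding
print(place_varlena(1, 200))  # (4, 208): 3 bytes of padding, 4-byte header
```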

Normally, you may save a couple of bytes per row at best playing "column tetris". None of this is necessary in most cases. But with billions of rows it can mean a couple of gigabytes easily.
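
A back-of-the-envelope calculation in Python helps to judge the scale. It assumes each tuple occupies a MAXALIGN'ed slot (8 bytes on a 64-bit OS) and ignores page-level effects; the tuple lengths are hypothetical. Note that shaving off 2 bytes can save 8 bytes per row or nothing at all, depending on whether the tuple drops below an 8-byte boundary:

```python
MAXALIGN = 8  # assumption: 64-bit platform
GIB = 1024 ** 3


def maxalign(n):
    """Round n up to a multiple of MAXALIGN."""
    return -(-n // MAXALIGN) * MAXALIGN


def saved_bytes(old_tuple_len, new_tuple_len, rows):
    """Bytes saved across `rows` tuples; each tuple occupies a MAXALIGN'ed slot."""
    return (maxalign(old_tuple_len) - maxalign(new_tuple_len)) * rows


rows = 1_000_000_000
# Hypothetical tuple lengths (24-byte header + user data):
for old, new in [(24 + 64, 24 + 40), (24 + 42, 24 + 40), (24 + 46, 24 + 44)]:
    print(f"{old} -> {new} bytes: {saved_bytes(old, new, rows) / GIB:.1f} GiB saved")
```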

You can test the actual column / row size with the function pg_column_size().

Some types occupy more space in RAM than on disk (compressed or "packed" format). You can get bigger results for constants (RAM format) than for table columns when testing the same value (or row of values vs. table row) with pg_column_size().

Finally, some types can be compressed or "toasted" (stored out of line) or both.

Where possible, move NOT NULL columns to the front, and columns with many NULL values to the rear. NULL values are served from the null bitmap directly, so their position in the row has no bearing on the access cost of NULL values, but they add a tiny cost for computing the offset of columns positioned to the right (further back in the row).
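
A simplified Python sketch of why NULL columns affect the columns behind them: a NULL value only sets a bit in the null bitmap and occupies no bytes in the data area, so the offset of every later column depends on which earlier columns are NULL. It covers fixed-width types only and assumes typical alignments; the column names are hypothetical:

```python
# (storage size, alignment) in bytes; assumed values for a 64-bit platform
TYPES = {"int8": (8, 8), "int4": (4, 4), "int2": (2, 2)}


def offsets(columns, values):
    """Map column name -> offset in the data area, or None if the value is NULL."""
    result = {}
    offset = 0
    for (name, typ), value in zip(columns, values):
        if value is None:
            result[name] = None  # only a bit in the null bitmap, no data bytes
            continue
        size, alignment = TYPES[typ]
        offset = -(-offset // alignment) * alignment  # round up to alignment
        result[name] = offset
        offset += size
    return result


cols = [("a", "int4"), ("b", "int4"), ("c", "int4")]
print(offsets(cols, [1, 2, 3]))     # {'a': 0, 'b': 4, 'c': 8}
print(offsets(cols, [None, 2, 3]))  # {'a': None, 'b': 0, 'c': 4}
print(offsets(cols, [1, 2, None]))  # {'a': 0, 'b': 4, 'c': None}
```

A NULL in the last column leaves the offsets of all other columns unchanged, which is the point of moving mostly-NULL columns to the rear.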

Overhead per tuple (row)

4 bytes per row for the item identifier, which is not subject to the above considerations.

And at least 24 bytes (23 + padding) for the tuple header. The manual on Database Page Layout:

> There is a fixed-size header (occupying 23 bytes on most machines), followed by an optional null bitmap, an optional object ID field, and the user data.

For the padding between header and user data, you need to know MAXALIGN on your server - typically 8 bytes on a 64-bit OS (or 4 bytes on a 32-bit OS). If you are not sure, check out pg_controldata.

Run the following in your Postgres binary dir to get a definitive answer:

```shell
./pg_controldata /path/to/my/dbcluster
```

The manual:

> The actual user data (columns of the row) begins at the offset indicated by t_hoff, which must always be a multiple of the MAXALIGN distance for the platform.
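
Putting the quoted pieces together, this Python sketch computes t_hoff and the per-row footprint on a page. It assumes no object ID field and a null bitmap of one bit per column that is only present when the row contains NULLs; MAXALIGN defaults to 8 (64-bit OS) and can be set to 4 for a 32-bit OS. The function names are hypothetical:

```python
FIXED_HEADER = 23  # fixed-size tuple header on most machines
ITEM_ID = 4        # item identifier per row


def align_up(n, maxalign=8):
    """Round n up to a multiple of MAXALIGN."""
    return -(-n // maxalign) * maxalign


def t_hoff(natts, has_nulls, maxalign=8):
    """Offset where user data begins: header + optional null bitmap, MAXALIGN'ed."""
    size = FIXED_HEADER
    if has_nulls:
        size += (natts + 7) // 8  # null bitmap: one bit per column
    return align_up(size, maxalign)


def row_footprint(natts, has_nulls, data_len, maxalign=8):
    """Bytes per row on a page: item identifier + MAXALIGN'ed tuple."""
    return ITEM_ID + align_up(t_hoff(natts, has_nulls, maxalign) + data_len, maxalign)


print(t_hoff(8, has_nulls=True))   # 24: the bitmap fits into the padding byte
print(t_hoff(9, has_nulls=True))   # 32: a 2-byte bitmap pushes data to the next boundary
print(row_footprint(8, False, 40)) # 68
```

With up to 8 columns, a row with NULL values costs no extra header space on a 64-bit OS, because the bitmap fits into the byte that would otherwise be padding.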

So you typically get the storage optimum by packing data in multiples of 8 bytes.

There is nothing to gain in the example you posted. It's already packed tightly. 2 bytes of padding after the last int2, 4 bytes at the end. You could consolidate the padding to 6 bytes at the end, which wouldn't change anything.

Overhead per data page

Data page size is typically 8 KB. There is some overhead / bloat at this level, too: remainders not big enough to fit another tuple and, more importantly, dead rows or a percentage reserved with the FILLFACTOR setting.
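
A rough Python estimate of how many tuples fit on a page. Assumptions, all labeled: an 8192-byte page, a 24-byte page header, a 4-byte item identifier per tuple, tuples padded to MAXALIGN = 8, FILLFACTOR reserving `(100 - fillfactor)` percent of the page, and the 291-tuple limit of an 8 kB heap page. Dead rows are not modeled:

```python
PAGE_SIZE = 8192
PAGE_HEADER = 24
ITEM_ID = 4
MAX_TUPLES_PER_PAGE = 291  # limit for 8 kB heap pages


def tuples_per_page(tuple_len, fillfactor=100):
    """Rough estimate: (tuples per page, unusable remainder in bytes)."""
    tuple_len = -(-tuple_len // 8) * 8                  # MAXALIGN
    reserved = PAGE_SIZE * (100 - fillfactor) // 100    # kept free for updates
    usable = PAGE_SIZE - PAGE_HEADER - reserved
    n = min(usable // (tuple_len + ITEM_ID), MAX_TUPLES_PER_PAGE)
    remainder = usable - n * (tuple_len + ITEM_ID)
    return n, remainder


print(tuples_per_page(64))      # (120, 8)
print(tuples_per_page(88))      # (88, 72)
print(tuples_per_page(64, 90))  # (108, 5)
```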

There are a couple of other factors for size on disk to take into account:

Array types?

With an array type like the one you were evaluating, you would add 24 bytes of overhead for the array type. Plus, array elements occupy space as usual. Nothing to gain there.
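
Where the 24 bytes come from, as a Python sketch of the in-memory size of an array without NULL elements: a 4-byte length word, ndim, data offset and element type OID (4 bytes each), then dimension and lower bound (4 bytes each) per dimension, rounded up to MAXALIGN. It assumes a fixed-width element type whose size is a multiple of its alignment (e.g. int4, int8); stored in a table, a small array may get a 1-byte "packed" length header instead, so its on-disk size can be a bit smaller:

```python
def array_size(n_elements, elem_size, ndim=1, maxalign=8):
    """Approximate in-memory size of an array without NULL elements."""
    header = 16 + 8 * ndim                          # fixed part + dims/lower bounds
    header = -(-header // maxalign) * maxalign      # rounded up to MAXALIGN
    return header + n_elements * elem_size


print(array_size(3, 4))          # 36: 24-byte header + 3 x int4
print(array_size(4, 4, ndim=2))  # 48: 32-byte header + 4 x int4
```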
