Benchmarks

I tested the most interesting candidates:

- Initially with Postgres 9.4 and 9.5.

- Added accented tests for Postgres 13 later.

Basic test setup

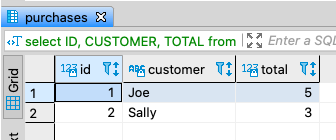

Main table: purchases:

CREATE TABLE purchases (
  id          serial  -- PK constraint added below
, customer_id int     -- REFERENCES customer
, total       int     -- could be amount of money in Cent
, some_column text    -- to make the row bigger, more realistic
);

Dummy data (with some dead tuples), PK, index:

INSERT INTO purchases (customer_id, total, some_column)    -- 200k rows
SELECT (random() * 10000)::int             AS customer_id  -- 10k distinct customers
     , (random() * random() * 100000)::int AS total
     , 'note: ' || repeat('x', (random()^2 * random() * random() * 500)::int)
FROM   generate_series(1,200000) g;

ALTER TABLE purchases ADD CONSTRAINT purchases_id_pkey PRIMARY KEY (id);

DELETE FROM purchases WHERE random() > 0.9;  -- some dead rows

INSERT INTO purchases (customer_id, total, some_column)
SELECT (random() * 10000)::int             AS customer_id  -- 10k customers
     , (random() * random() * 100000)::int AS total
     , 'note: ' || repeat('x', (random()^2 * random() * random() * 500)::int)
FROM   generate_series(1,20000) g;  -- add 20k to make it ~ 200k

CREATE INDEX purchases_3c_idx ON purchases (customer_id, total DESC, id);

VACUUM ANALYZE purchases;

customer table - used for optimized query:

CREATE TABLE customer AS
SELECT customer_id, 'customer_' || customer_id AS customer
FROM   purchases
GROUP  BY 1
ORDER  BY 1;

ALTER TABLE customer ADD CONSTRAINT customer_customer_id_pkey PRIMARY KEY (customer_id);

VACUUM ANALYZE customer;

In my second test for 9.5 I used the same setup, but with 100000 distinct customer_id to get few rows per customer_id.
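A minimal sketch of how that second data set could be generated, assuming the only change from the basic setup is the range of the random customer_id (the multiplier 100000 is taken from the sentence above; the rest repeats the basic setup). With 200k draws from that range, many values are hit more than once and some not at all, which fits the 86446 distinct customers reported below. The check query at the end is an addition for illustration, not part of the original test.

```sql
-- Sketch only: same INSERT as in the basic setup, but customer_id is drawn
-- from a range of 100000 instead of 10000 (assumed to be the only change).
INSERT INTO purchases (customer_id, total, some_column)
SELECT (random() * 100000)::int            AS customer_id  -- wider range, fewer rows per customer
     , (random() * random() * 100000)::int AS total
     , 'note: ' || repeat('x', (random()^2 * random() * random() * 500)::int)
FROM   generate_series(1,200000) g;

-- Illustrative check of the resulting distribution
SELECT count(*)                    AS row_count
     , count(DISTINCT customer_id) AS distinct_customers
     , round(count(*)::numeric / count(DISTINCT customer_id), 1) AS avg_rows_per_customer
FROM   purchases;
```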

Object sizes for table purchases

Basic setup: 200k rows in purchases, 10k distinct customer_id, avg. 20 rows per customer.

For Postgres 9.5 I added a 2nd test with 86446 distinct customers - avg. 2.3 rows per customer.

Generated with a query taken from here:

Gathered for Postgres 9.5:

               what                | bytes/ct | bytes_pretty | bytes_per_row
-----------------------------------+----------+--------------+---------------
 core_relation_size                | 20496384 | 20 MB        |           102
 visibility_map                    |        0 | 0 bytes      |             0
 free_space_map                    |    24576 | 24 kB        |             0
 table_size_incl_toast             | 20529152 | 20 MB        |           102
 indexes_size                      | 10977280 | 10 MB        |            54
 total_size_incl_toast_and_indexes | 31506432 | 30 MB        |           157
 live_rows_in_text_representation  | 13729802 | 13 MB        |            68
 ------------------------------    |          |              |
 row_count                         |   200045 |              |
 live_tuples                       |   200045 |              |
 dead_tuples                       |    19955 |              |
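The query that produced the table above is not reproduced here. As a rough sketch, and not the original query, most of the byte figures can be obtained with PostgreSQL's built-in size functions. The column aliases simply mirror the row labels of the table.

```sql
-- Sketch only: built-in size functions, one column per row label of the table above.
SELECT pg_relation_size('purchases')         AS core_relation_size   -- main fork only
     , pg_relation_size('purchases', 'vm')   AS visibility_map
     , pg_relation_size('purchases', 'fsm')  AS free_space_map
     , pg_table_size('purchases')            AS table_size_incl_toast
     , pg_indexes_size('purchases')          AS indexes_size
     , pg_total_relation_size('purchases')   AS total_size_incl_toast_and_indexes
     , pg_size_pretty(pg_total_relation_size('purchases')) AS total_pretty;
```

Dividing any of these by count(*) from the table gives a bytes-per-row figure comparable to the last column.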

Queries

1. row_number() in CTE

WITH cte AS (
   SELECT id, customer_id, total
        , row_number() OVER (PARTITION BY customer_id ORDER BY total DESC) AS rn
   FROM   purchases
   )
SELECT id, customer_id, total
FROM   cte
WHERE  rn = 1;

2. row_number() in subquery (my optimization)

SELECT id, customer_id, total
FROM  (
   SELECT id, customer_id, total
        , row_number() OVER (PARTITION BY customer_id ORDER BY total DESC) AS rn
   FROM   purchases
   ) sub
WHERE  rn = 1;

3. DISTINCT ON

SELECT DISTINCT ON (customer_id)
       id, customer_id, total
FROM   purchases
ORDER  BY customer_id, total DESC, id;

4. rCTE with LATERAL subquery (see here)

WITH RECURSIVE cte AS (
   (  -- parentheses required
   SELECT id, customer_id, total
   FROM   purchases
   ORDER  BY customer_id, total DESC
   LIMIT  1
   )
   UNION ALL
   SELECT u.*
   FROM   cte c
   ,      LATERAL (
      SELECT id, customer_id, total
      FROM   purchases
      WHERE  customer_id > c.customer_id  -- lateral reference
      ORDER  BY customer_id, total DESC
      LIMIT  1
      ) u
   )
SELECT id, customer_id, total
FROM   cte
ORDER  BY customer_id;

5. customer table with LATERAL (see here)

SELECT l.*
FROM   customer c
,      LATERAL (
   SELECT id, customer_id, total
   FROM   purchases
   WHERE  customer_id = c.customer_id  -- lateral reference
   ORDER  BY total DESC
   LIMIT  1
   ) l;

6. array_agg() with ORDER BY

SELECT (array_agg(id ORDER BY total DESC))[1] AS id
     , customer_id
     , max(total) AS total
FROM   purchases
GROUP  BY customer_id;

Results

Execution time for the above queries with EXPLAIN (ANALYZE, TIMING OFF, COSTS OFF), best of 5 runs, to compare with a warm cache.

All queries used an Index Only Scan on purchases_3c_idx (among other steps). Some benefited only from the smaller size of the index, others used it more effectively.
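For reference, a timing run of this kind might look like the following, shown here for the DISTINCT ON query. TIMING OFF drops the per-node timing overhead, but the total "Execution Time" is still reported at the end of the plan, and the plan output also shows whether an Index Only Scan was chosen. Repeating the statement a few times and keeping the best run warms the cache.

```sql
-- Run several times and take the best "Execution Time" from the output.
EXPLAIN (ANALYZE, TIMING OFF, COSTS OFF)
SELECT DISTINCT ON (customer_id)
       id, customer_id, total
FROM   purchases
ORDER  BY customer_id, total DESC, id;
```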

A. Postgres 9.4 with 200k rows and ~ 20 per customer_id

1. 273.274 ms

2. 194.572 ms

3. 111.067 ms

4. 92.922 ms -- !

5. 37.679 ms -- winner

6. 189.495 ms

B. Same as A. with Postgres 9.5

1. 288.006 ms

2. 223.032 ms

3. 107.074 ms

4. 78.032 ms -- !

5. 33.944 ms -- winner

6. 211.540 ms

C. Same as B., but with ~ 2.3 rows per customer_id

1. 381.573 ms

2. 311.976 ms

3. 124.074 ms -- winner

4. 710.631 ms

5. 311.976 ms

6. 421.679 ms

Retest with Postgres 13 on 2021-08-11

Simplified test setup: no deleted rows, because VACUUM ANALYZE cleans the table completely for the simple case.

Important changes for Postgres:

- General performance improvements.

- CTEs can be inlined since Postgres 12, so queries 1. and 2. now perform mostly identically (same query plan).
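To see the pre-12 behavior of query 1 on a current version, the CTE can be marked MATERIALIZED, which prevents inlining. This is only a sketch for comparison and was not part of the benchmark above:

```sql
-- Postgres 12 or later: MATERIALIZED forces the CTE to be computed separately,
-- as it always was before Postgres 12.
WITH cte AS MATERIALIZED (
   SELECT id, customer_id, total
        , row_number() OVER (PARTITION BY customer_id ORDER BY total DESC) AS rn
   FROM   purchases
   )
SELECT id, customer_id, total
FROM   cte
WHERE  rn = 1;
```

Without the keyword, a non-recursive, side-effect-free CTE that is referenced only once is folded into the outer query, which is why queries 1. and 2. end up with the same plan.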

D. Like B. ~ 20 rows per customer_id

1. 103 ms

2. 103 ms

3. 23 ms -- winner

4. 71 ms

5. 22 ms -- winner

6. 81 ms

db<>fiddle here

E. Like C. ~ 2.3 rows per customer_id

1. 127 ms

2. 126 ms

3. 36 ms -- winner

4. 620 ms

5. 145 ms

6. 203 ms

db<>fiddle here

Accented tests with Postgres 13

1M rows, 10,000 vs. 100 vs. 1.6 rows per customer.

F. with ~ 10,000 rows per customer

1. 526 ms

2. 527 ms

3. 127 ms

4. 2 ms -- winner !

5. 1 ms -- winner !

6. 356 ms

db<>fiddle here

G. with ~ 100 rows per customer

1. 535 ms

2. 529 ms

3. 132 ms

4. 108 ms -- !

5. 71 ms -- winner

6. 376 ms

db<>fiddle here

H. with ~ 1.6 rows per customer

1. 691 ms

2. 684 ms

3. 234 ms -- winner

4. 4669 ms

5. 1089 ms

6. 1264 ms

db<>fiddle here

Conclusions

DISTINCT ON uses the index effectively and typically performs best for few rows per group. And it performs decently even with many rows per group.

For many rows per group, emulating an index skip scan with an rCTE performs best - second only to the query technique with a separate lookup table (if that's available).

The row_number() technique demonstrated in the currently accepted answer never wins any performance test. Not then, not now. It never comes even close to DISTINCT ON, not even when the data distribution is unfavorable for the latter. The only good thing about row_number(): its scaling is not terrible, merely mediocre.

More benchmarks

Benchmark by "ogr" with 10M rows and 60k unique "customers" on Postgres 11.5. Results are in line with what we have seen so far:

Original (outdated) benchmark from 2011

I ran three tests with PostgreSQL 9.1 on a real-life table of 65579 rows, with single-column btree indexes on each of the three columns involved, and took the best execution time of 5 runs.

Comparing @OMGPonies' first query (A) to the above DISTINCT ON solution (B):

- Select the whole table, resulting in 5958 rows in this case.

A: 567.218 ms

B: 386.673 ms

- Use condition WHERE customer BETWEEN x AND y, resulting in 1000 rows.

A: 249.136 ms

B: 55.111 ms

- Select a single customer with WHERE customer = x.

A: 0.143 ms

B: 0.072 ms

Same test repeated with the index described in the other answer:

CREATE INDEX purchases_3c_idx ON purchases (customer, total DESC, id);

1A: 277.953 ms

1B: 193.547 ms

2A: 249.796 ms -- special index not used

2B: 28.679 ms

3A: 0.120 ms

3B: 0.048 ms
