Can someone explain to me, in simple English or some other easy way, why merge sort has O(n log n) complexity?

On a "traditional" merge sort, each pass through the data doubles the size of the sorted subsections. After the first pass, the file will be sorted into sections of length two. After the second pass, length four. Then eight, sixteen, etc. up to the size of the file.

It's necessary to keep doubling the size of the sorted sections until there's one section comprising the whole file. It will take lg(N) doublings of the section size to reach the file size, and each pass of the data will take time proportional to the number of records.
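To see the doubling in action, here is a minimal sketch of such a pass-based ("bottom-up") merge sort in Python. The function name `bottom_up_merge_sort` and the `passes` counter are illustrative assumptions, and the standard library's `heapq.merge` stands in for a hand-written merge of two sorted runs.

```python
from heapq import merge  # standard-library merge of already-sorted iterables


def bottom_up_merge_sort(records):
    """Sort by repeatedly merging adjacent sorted runs; also return the pass count."""
    data = list(records)
    width = 1   # current length of each sorted run
    passes = 0
    while width < len(data):
        merged = []
        # Merge neighbouring runs of length `width` into runs of length 2 * width.
        for start in range(0, len(data), 2 * width):
            left = data[start:start + width]
            right = data[start + width:start + 2 * width]
            merged.extend(merge(left, right))
        data = merged
        width *= 2
        passes += 1
    return data, passes


sorted_data, passes = bottom_up_merge_sort([7, 2, 8, 1, 6, 3, 5, 4])
print(sorted_data, passes)
```

With 8 records the run width goes 1, 2, 4, 8, so the loop makes 3 = lg(8) passes, and each pass touches every record once.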

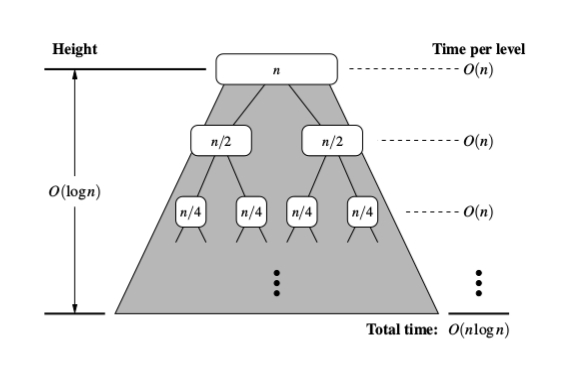

Merge sort uses the divide-and-conquer approach to solve the sorting problem. First, it divides the input in half using recursion. After dividing, it sorts the halves and merges them into one sorted output. See the figure above.

It means that it is better to sort each half of your problem first and then run a simple merge subroutine. So it is important to know the complexity of the merge subroutine and how many times it will be called in the recursion.

The pseudo-code for the merge subroutine is really simple.

    # C = output [length = N]
    # A = 1st sorted half [N/2]
    # B = 2nd sorted half [N/2]
    # (end-of-array checks omitted for brevity)
    i = j = 1
    for k = 1 to N
        if A[i] < B[j]
            C[k] = A[i]
            i++
        else
            C[k] = B[j]
            j++

It is easy to see that every loop iteration performs 4 operations: k++, i++ or j++, the if statement, and the assignment C[k] = A[i] or C[k] = B[j]. So you will have at most 4N + 2 operations (the extra 2 being the initialisation of i and j), giving O(N) complexity. For the sake of the proof, 4N + 2 will be treated as 6N, since 4N + 2 <= 6N holds for every N >= 1.
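As a rough check of the "one loop iteration per output element" claim, here is a hedged Python sketch of the merge step. The name `merge_counting` and the `iterations` counter are assumptions added for illustration; unlike the pseudo-code it uses 0-based indices, includes the end-of-array checks, and uses `<=` so that equal elements keep their order.

```python
def merge_counting(a, b):
    """Merge sorted lists a and b; return the merged list and the loop-iteration count."""
    c = []
    i = j = 0  # 0-based, unlike the 1-based pseudo-code
    iterations = 0
    for _ in range(len(a) + len(b)):
        iterations += 1
        # Take from a when b is exhausted, or when a still has elements
        # and its current element is not larger than b's.
        if j >= len(b) or (i < len(a) and a[i] <= b[j]):
            c.append(a[i])
            i += 1
        else:
            c.append(b[j])
            j += 1
    return c, iterations


print(merge_counting([1, 4, 7], [2, 3, 9]))
```

The loop body runs exactly len(a) + len(b) times with a constant amount of work per iteration, which is where the linear bound comes from.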

So assume you have an input with N elements and assume N is a power of 2. At every level you have twice as many subproblems as at the previous level, each with an input half as long. This means that at level j = 0, 1, 2, ..., lgN there will be 2^j subproblems, each with an input of length N / 2^j. The number of operations at each level j will be less than or equal to

2^j * 6(N / 2^j) = 6N

Observe that it doesn't matter which level you look at: you will always have at most 6N operations.

Since there are lgN + 1 levels, the complexity will be

O(6N * (lgN + 1)) = O(6N*lgN + 6N) = O(n lgN)
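The level-by-level argument can be checked with a small instrumented sort. This is only a sketch: `merge_sort_levels` and the `work` dictionary are hypothetical names, and "work" here simply counts how many elements the calls at each recursion level handle.

```python
from collections import defaultdict


def merge_sort_levels(values, level=0, work=None):
    """Top-down merge sort that tallies elements handled at each recursion level."""
    if work is None:
        work = defaultdict(int)
    work[level] += len(values)  # this call handles len(values) elements at its level
    if len(values) <= 1:
        return list(values), work
    mid = len(values) // 2
    left, _ = merge_sort_levels(values[:mid], level + 1, work)
    right, _ = merge_sort_levels(values[mid:], level + 1, work)
    merged, i, j = [], 0, 0
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    merged.extend(left[i:])   # at most one of these two still has elements
    merged.extend(right[j:])
    return merged, work


result, work = merge_sort_levels([5, 2, 7, 1, 8, 3, 6, 4])
print(result)
print(dict(work))
```

For 8 elements the tally has lg(8) + 1 = 4 levels and each level accounts for all 8 elements, matching the "same amount of work at every level" observation.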


n lowercase but the second N uppercase? Is there any significance in that? – Fincher

2^j * 6(N / 2^j) = 6N has 2 more operations. Well, they don't matter, but in that case it should look like 2^j * 6(N / 2^j) + 2 = 6N and, as you said, there will be at most 6N operations. – Lucius

After splitting the array down to the stage where you have single elements (call them sublists), at each stage we compare the elements of each sublist with those of its adjacent sublist. For example, [reusing @Davi's image]:

- At Stage-1, each element is compared with its adjacent one, so n/2 comparisons.

- At Stage-2, each sublist is merged with its adjacent sublist. Since each sublist is already sorted, merging two sublists of length m needs at most 2m - 1 comparisons: at most 3 for the length-2 sublists at Stage-2, 7 at Stage-3, 15 at Stage-4, and so on, since the sublists keep doubling in length. With n/(2m) pairs of sublists per stage, the max number of comparisons at each stage is (2m - 1) * n/(2m), which is less than n.

- As you've observed, the total number of stages is log(n) base 2.

So the total complexity would be == (max number of comparisons at each stage * number of stages) == O(n*log(n)).

This is because, whether it is the worst case or the average case, merge sort always divides the array into two halves at each stage, which gives the lg(n) factor; the n factor comes from the comparisons made at each stage. Combining them gives O(n lg n). The lg(n) factor is present in both the average and the worst case, and only the number of comparisons per stage varies with the input. In the worst case, about n comparisons happen at each stage for an input of size n, so the total is O(n lg n).
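One way to convince yourself is to count comparisons directly. The sketch below is an illustration, not a reference implementation; `count_comparisons` is a made-up name and the two test inputs are chosen by hand, one already sorted and one interleaved so that every merge goes down to its last pair of elements.

```python
import math


def count_comparisons(values):
    """Return (sorted list, number of element comparisons) for a top-down merge sort."""
    if len(values) <= 1:
        return list(values), 0
    mid = len(values) // 2
    left, left_cmp = count_comparisons(values[:mid])
    right, right_cmp = count_comparisons(values[mid:])
    merged, i, j = [], 0, 0
    comparisons = left_cmp + right_cmp
    while i < len(left) and j < len(right):
        comparisons += 1
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    merged.extend(left[i:])
    merged.extend(right[j:])
    return merged, comparisons


n = 8
_, already_sorted = count_comparisons(list(range(n)))
_, interleaved = count_comparisons([0, 4, 2, 6, 1, 5, 3, 7])
bound = n * math.log2(n)
print(already_sorted, interleaved, bound)
```

For n = 8 the sorted input needs 12 comparisons and the interleaved one 17, both under n * lg(n) = 24. The number of stages is the same in both cases; only the comparisons per stage change.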

Many of the other answers are great, but I didn't see any mention of height and depth related to the "merge-sort tree" examples. Here is another way of approaching the question with a lot of focus on the tree. Here's another image to help explain:

Just a recap: as other answers have pointed out we know that the work of merging two sorted slices of the sequence runs in linear time (the merge helper function that we call from the main sorting function).

Now looking at this tree, where we can think of each descendant of the root (other than the root) as a recursive call to the sorting function, let's try to assess how much time we spend on each node... Since the slicing of the sequence and merging (both together) take linear time, the running time of any node is linear with respect to the length of the sequence at that node.

Here's where tree depth comes in. If n is the total size of the original sequence, the size of the sequence at any node is n/2^i, where i is the depth. This is shown in the image above. Putting this together with the linear amount of work for each slice, we have a running time of O(n/2^i) for every node in the tree. Now we just have to sum that up over all the nodes. One way to do this is to recognize that there are 2^i nodes at each level of depth in the tree. So for any level, we have O(2^i * n/2^i), which is O(n) because we can cancel out the 2^i's! If each depth is O(n), we just have to multiply that by the height of this binary tree, which is log n. Answer: O(n log n)
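Written out as a sum over the depths, and assuming for simplicity that n is a power of 2 and that the work at a node of size m is at most c·m for some constant c (c is a symbol introduced here, not one from the book), the argument reads roughly as:

```latex
\sum_{i=0}^{\log_2 n} 2^i \cdot c\,\frac{n}{2^i}
  \;=\; \sum_{i=0}^{\log_2 n} c\,n
  \;=\; c\,n\,(\log_2 n + 1)
  \;=\; O(n \log n)
```

The extra "+ 1" accounts for the leaf level and only adds a linear term, so it does not change the result.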

reference: Data Structures and Algorithms in Python

The recursive tree will have depth log(N), and at each level in that tree you will do a combined N work to merge one or more pairs of sorted arrays.

- You recursively split the starting array into halves until you end up with N arrays that each contain a single element. Because there is just one element, these arrays are technically sorted; this is important.

- Now you unwind the recursion by recombining each of these sorted arrays with an O(N) merge algorithm (shown below).

Merging sorted arrays

To merge two sorted arrays A[1,5] and B[3,4] you simply iterate both starting at the beginning, picking the lowest element between the two arrays and incrementing the pointer for that array. You're done when both pointers reach the end of their respective arrays.

The ^ represents the respective index when iterating each array.

    [1,5] [3,4] --> []
     ^     ^
    [1,5] [3,4] --> [1]
       ^   ^
    [1,5] [3,4] --> [1,3]
       ^     ^
    [1,5] [3,4] --> [1,3,4]
       ^      x
    [1,5] [3,4] --> [1,3,4,5]
        x     x

Runtime = O(A + B)

Merge sort illustration

Your recursive call stack will look like this. The work starts at the bottom leaf nodes and bubbles up.

    [1,5,3,4]          beginning: N = 4, depth k = log(4) = 2
    [1,5] [3,4]        depth = k-1: (2^1 nodes) * (N/2^1 values to merge per node) == N
    [1] [5] [3] [4]    depth = k:   (2^2 nodes) * (N/2^2 values to merge per node) == N

Thus you do N work at each of k levels in the tree, where k = log(N)

N * k = N * log(N)

The MergeSort algorithm takes three steps:

- The divide step computes the mid position of the sub-array, which takes constant time O(1).

- The conquer step recursively sorts two sub-arrays of approximately n/2 elements each.

- The combine step merges a total of n elements at each pass, requiring at most n comparisons, so it takes O(n).

The algorithm requires approximately log n passes to sort an array of n elements, so the total time complexity is O(n log n).
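The three steps can also be summarised as a recurrence. As a sketch, assume n is a power of 2 and that divide plus combine together cost at most c·n for some constant c (both are simplifying assumptions, with c introduced only for this illustration):

```latex
T(n) = 2\,T\!\left(\frac{n}{2}\right) + c\,n, \qquad T(1) = c

T(n) = 4\,T\!\left(\frac{n}{4}\right) + 2\,c\,n
     = \dots
     = 2^k\,T\!\left(\frac{n}{2^k}\right) + k\,c\,n

\text{with } k = \log_2 n:\quad
T(n) = c\,n + c\,n \log_2 n = O(n \log n)
```

Each unrolling step adds another c·n, and the unrolling stops after log2(n) steps, when the sub-arrays have a single element.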

Let's take an example of 8 elements, {1,2,3,4,5,6,7,8}.

1. First divide it in half, n/2 = 4: ({1,2,3,4} {5,6,7,8}). These two sections take O(n/2) and O(n/2) time, so the first step takes O(n/2 + n/2) = O(n) time.
2. The next step divides into n/2^2: (({1,2} {3,4}) ({5,6} {7,8})), which take O(n/4), O(n/4), O(n/4), O(n/4) respectively, so this step takes a total of O(n/4 + n/4 + n/4 + n/4) = O(n) time.
3. Similarly, the next step divides the result of the second step by 2 again, n/2^3: (({1},{2},{3},{4}) ({5},{6},{7},{8})), whose time is O(n/8 + n/8 + n/8 + n/8 + n/8 + n/8 + n/8 + n/8) = O(n).

So every step takes O(n) time. Let the number of steps be a; then the time taken by merge sort is O(a*n), and a must be log(n) because each step divides by 2. So finally the TC of merge sort is O(n log(n)).
