Seaborn's creator has unfortunately stated that he won't add such a feature. Below are some options. (The last section contains my original suggestion, which was a hack that used private implementation details of seaborn and was not particularly flexible.)

Simple alternative version of regplot

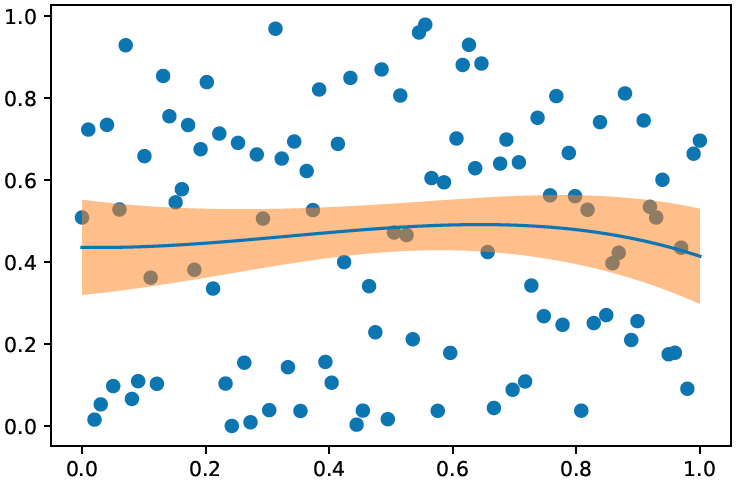

The following function overlays a fit line on a scatter plot and returns the results from statsmodels. This supports the simplest and perhaps most common usage for sns.regplot, but does not implement any of the fancier functionality.

import statsmodels.api as sm

def simple_regplot(

x, y, n_std=2, n_pts=100, ax=None, scatter_kws=None, line_kws=None, ci_kws=None

):

""" Draw a regression line with error interval. """

ax = plt.gca() if ax is None else ax

# calculate best-fit line and interval

x_fit = sm.add_constant(x)

fit_results = sm.OLS(y, x_fit).fit()

eval_x = sm.add_constant(np.linspace(np.min(x), np.max(x), n_pts))

pred = fit_results.get_prediction(eval_x)

# draw the fit line and error interval

ci_kws = {} if ci_kws is None else ci_kws

ax.fill_between(

eval_x[:, 1],

pred.predicted_mean - n_std * pred.se_mean,

pred.predicted_mean + n_std * pred.se_mean,

alpha=0.5,

**ci_kws,

)

line_kws = {} if line_kws is None else line_kws

h = ax.plot(eval_x[:, 1], pred.predicted_mean, **line_kws)

# draw the scatterplot

scatter_kws = {} if scatter_kws is None else scatter_kws

ax.scatter(x, y, c=h[0].get_color(), **scatter_kws)

return fit_results

The results from statsmodels contain a wealth of information, e.g.:

>>> print(fit_results.summary())

OLS Regression Results

==============================================================================

Dep. Variable: y R-squared: 0.477

Model: OLS Adj. R-squared: 0.471

Method: Least Squares F-statistic: 89.23

Date: Fri, 08 Jan 2021 Prob (F-statistic): 1.93e-15

Time: 17:56:00 Log-Likelihood: -137.94

No. Observations: 100 AIC: 279.9

Df Residuals: 98 BIC: 285.1

Df Model: 1

Covariance Type: nonrobust

==============================================================================

coef std err t P>|t| [0.025 0.975]

------------------------------------------------------------------------------

const -0.1417 0.193 -0.735 0.464 -0.524 0.241

x1 3.1456 0.333 9.446 0.000 2.485 3.806

==============================================================================

Omnibus: 2.200 Durbin-Watson: 1.777

Prob(Omnibus): 0.333 Jarque-Bera (JB): 1.518

Skew: -0.002 Prob(JB): 0.468

Kurtosis: 2.396 Cond. No. 4.35

==============================================================================

Notes:

[1] Standard Errors assume that the covariance matrix of the errors is correctly specified.

A drop-in replacement (almost) for sns.regplot

The advantage of the method above over my original answer below is that it's easy to extend it to more complex fits.

Shameless plug: here is such an extended regplot function that I wrote that implements a large fraction of sns.regplot's functionality: https://github.com/ttesileanu/pydove.

While some features are still missing, the function I wrote

- allows flexibility by separating the plotting from the statistical modeling (and you also get easy access to the fitting results).

- is much faster for large datasets because it lets

statsmodels calculate confidence intervals instead of using bootstrapping.

- allows for slightly more diverse fits (e.g., polynomials in

log(x)).

- allows for slightly more fine-grained plotting options.

Old answer

Seaborn's creator has unfortunately stated that he won't add such a feature, so here's a workaround.

def regplot(

*args,

line_kws=None,

marker=None,

scatter_kws=None,

**kwargs

):

# this is the class that `sns.regplot` uses

plotter = sns.regression._RegressionPlotter(*args, **kwargs)

# this is essentially the code from `sns.regplot`

ax = kwargs.get("ax", None)

if ax is None:

ax = plt.gca()

scatter_kws = {} if scatter_kws is None else copy.copy(scatter_kws)

scatter_kws["marker"] = marker

line_kws = {} if line_kws is None else copy.copy(line_kws)

plotter.plot(ax, scatter_kws, line_kws)

# unfortunately the regression results aren't stored, so we rerun

grid, yhat, err_bands = plotter.fit_regression(plt.gca())

# also unfortunately, this doesn't return the parameters, so we infer them

slope = (yhat[-1] - yhat[0]) / (grid[-1] - grid[0])

intercept = yhat[0] - slope * grid[0]

return slope, intercept

Note that this only works for linear regression because it simply infers the slope and intercept from the regression results. The nice thing is that it uses seaborn's own regression class and so the results are guaranteed to be consistent with what's shown. The downside is of course that we're using a private implementation detail in seaborn that can break at any point.