Using R, I am trying to scrape a web page and save the text, which is in Japanese, to a file. Ultimately this needs to be scaled to tackle hundreds of pages on a daily basis. I already have a workable solution in Perl, but I am trying to migrate the script to R to reduce the cognitive load of switching between multiple languages. So far I am not succeeding. Related questions seem to be this one on saving csv files and this one on writing Hebrew to an HTML file. However, I haven't been successful in cobbling together a solution based on the answers there. Edit: this question on UTF-8 output from R is also relevant but was not resolved.

The pages are from Yahoo! Japan Finance, and my Perl code looks like this:

    use strict;
    use HTML::Tree;
    use LWP::Simple;
    #use Encode;
    use utf8;

    binmode STDOUT, ":utf8";

    # Pages to scrape (indices start at 0 so the list has no undefined entry)
    my @arr_links = ();
    $arr_links[0] = "http://stocks.finance.yahoo.co.jp/stocks/detail/?code=7203";
    $arr_links[1] = "http://stocks.finance.yahoo.co.jp/stocks/detail/?code=7201";

    foreach my $link (@arr_links){
        $link =~ s/"//gi;
        print("$link\n");

        # Download the page and reduce it to plain text
        my $content = get($link);
        my $tree = HTML::Tree->new();
        $tree->parse($content);
        my $bar = $tree->as_text;

        # "or" rather than "||" so that die applies to open, not to join
        open OUTFILE, ">>:utf8", join("","c:/", substr($link, -4),"_perl.txt") or die "Cannot open output file: $!";
        print OUTFILE $bar;
        close OUTFILE;
    }

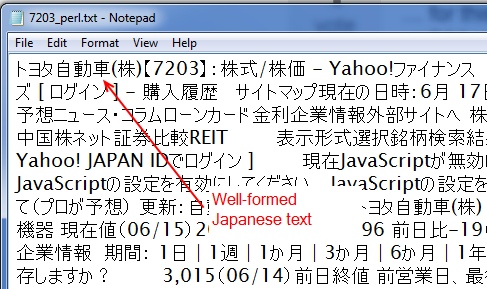

This Perl script produces a CSV file that looks like the screenshot below, with proper kanji and kana that can be mined and manipulated offline:

My R code, such as it is, looks like the following. It is not an exact duplicate of the Perl solution just given: it doesn't strip out the HTML and leave only the text (this answer suggests an approach using R, but it doesn't work for me in this case), and it doesn't have the loop and so on. The intent is the same, though.

    require(RCurl)
    require(XML)

    links <- list()
    links[1] <- "http://stocks.finance.yahoo.co.jp/stocks/detail/?code=7203"
    links[2] <- "http://stocks.finance.yahoo.co.jp/stocks/detail/?code=7201"

    txt <- getURL(links, .encoding = "UTF-8")
    Encoding(txt) <- "bytes"
    write.table(txt, "c:/geturl_r.txt", quote = FALSE, row.names = FALSE, sep = "\t", fileEncoding = "UTF-8")

This R script generates the output shown in the screenshot below. Basically rubbish.
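For completeness, the loop and file naming from the Perl version, which the R script leaves out, might be sketched as follows. This only covers the naming: the helper `out_path` and the `_r.txt` suffix are hypothetical names chosen to mirror `substr($link, -4)` and `_perl.txt` in the Perl script, and the download and write steps are deliberately left as a placeholder.

```r
# Same two pages as in the scripts above.
links <- c(
  "http://stocks.finance.yahoo.co.jp/stocks/detail/?code=7203",
  "http://stocks.finance.yahoo.co.jp/stocks/detail/?code=7201"
)

# Hypothetical helper: take the last four characters of the URL (the stock
# code), as substr($link, -4) does in Perl, and build an output path from it.
out_path <- function(link, dir = "c:/", suffix = "_r.txt") {
  n <- nchar(link)
  paste0(dir, substr(link, n - 3, n), suffix)
}

# One output path per link.
paths <- vapply(links, out_path, character(1), USE.NAMES = FALSE)

for (i in seq_along(links)) {
  # The download and write steps would go here; only the naming is shown.
  message(links[i], " -> ", paths[i])
}
```

R's `substr` does not accept a negative start the way Perl's does, which is why the start position is computed from `nchar`.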

I assume that there is some combination of HTML, text and file encoding that will allow me to generate in R a similar result to that of the Perl solution, but I cannot find it. The header of the HTML page I'm trying to scrape says the charset is utf-8, and I have set the encoding in the getURL call and in the write.table function to utf-8, but this alone isn't enough.
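The file-writing half of the problem can be isolated from the download with a minimal sketch like the one below. It is not taken from either script above: the sample string, the temporary file and the choice of `writeLines(..., useBytes = TRUE)` on a binary-mode connection are all assumptions, used only to check whether UTF-8 bytes reach the disk unchanged whatever the session locale is. Whether the text returned by getURL behaves the same way is a separate question.

```r
# Hypothetical stand-in for the downloaded page: a short Japanese string
# built from Unicode escapes, so the script itself stays ASCII-only.
txt <- "\u30c8\u30e8\u30bf\u81ea\u52d5\u8eca"

# Make sure the string is held as UTF-8 before writing.
txt_utf8 <- enc2utf8(txt)

# Write the bytes as they are, without re-encoding to the native locale.
out_file <- tempfile(fileext = ".txt")
con <- file(out_file, open = "wb")
writeLines(txt_utf8, con, useBytes = TRUE)
close(con)

# Read the file back, declaring it as UTF-8, and compare the raw bytes.
back <- readLines(out_file, encoding = "UTF-8")
same_bytes <- identical(charToRaw(back), charToRaw(txt_utf8))
print(same_bytes)
```

Comparing raw bytes rather than printed text keeps the check independent of what the console is able to display.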

The question: How can I scrape the above web page using R and save the text as CSV in "well-formed" Japanese text rather than something that looks like line noise?

Edit: I have added a further screenshot to show what happens when I omit the Encoding step. I get what look like Unicode codes, but not the graphical representation of the characters. It may be some kind of locale-related issue, but in the exact same locale the Perl script does provide useful output. So this is still puzzling.
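To see what the Encoding step changes, a small diagnostic along these lines may help. It is only a sketch on a made-up two-character string (an assumption, not text from the scraped page): it compares the declared encoding and the underlying bytes before and after the string is marked as "bytes".

```r
# Hypothetical sample text built from Unicode escapes.
s <- "\u682a\u4fa1"

# Declared encoding and underlying bytes of the original string.
enc_before <- Encoding(s)
raw_before <- charToRaw(s)

# Mark a copy as "bytes", as the script in the question does.
b <- s
Encoding(b) <- "bytes"
enc_after <- Encoding(b)
raw_after <- charToRaw(b)

# The marking changes, but the stored bytes should not.
print(enc_before)
print(enc_after)
print(identical(raw_before, raw_after))

# Printing the two versions shows how each marking is rendered in this session.
print(s)
print(b)
```

If the bytes are identical and only the rendering differs, the "bytes" marking is a plausible suspect for the escaped output, though that is an inference rather than something confirmed here.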

My session info:

    R version 2.15.0 Patched (2012-05-24 r59442)
    Platform: i386-pc-mingw32/i386 (32-bit)

    locale:
    [1] LC_COLLATE=English_United Kingdom.1252
    [2] LC_CTYPE=English_United Kingdom.1252
    [3] LC_MONETARY=English_United Kingdom.1252
    [4] LC_NUMERIC=C
    [5] LC_TIME=English_United Kingdom.1252

    attached base packages:
    [1] stats     graphics  grDevices utils     datasets  methods   base

[…] Encoding(txt) <- "bytes" and it works well in my environment. – Jota

[…] Encoding(); unfortunately I was unsuccessful. – Insistence