What is Apache Parquet?

Apache Parquet is a binary file format that stores data in a columnar fashion.

Data inside a Parquet file is similar to an RDBMS style table where you have columns and rows. But instead of accessing the data one row at a time, you typically access it one column at a time.

Apache Parquet is one of the modern big data storage formats. It has several advantages, some of which are:

- Columnar storage: efficient data retrieval, efficient compression, etc...

- Metadata is at the end of the file: allows Parquet files to be generated from a stream of data. (common in big data scenarios)

- Supported by all Apache big data products

Do I need Hadoop or HDFS?

No. Parquet files can be stored in any file system, not just HDFS. As mentioned above it is a file format. So it's just like any other file where it has a name and a .parquet extension. What will usually happen in big data environments though is that one dataset will be split (or partitioned) into multiple parquet files for even more efficiency.

All Apache big data products support Parquet files by default. So that is why it might seem like it only can exist in the Apache ecosystem.

How can I create/read Parquet Files?

As mentioned, all current Apache big data products such as Hadoop, Hive, Spark, etc. support Parquet files by default.

So it's possible to leverage these systems to generate or read Parquet data. But this is far from practical. Imagine that in order to read or create a CSV file you had to install Hadoop/HDFS + Hive and configure them. Luckily there are other solutions.

To create your own parquet files:

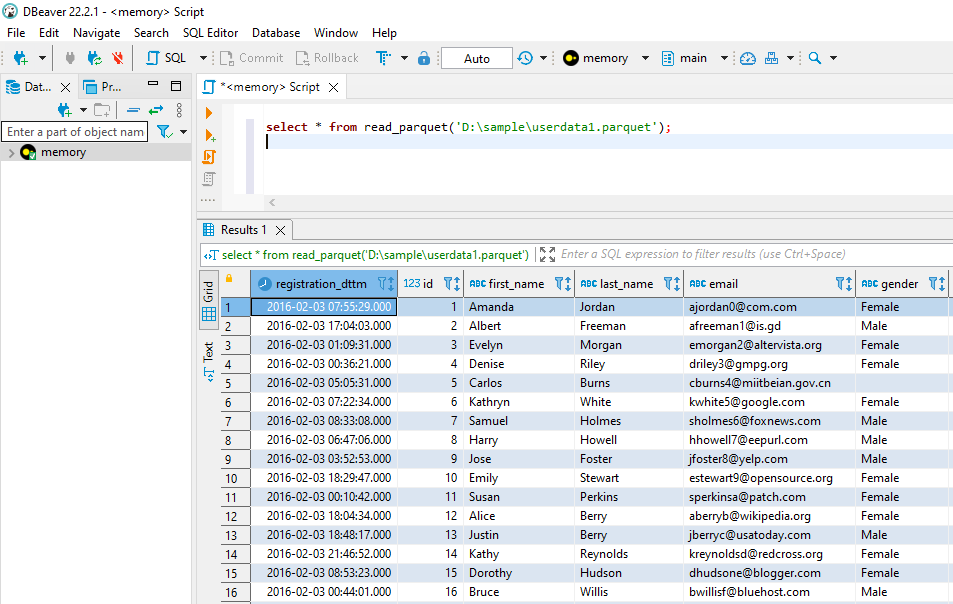

To view parquet file contents:

Are there other methods?

Possibly. But not many exist and they mostly aren't well documented. This is due to Parquet being a very complicated file format (I could not even find a formal definition). The ones I've listed are the only ones I'm aware of as I'm writing this response