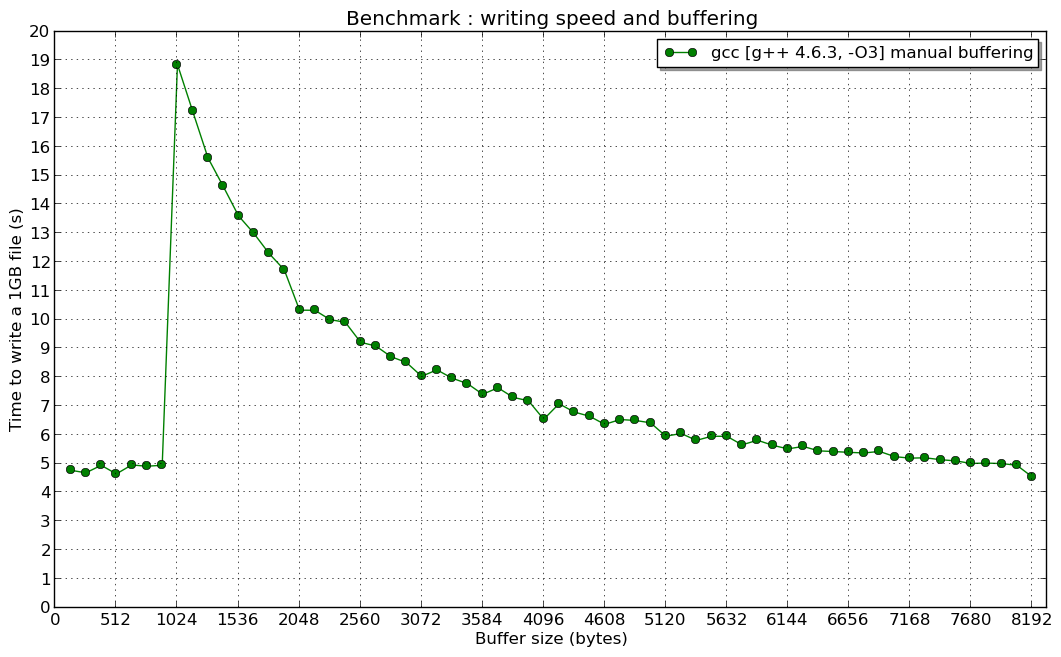

I would like to explain the cause of the peak in the second chart.

Virtual functions used by std::ofstream do cause a performance drop similar to the one in the first picture, but that does not explain why performance was highest when the manual buffer size was less than 1024 bytes.

The problem comes from the high cost of the writev() and write() system calls and from the internal implementation of std::filebuf, the stream buffer class used by std::ofstream.

To show how write() affects performance, I ran a simple test with the dd tool on my Linux machine, copying a 10 MB file with different buffer sizes (the bs option):

test@test$ time dd if=/dev/zero of=zero bs=256 count=40000

40000+0 records in

40000+0 records out

10240000 bytes (10 MB) copied, 2.36589 s, 4.3 MB/s

real 0m2.370s

user 0m0.000s

sys 0m0.952s

test@test$ time dd if=/dev/zero of=zero bs=512 count=20000

20000+0 records in

20000+0 records out

10240000 bytes (10 MB) copied, 1.31708 s, 7.8 MB/s

real 0m1.324s

user 0m0.000s

sys 0m0.476s

test@test$ time dd if=/dev/zero of=zero bs=1024 count=10000

10000+0 records in

10000+0 records out

10240000 bytes (10 MB) copied, 0.792634 s, 12.9 MB/s

real 0m0.798s

user 0m0.008s

sys 0m0.236s

test@test$ time dd if=/dev/zero of=zero bs=4096 count=2500

2500+0 records in

2500+0 records out

10240000 bytes (10 MB) copied, 0.274074 s, 37.4 MB/s

real 0m0.293s

user 0m0.000s

sys 0m0.064s

As you can see, the smaller the buffer, the lower the write speed and the more time dd spends in system space (the sys line). So the read/write speed decreases as the buffer size decreases.
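If you want to reproduce the dd experiment from C++, a minimal sketch could look like the one below. The function name write_in_blocks and the file path you pass in are my own illustration, not part of dd; it issues one POSIX write() per block and returns how many calls were made, which matches the "records out" figure in the dd output when no short writes occur.

```cpp
#include <cstddef>
#include <vector>
#include <fcntl.h>
#include <unistd.h>

// Writes `total` zero bytes to `path` in blocks of `bs` bytes, one write()
// call per block, roughly what `dd if=/dev/zero bs=... count=...` does.
// Returns the number of write() calls issued, or -1 on error.
// (Hypothetical helper for illustration only.)
long write_in_blocks(const char* path, std::size_t total, std::size_t bs) {
    if (bs == 0) return -1;
    int fd = open(path, O_WRONLY | O_CREAT | O_TRUNC, 0644);
    if (fd < 0) return -1;

    std::vector<char> block(bs, 0);
    long calls = 0;
    std::size_t left = total;
    while (left > 0) {
        std::size_t want = left < bs ? left : bs;
        ssize_t n = write(fd, block.data(), want);
        if (n <= 0) {            // error (or no progress): give up
            close(fd);
            return -1;
        }
        ++calls;
        left -= static_cast<std::size_t>(n);  // also copes with short writes
    }
    close(fd);
    return calls;
}
```

Timing a call such as write_in_blocks("zero", 10240000, 256) against the same call with 4096 (for example with std::chrono::steady_clock) should show the same trend as the dd runs, although the absolute numbers will depend on your machine.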

But why did the speed peak when the manual buffer size was less than 1024 bytes in the topic creator's manual buffer tests? And why was it almost constant there?

The explanation lies in the std::ofstream implementation, specifically in std::basic_filebuf.

By default it uses a 1024-byte buffer (the BUFSIZ macro). So when you write your data in pieces smaller than 1024 bytes, the writev() (not write()) system call is made at most once for every two ofstream::write() operations (with pieces of 1023 < 1024 bytes, the first is written to the buffer, and the second forces the first and the second to be written out together). From this we can conclude that ofstream::write() speed does not depend on the manual buffer size before the peak (the system call is made at least twice as rarely as ofstream::write() is called).

When you write 1024 bytes or more at once with a single ofstream::write() call, the writev() system call is made for every ofstream::write(). So the speed increases as the manual buffer grows beyond 1024 bytes (after the peak).
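To make the reasoning above concrete, here is a small model of the behaviour as described in this answer. It is not libstdc++ code: the name count_syscalls, the 1024-byte limit passed as a parameter, and the exact flush rule are assumptions taken from the description, so treat it as a sketch only.

```cpp
#include <cstddef>

// Models how many system calls result from `pieces` calls to
// ofstream::write(), each passing `piece` bytes, under the rule described
// in the text (an assumption, not the real library source):
//  - a piece of `limit` bytes or more always triggers one system call;
//  - a smaller piece is buffered if it fits, otherwise the buffered bytes
//    and the new piece go out together in one system call.
std::size_t count_syscalls(std::size_t piece, std::size_t pieces,
                           std::size_t limit = 1024) {
    std::size_t buffered = 0;
    std::size_t calls = 0;
    for (std::size_t i = 0; i < pieces; ++i) {
        if (piece >= limit || buffered + piece >= limit) {
            ++calls;        // buffer contents and this piece are written out
            buffered = 0;
        } else {
            buffered += piece;  // stays in the stream buffer for now
        }
    }
    if (buffered > 0) ++calls;  // final flush when the stream is closed
    return calls;
}
```

Under these assumptions count_syscalls(1023, 4) gives 2 while count_syscalls(1024, 4) gives 4: almost the same amount of data, but twice as many system calls.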

Moreover, if you set the std::ofstream buffer to something larger than 1024 bytes (for example, 8192 bytes) using streambuf::pubsetbuf() and call ostream::write() to write data in pieces of 1024 bytes, you may be surprised to find that the write speed is the same as with a 1024-byte buffer. This is because the implementation of std::basic_filebuf, the internal class of std::ofstream, is hard-coded to force a writev() system call for each ofstream::write() call when the passed buffer is greater than or equal to 1024 bytes (see the basic_filebuf::xsputn() source code). There is also an open issue in the GCC Bugzilla, reported on 2014-11-05.
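For anyone who wants to try this themselves, a sketch of the setup might look as follows. The helper name write_with_custom_buffer and its parameters are hypothetical; the only library calls are the standard rdbuf()->pubsetbuf(), open() and write(). I call pubsetbuf() before open(), which is the order that is generally expected to take effect.

```cpp
#include <cstddef>
#include <fstream>
#include <string>
#include <vector>

// Writes `pieces` chunks of `piece` bytes through an ofstream whose internal
// buffer was replaced by one of `stream_buf` bytes via pubsetbuf().
// Returns the number of bytes written, or 0 if the stream failed.
// (Hypothetical helper for illustration only.)
std::size_t write_with_custom_buffer(const std::string& path,
                                     std::size_t stream_buf,
                                     std::size_t piece,
                                     std::size_t pieces) {
    std::vector<char> internal(stream_buf);  // must outlive the stream
    std::vector<char> data(piece, 'x');

    std::ofstream out;
    out.rdbuf()->pubsetbuf(internal.data(),
                           static_cast<std::streamsize>(internal.size()));
    out.open(path, std::ios::binary | std::ios::trunc);

    std::size_t written = 0;
    for (std::size_t i = 0; i < pieces; ++i) {
        if (!out.write(data.data(), static_cast<std::streamsize>(piece)))
            break;
        written += piece;
    }
    out.close();
    return out ? written : 0;
}
```

Running a program built around write_with_custom_buffer(path, 8192, 1024, n) under strace and counting the write/writev lines is one way to check whether the larger stream buffer changes the number of system calls on your toolchain.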

So there are three possible ways to work around this problem:

- replace std::filebuf with your own class and redefine std::ofstream
- divide the buffer that has to be passed to ofstream::write() into pieces smaller than 1024 bytes and pass them to ofstream::write() one by one
- don't pass small pieces of data to ofstream::write(), to avoid losing performance on the virtual functions of std::ofstream

(and I don't explain the reason of the "resonance" for a 1kB manual buffer...)


g++-4.7 -Wall -Wextra -Winline -O3 -std=c++0x test.cpp -o test. I will come back with more complete results. – Rolph

std::streambuf that triple buffers async flushes, and found big improvements in my I/O bound code. I wonder how that would affect this question? – Kamerad