Here's the complete probability tree for these replacements.

Let's assume that you start with the sequence 123, and then we'll enumerate all the various ways to produce random results with the code in question.

123

+- 123 - swap 1 and 1 (these are positions,

| +- 213 - swap 2 and 1 not numbers)

| | +- 312 - swap 3 and 1

| | +- 231 - swap 3 and 2

| | +- 213 - swap 3 and 3

| +- 123 - swap 2 and 2

| | +- 321 - swap 3 and 1

| | +- 132 - swap 3 and 2

| | +- 123 - swap 3 and 3

| +- 132 - swap 2 and 3

| +- 231 - swap 3 and 1

| +- 123 - swap 3 and 2

| +- 132 - swap 3 and 3

+- 213 - swap 1 and 2

| +- 123 - swap 2 and 1

| | +- 321 - swap 3 and 1

| | +- 132 - swap 3 and 2

| | +- 123 - swap 3 and 3

| +- 213 - swap 2 and 2

| | +- 312 - swap 3 and 1

| | +- 231 - swap 3 and 2

| | +- 213 - swap 3 and 3

| +- 231 - swap 2 and 3

| +- 132 - swap 3 and 1

| +- 213 - swap 3 and 2

| +- 231 - swap 3 and 3

+- 321 - swap 1 and 3

+- 231 - swap 2 and 1

| +- 132 - swap 3 and 1

| +- 213 - swap 3 and 2

| +- 231 - swap 3 and 3

+- 321 - swap 2 and 2

| +- 123 - swap 3 and 1

| +- 312 - swap 3 and 2

| +- 321 - swap 3 and 3

+- 312 - swap 2 and 3

+- 213 - swap 3 and 1

+- 321 - swap 3 and 2

+- 312 - swap 3 and 3

Now, the fourth column of numbers, the one before the swap information, contains the final outcome, with 27 possible outcomes.

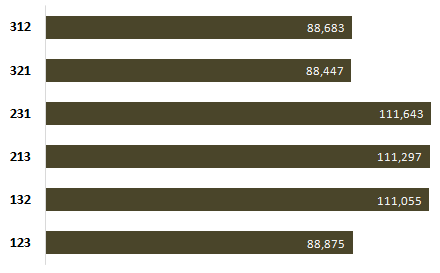

Let's count how many times each pattern occurs:

123 - 4 times

132 - 5 times

213 - 5 times

231 - 5 times

312 - 4 times

321 - 4 times

=============

27 times total

If you run the code that swaps at random for an infinite number of times, the patterns 132, 213 and 231 will occur more often than the patterns 123, 312, and 321, simply because the way the code swaps makes that more likely to occur.

Now, of course, you can say that if you run the code 30 times (27 + 3), you could end up with all the patterns occuring 5 times, but when dealing with statistics you have to look at the long term trend.

Here's C# code that explores the randomness for one of each possible pattern:

class Program

{

static void Main(string[] args)

{

Dictionary<String, Int32> occurances = new Dictionary<String, Int32>

{

{ "123", 0 },

{ "132", 0 },

{ "213", 0 },

{ "231", 0 },

{ "312", 0 },

{ "321", 0 }

};

Char[] digits = new[] { '1', '2', '3' };

Func<Char[], Int32, Int32, Char[]> swap = delegate(Char[] input, Int32 pos1, Int32 pos2)

{

Char[] result = new Char[] { input[0], input[1], input[2] };

Char temp = result[pos1];

result[pos1] = result[pos2];

result[pos2] = temp;

return result;

};

for (Int32 index1 = 0; index1 < 3; index1++)

{

Char[] level1 = swap(digits, 0, index1);

for (Int32 index2 = 0; index2 < 3; index2++)

{

Char[] level2 = swap(level1, 1, index2);

for (Int32 index3 = 0; index3 < 3; index3++)

{

Char[] level3 = swap(level2, 2, index3);

String output = new String(level3);

occurances[output]++;

}

}

}

foreach (var kvp in occurances)

{

Console.Out.WriteLine(kvp.Key + ": " + kvp.Value);

}

}

}

This outputs:

123: 4

132: 5

213: 5

231: 5

312: 4

321: 4

So while this answer does in fact count, it's not a purely mathematical answer, you just have to evaluate all possible ways the random function can go, and look at the final outputs.