If the conversion is the bottle neck (which is quite possible),

you should start by using the different possiblities in the

standard. Logically, one would expect them to be very close,

but practically, they aren't always:

You've already determined that std::ifstream is too slow.

Converting your memory mapped data to an std::istringstream

is almost certainly not a good solution; you'll first have to

create a string, which will copy all of the data.

Writing your own streambuf to read directly from the memory,

without copying (or using the deprecated std::istrstream)

might be a solution, although if the problem really is the

conversion... this still uses the same conversion routines.

You can always try fscanf, or scanf on your memory mapped

stream. Depending on the implementation, they might be faster

than the various istream implementations.

Probably faster than any of these is to use strtod. No need

to tokenize for this: strtod skips leading white space

(including '\n'), and has an out parameter where it puts the

address of the first character not read. The end condition is

a bit tricky, your loop should probably look a bit like:

char* begin; // Set to point to the mmap'ed data...

// You'll also have to arrange for a '\0'

// to follow the data. This is probably

// the most difficult issue.

char* end;

errno = 0;

double tmp = strtod( begin, &end );

while ( errno == 0 && end != begin ) {

// do whatever with tmp...

begin = end;

tmp = strtod( begin, &end );

}

If none of these are fast enough, you'll have to consider the

actual data. It probably has some sort of additional

constraints, which means that you can potentially write

a conversion routine which is faster than the more general ones;

e.g. strtod has to handle both fixed and scientific, and it

has to be 100% accurate even if there are 17 significant digits.

It also has to be locale specific. All of this is added

complexity, which means added code to execute. But beware:

writing an efficient and correct conversion routine, even for

a restricted set of input, is non-trivial; you really do have to

know what you are doing.

EDIT:

Just out of curiosity, I've run some tests. In addition to the

afore mentioned solutions, I wrote a simple custom converter,

which only handles fixed point (no scientific), with at most

five digits after the decimal, and the value before the decimal

must fit in an int:

double

convert( char const* source, char const** endPtr )

{

char* end;

int left = strtol( source, &end, 10 );

double results = left;

if ( *end == '.' ) {

char* start = end + 1;

int right = strtol( start, &end, 10 );

static double const fracMult[]

= { 0.0, 0.1, 0.01, 0.001, 0.0001, 0.00001 };

results += right * fracMult[ end - start ];

}

if ( endPtr != nullptr ) {

*endPtr = end;

}

return results;

}

(If you actually use this, you should definitely add some error

handling. This was just knocked up quickly for experimental

purposes, to read the test file I'd generated, and nothing

else.)

The interface is exactly that of strtod, to simplify coding.

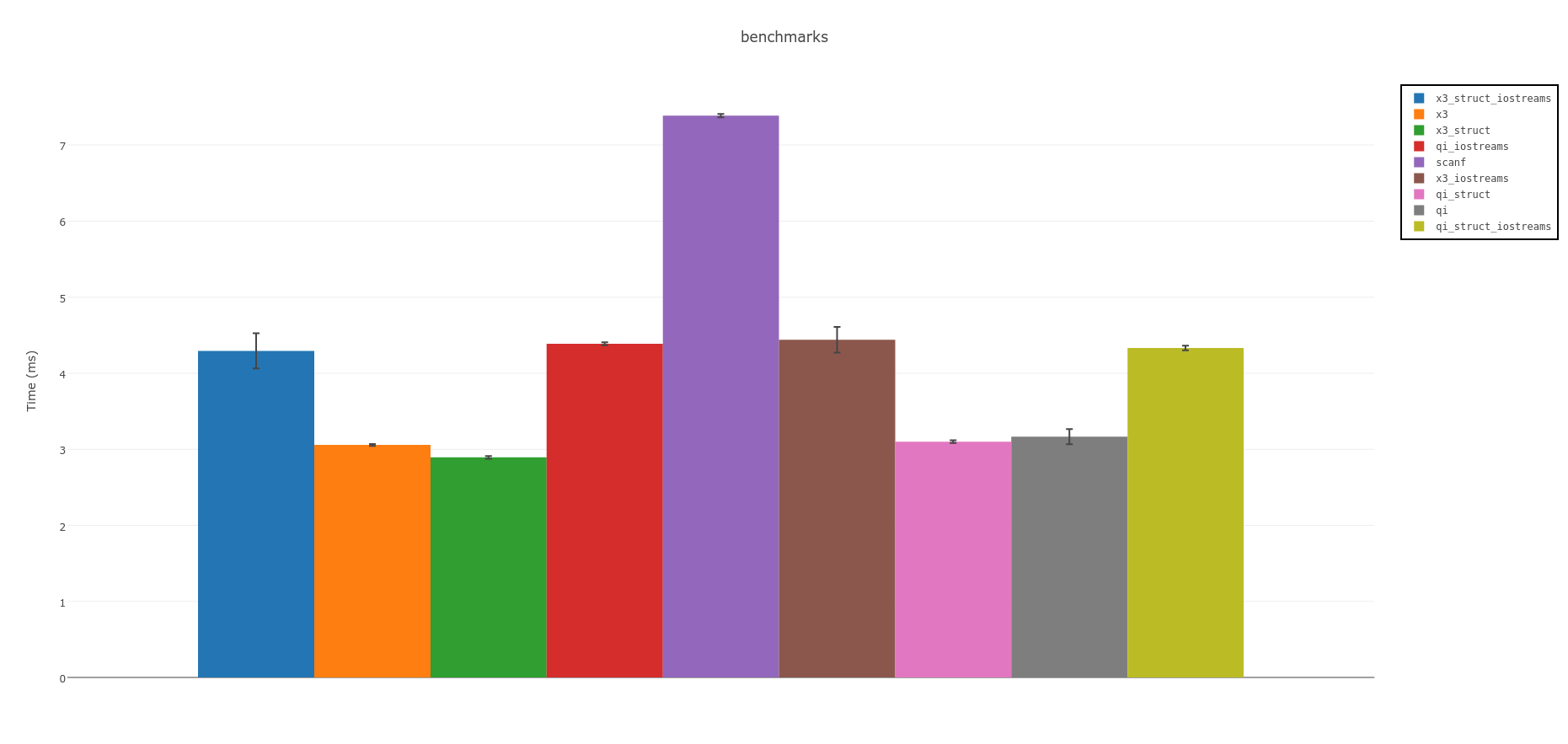

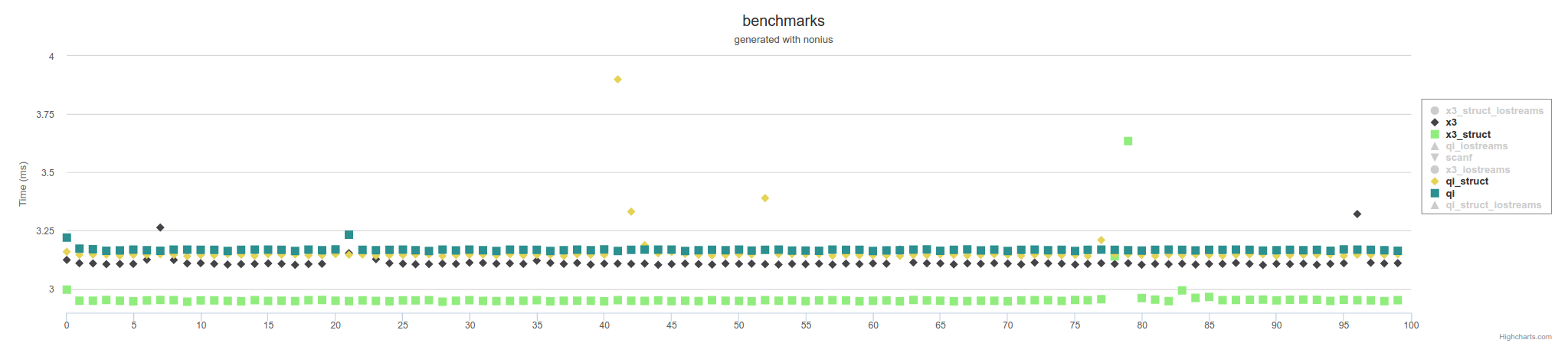

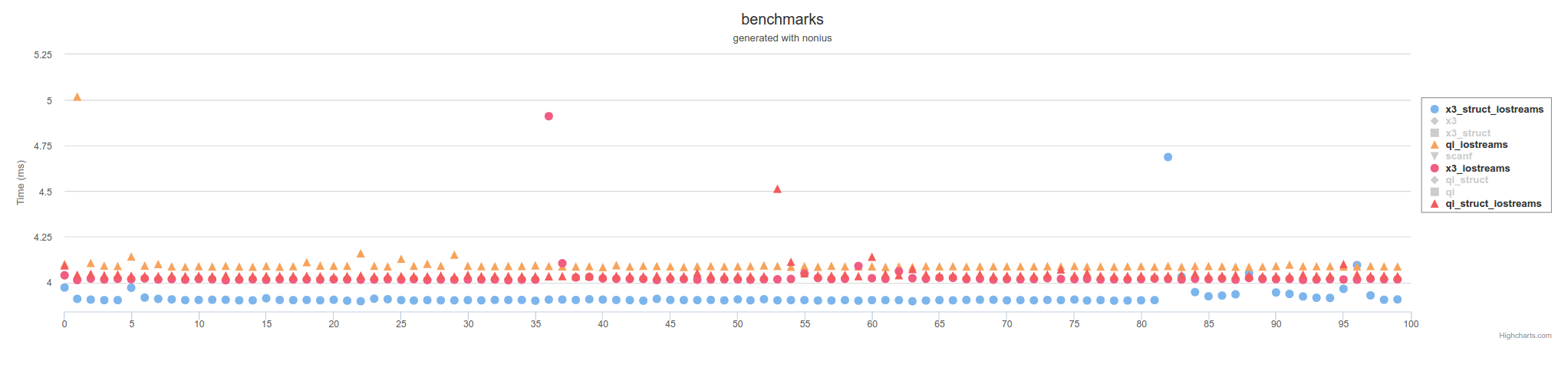

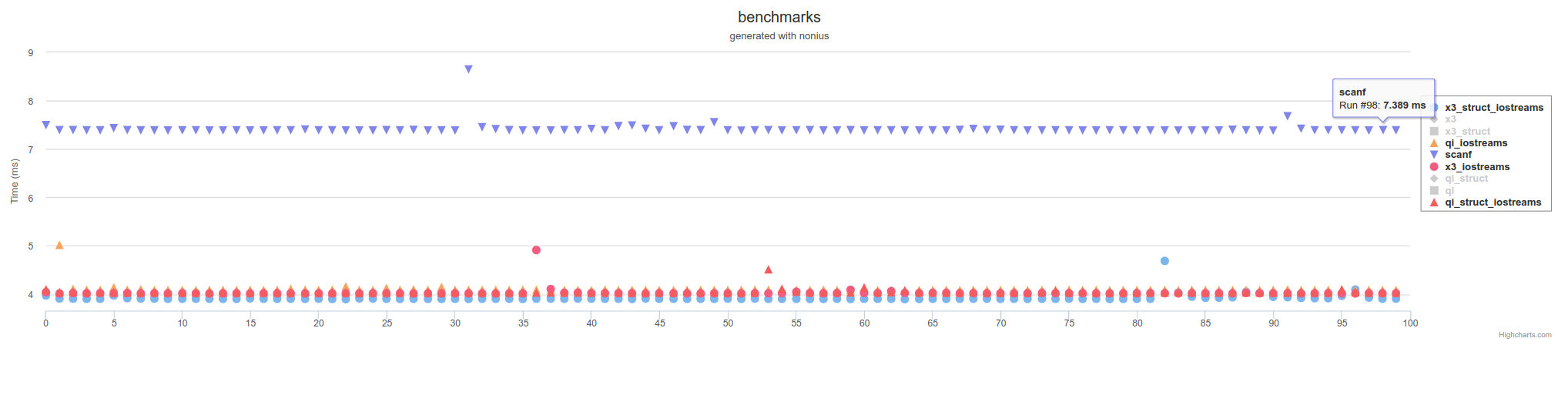

I ran the benchmarks in two environments (on different machines,

so the absolute values of any times aren't relevant). I got the

following results:

Under Windows 7, compiled with VC 11 (/O2):

Testing Using fstream directly (5 iterations)...

6.3528e+006 microseconds per iteration

Testing Using fscan directly (5 iterations)...

685800 microseconds per iteration

Testing Using strtod (5 iterations)...

597000 microseconds per iteration

Testing Using manual (5 iterations)...

269600 microseconds per iteration

Under Linux 2.6.18, compiled with g++ 4.4.2 (-O2, IIRC):

Testing Using fstream directly (5 iterations)...

784000 microseconds per iteration

Testing Using fscanf directly (5 iterations)...

526000 microseconds per iteration

Testing Using strtod (5 iterations)...

382000 microseconds per iteration

Testing Using strtof (5 iterations)...

360000 microseconds per iteration

Testing Using manual (5 iterations)...

186000 microseconds per iteration

In all cases, I'm reading 554000 lines, each with 3 randomly

generated floating point in the range [0...10000).

The most striking thing is the enormous difference between

fstream and fscan under Windows (and the relatively small

difference between fscan and strtod). The second thing is

just how much the simple custom conversion function gains, on

both platforms. The necessary error handling would slow it down

a little, but the difference is still significant. I expected

some improvement, since it doesn't handle a lot of things the

the standard conversion routines do (like scientific format,

very, very small numbers, Inf and NaN, i18n, etc.), but not this

much.

fscanf? – Pythonessstd::istream/spirit) with the C (scanf). (I don't see an obvious reason why C++-based solutions would be slower; but that is my prejudice) – Rothkocin(and the synchronization ofcin), but this question does not involvecinnor involves flushing the stream (doctorlove pointed thread). The best explanation I read so far (on whyfscanfandstrtodare faster) is a comment by JamesKanze in JeffFoster answer: "...both are locale sensitive---the main reason fscanf and >> have such different performance is because the C++ locale is much more awkward to use efficiently." – Rothkocrack_atof: https://mcmap.net/q/242734/-how-to-parse-space-separated-floats-in-c-quickly new record 1.327s for 11,000,000 lines on old Core i7 2600. See below. – Schoolfellow