First of all, as written by @bmu, you should use combinations of vectorized calculations, ufuncs and indexing. There are indeed some cases where explicit looping is required, but those are really rare.

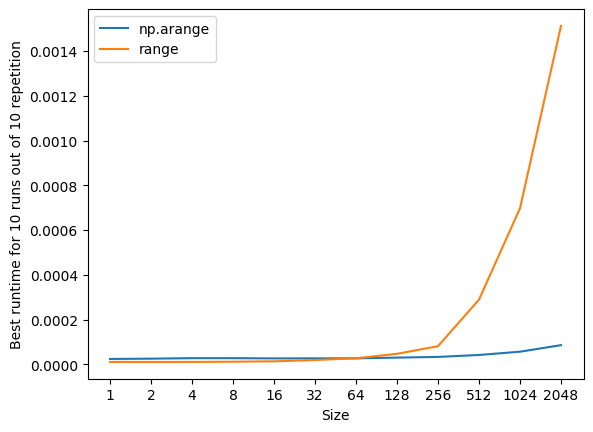

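If an explicit loop is needed, with Python 2.6 and 2.7 you should use xrange (see below). From what you say, in Python 3 range behaves like xrange: it returns a lazy range object that produces values on demand instead of building a list (strictly speaking it is a sequence, not a generator). So range is probably just as good for you.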
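
To make the Python 3 behaviour concrete, here is a minimal sketch (assuming Python 3 on CPython; the variable names are only for illustration) showing that range is lazy but still behaves like a sequence:

```python
import sys

r = range(1000000)

# A range object only stores start, stop and step, so (on CPython)
# its memory footprint does not depend on how many values it covers.
small = sys.getsizeof(range(10))
large = sys.getsizeof(r)

# Unlike a generator, a range supports len(), indexing and membership tests.
length = len(r)
last = r[-1]
contains = 999999 in r

# It can also be iterated more than once without being exhausted.
first_pass = sum(1 for _ in range(5))
short = range(5)
second_pass = [list(short), list(short)]

print(small, large, length, last, contains)
```

So in Python 3 a plain `for i in range(n)` does not allocate a list of n integers, which is the property that made xrange preferable in Python 2.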

Now, you should try it yourself using timeit (here via the IPython "magic function" %timeit):

    %timeit for i in range(1000000): pass
    [out] 10 loops, best of 3: 63.6 ms per loop

    %timeit for i in np.arange(1000000): pass
    [out] 10 loops, best of 3: 158 ms per loop

    %timeit for i in xrange(1000000): pass
    [out] 10 loops, best of 3: 23.4 ms per loop
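
If you are not in IPython, a rough equivalent can be written with the standard timeit module. This is only a sketch, assuming Python 3 (so there is no xrange case) and numpy installed; the helper name `best_ms` and the repeat counts are my own choices, and the numbers you get will depend on your machine and versions:

```python
import timeit

import numpy as np

N = 1000000


def loop_range():
    # Plain Python loop over a lazy range object.
    for i in range(N):
        pass


def loop_arange():
    # Same loop, but iterating over a numpy array element by element.
    for i in np.arange(N):
        pass


def best_ms(func, repeat=3, number=3):
    # Run `func` `number` times per measurement, take the best of
    # `repeat` measurements, and return milliseconds per call.
    times = timeit.repeat(func, repeat=repeat, number=number)
    return min(times) / number * 1000.0


results = {
    "range": best_ms(loop_range),
    "np.arange": best_ms(loop_arange),
}

for name, ms in results.items():
    print("%-10s %.1f ms per loop" % (name, ms))
```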

Again, as mentioned above, most of the time it is possible to use numpy vector/array formulas (or ufuncs, etc.), which run at C speed: much faster. This is what we could call "vector programming". It makes programs easier to write than in C (and more readable), yet almost as fast in the end.
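
As an illustration of what "vector programming" means here, this is a small sketch with a made-up task (a sum of squares plus a boolean-indexing selection; the function names are hypothetical and not from the question). Both functions compute the same value, but the second one leaves the loop to numpy:

```python
import numpy as np

N = 100000
x = np.arange(N, dtype=np.float64)


def loop_sum_of_squares(values):
    # Explicit Python loop: every element goes through the interpreter.
    total = 0.0
    for v in values:
        total += v * v
    return total


def vectorized_sum_of_squares(values):
    # Element-wise multiply followed by a reduction; both steps
    # run inside numpy's compiled code.
    return np.sum(values * values)


# Boolean indexing replaces an "if" inside a loop:
# keep only the elements with an even value.
evens = x[x % 2 == 0]

print(loop_sum_of_squares(x), vectorized_sum_of_squares(x), evens.size)
```

Timing the two functions with %timeit, as above, is an easy way to see the difference on your own machine.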