This is my dataframe:

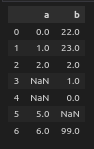

             date                                                ids
    0  2011-04-23  [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13,...
    1  2011-04-24  [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13,...
    2  2011-04-25  [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13,...
    3  2011-04-26                                                NaN
    4  2011-04-27  [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13,...
    5  2011-04-28  [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13,...

I want to replace the `NaN` in the `ids` column with an empty list, `[]`. How can I do that? `.fillna([])` did not work. I also tried `replace(np.nan, [])`, but it raises an error:

    TypeError('Invalid "to_replace" type: \'float\'',)
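For anyone who wants to reproduce this, here is a minimal sketch of the setup. The short lists and the four-row frame are made-up stand-ins for my real data (the lists above are truncated in the printout). The last statement is one approach that appears to do what I want, by going through `Series.apply` instead of `fillna`; I am not claiming it is the recommended way.

```python
import numpy as np
import pandas as pd

# Hypothetical stand-in for the real frame: short lists instead of the long ones.
df = pd.DataFrame({
    "date": pd.to_datetime(["2011-04-23", "2011-04-24", "2011-04-25", "2011-04-26"]),
    "ids": [[0, 1, 2], [0, 1, 2], [0, 1, 2], np.nan],
})

# Keep existing lists untouched; give every non-list entry (here, NaN)
# its own fresh empty list so the rows do not share one list object.
df["ids"] = df["ids"].apply(lambda v: v if isinstance(v, list) else [])

print(df)
```

Note that the `isinstance` check assumes every valid entry is a Python `list`; any other type would also be replaced by `[]`.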

- … `ids`? – Mendes
- `df.ix[df['ids'].isnull(), 'ids'] = set()` … `set` work? – Elnora
- … (`RecursionError`) using: `df.ids.where(df.ids.isnull(), [[]])`. – Leonteen