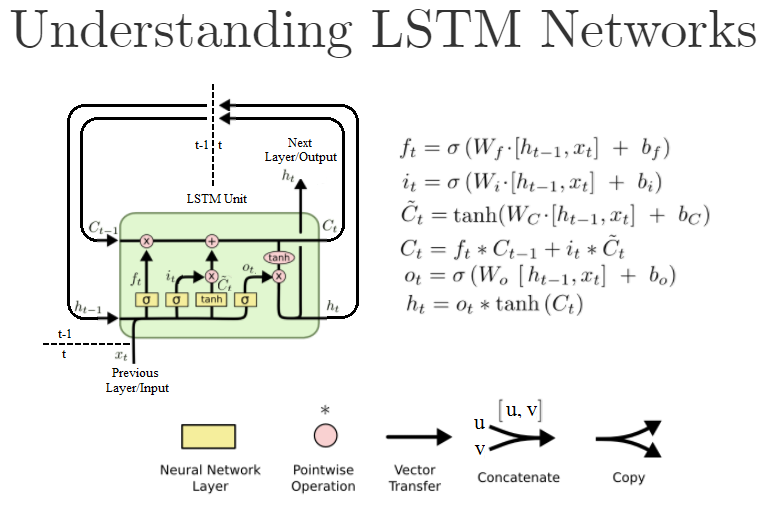

The first argument of a normal Dense layer is also units, and it is the number of neurons/nodes in that layer. A standard LSTM unit, however, looks like the following:

(This is a reworked version of "Understanding LSTM Networks")

In Keras, when I create an LSTM object like this: LSTM(units=N, ...), am I actually creating N of these LSTM units? Or is N the size of the "neural network" layers inside the LSTM unit, i.e., the dimensions of the W's in the formulas? Or is it something else?
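One way to make the second reading concrete is to count parameters. The sketch below assumes the standard LSTM equations (four gate-like transforms, each with an input matrix, a recurrent matrix, and a bias) and that units is the width of the hidden state. The helpers `lstm_param_shapes` and `count_params` are hypothetical names for illustration, not Keras functions.

```python
def lstm_param_shapes(units, input_dim):
    """Weight shapes of one LSTM layer under the standard equations.

    Hypothetical helper for illustration; not part of Keras.
    Assumes `units` is the size of the hidden state h[t].
    """
    gates = 4  # input gate, forget gate, cell candidate, output gate
    return {
        "W (input -> gates)": (input_dim, gates * units),
        "U (hidden -> gates)": (units, gates * units),
        "b (bias)": (gates * units,),
    }


def count_params(shapes):
    """Total number of scalars across all weight arrays."""
    total = 0
    for shape in shapes.values():
        size = 1
        for dim in shape:
            size *= dim
        total += size
    return total


shapes = lstm_param_shapes(units=32, input_dim=1)
for name, shape in shapes.items():
    print(name, shape)
print("total parameters:", count_params(shapes))  # 4352
```

If the parameter count that `model.summary()` reports for an LSTM(units=32) layer fed one feature per time step matches this number, that would support reading units as the size of the W's rather than as a number of time steps.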

For context, I'm working based on this example code.

The following is the documentation: https://keras.io/layers/recurrent/

It says:

> units: Positive integer, dimensionality of the output space.

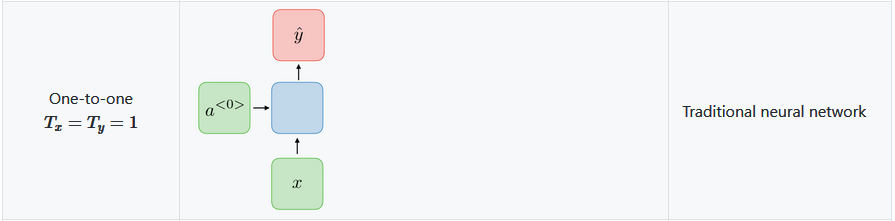

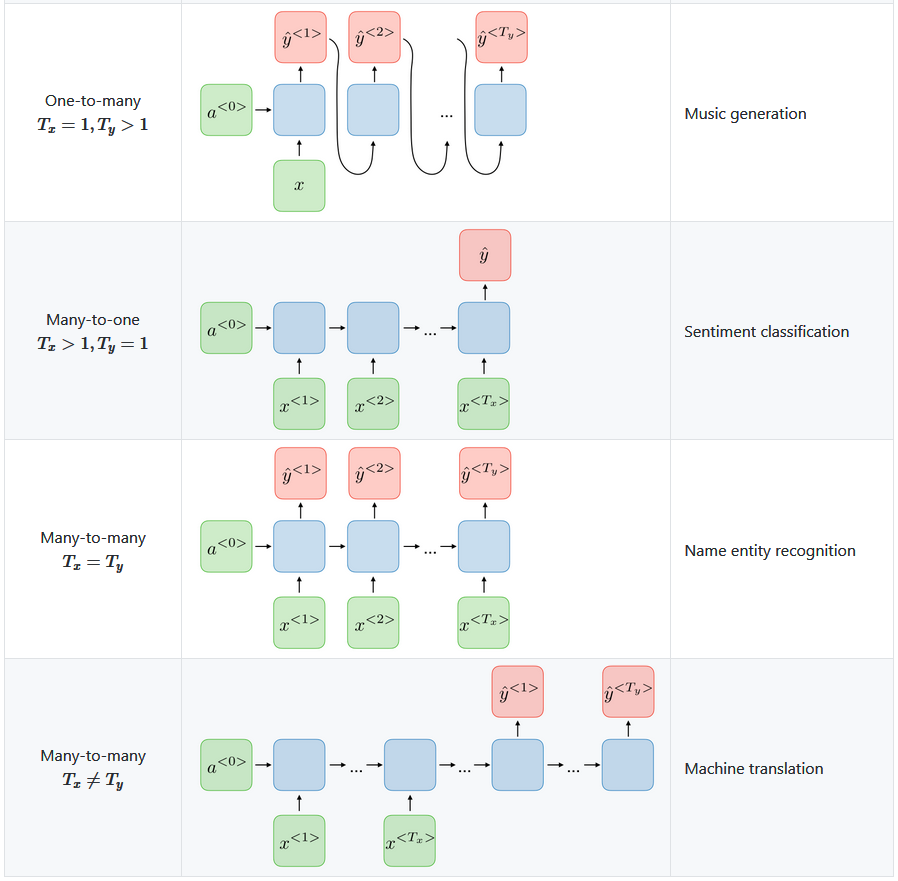

This makes me think it is the number of outputs from the Keras LSTM "layer" object, meaning the next layer will have N inputs. Does that mean there actually exist N of these LSTM units in the LSTM layer, or that exactly one LSTM unit is run for N iterations, outputting N of these h[t] values, from, say, h[t-N] up to h[t]?

If it only defines the number of outputs, does that mean the input can still be, say, just one value, or do we have to manually create lagged input variables x[t-N] to x[t], one for each LSTM unit defined by the units=N argument?

As I'm writing this, it occurs to me what the argument return_sequences might do. If it is set to True, all N outputs are passed forward to the next layer, while if it is set to False, only the last h[t] output is passed to the next layer. Am I right?
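A minimal NumPy sketch of the two behaviours, assuming the standard LSTM equations and assuming that units is the size of h[t]. This is not Keras's implementation; `run_lstm` and its arguments are hypothetical names. Under these assumptions the number of outputs along the sequence comes from the number of time steps in the input, while units only sets the width of each h[t].

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def run_lstm(x_seq, W, U, b, return_sequences=False):
    """Run one LSTM layer over x_seq of shape (timesteps, input_dim).

    Hypothetical helper, not the Keras implementation.
    Returns every h[t] with shape (timesteps, units) if return_sequences
    is True, otherwise only the last h[t] with shape (units,).
    """
    units = U.shape[0]
    h = np.zeros(units)  # hidden state h[t]
    c = np.zeros(units)  # cell state c[t]
    outputs = []
    for x_t in x_seq:
        z = x_t @ W + h @ U + b      # all four transforms at once: (4 * units,)
        i, f, g, o = np.split(z, 4)  # input gate, forget gate, candidate, output gate
        i, f, o = sigmoid(i), sigmoid(f), sigmoid(o)
        g = np.tanh(g)
        c = f * c + i * g
        h = o * np.tanh(c)
        outputs.append(h)
    return np.stack(outputs) if return_sequences else h


rng = np.random.default_rng(0)
timesteps, input_dim, units = 5, 1, 3  # one input feature, three units
W = rng.normal(size=(input_dim, 4 * units))
U = rng.normal(size=(units, 4 * units))
b = np.zeros(4 * units)
x_seq = rng.normal(size=(timesteps, input_dim))

all_h = run_lstm(x_seq, W, U, b, return_sequences=True)
last_h = run_lstm(x_seq, W, U, b, return_sequences=False)
print(all_h.shape)   # (5, 3): one h[t] per time step, each of size units
print(last_h.shape)  # (3,): only the final h[t]
```

Here the input has a single feature per time step, and no lagged copies of x are built by hand; the loop over x_seq supplies the history through h and c.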