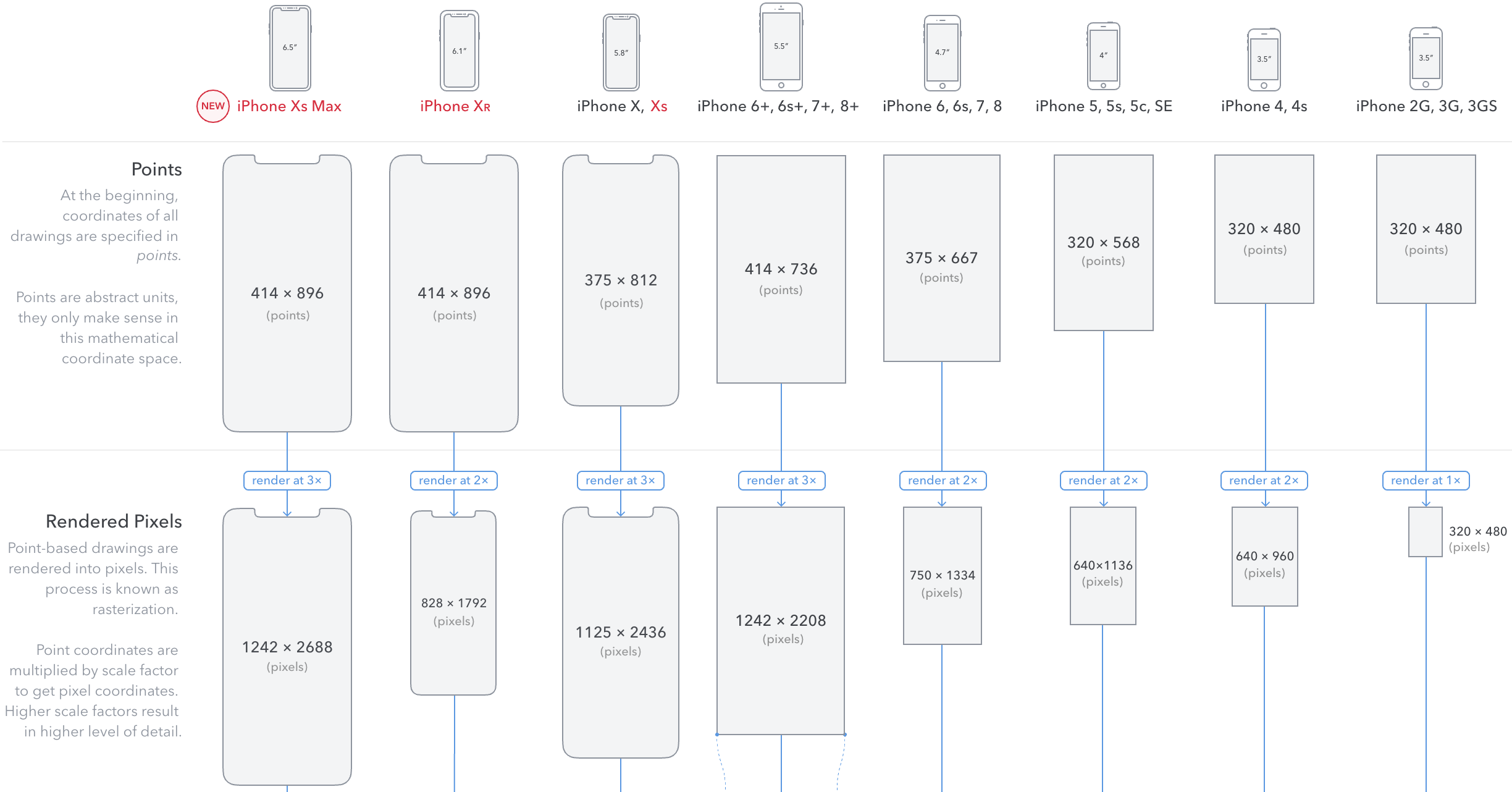

A pixel on iOS is the full resolution of the device, which means if I have an image that is 100x100 pixels in length, then the phone will render it 100x100 pixels on a standard non-retina device. However, because newer iPhones have a quadrupled pixel density, that same image will render at 100x100 pixels, but look half that size. The iOS engineers solved this a long time ago (way back in OS X with Quartz) when they introduced Core Graphics' point system. A point is a standard length equivalent to 1x1 pixels on a non-retina device, and 2x2 pixels on a retina device. That way, your 100x100 image will render twice the size on a retina device and basically normalize what the user sees.

It also provides a standard system of measurement on iOS devices because no matter how the pixel density changes, there have always been 320x480 points on an iPhone screen and 768x1024 points on an iPad screen.*

But at the same time, you can basically disregard the documentation considering that retina devices were introduced with iOS 4 at a minimum, and I don't know of too many people still running iOS 3 on a newer iPhone. But if such a case arises, your UIImage would need to be rendered at exactly twice its dimensions in pixels on a retina iPhone to make up for the pixel density difference.

*Starting with the iPhone 5, the iPhone's dimensions are now no longer standardized. Please use the appropriate APIs to retrieve the screen's dimensions or use layout constraints.