Is a switch statement actually faster than an if statement?

I ran the code below on Visual Studio 2010's x64 C++ compiler with the /Ox flag:

#include <stdlib.h>

#include <stdio.h>

#include <time.h>

#define MAX_COUNT (1 << 29)

size_t counter = 0;

size_t testSwitch()

{

clock_t start = clock();

size_t i;

for (i = 0; i < MAX_COUNT; i++)

{

switch (counter % 4 + 1)

{

case 1: counter += 4; break;

case 2: counter += 3; break;

case 3: counter += 2; break;

case 4: counter += 1; break;

}

}

return 1000 * (clock() - start) / CLOCKS_PER_SEC;

}

size_t testIf()

{

clock_t start = clock();

size_t i;

for (i = 0; i < MAX_COUNT; i++)

{

const size_t c = counter % 4 + 1;

if (c == 1) { counter += 4; }

else if (c == 2) { counter += 3; }

else if (c == 3) { counter += 2; }

else if (c == 4) { counter += 1; }

}

return 1000 * (clock() - start) / CLOCKS_PER_SEC;

}

int main()

{

printf("Starting...\n");

printf("Switch statement: %u ms\n", testSwitch());

printf("If statement: %u ms\n", testIf());

}

and got these results:

Switch statement: 5261 ms

If statement: 5196 ms

From what I've learned, switch statements apparently use jump tables to optimize the branching.

Questions:

What would a basic jump table look like, in x86 or x64?

Is this code using a jump table?

Why is there no performance difference in this example? Is there any situation in which there is a significant performance difference?

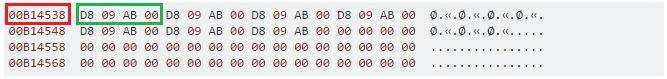

Disassembly of the code:

testIf:

13FE81B10 sub rsp,48h

13FE81B14 call qword ptr [__imp_clock (13FE81128h)]

13FE81B1A mov dword ptr [start],eax

13FE81B1E mov qword ptr [i],0

13FE81B27 jmp testIf+26h (13FE81B36h)

13FE81B29 mov rax,qword ptr [i]

13FE81B2E inc rax

13FE81B31 mov qword ptr [i],rax

13FE81B36 cmp qword ptr [i],20000000h

13FE81B3F jae testIf+0C3h (13FE81BD3h)

13FE81B45 xor edx,edx

13FE81B47 mov rax,qword ptr [counter (13FE835D0h)]

13FE81B4E mov ecx,4

13FE81B53 div rax,rcx

13FE81B56 mov rax,rdx

13FE81B59 inc rax

13FE81B5C mov qword ptr [c],rax

13FE81B61 cmp qword ptr [c],1

13FE81B67 jne testIf+6Dh (13FE81B7Dh)

13FE81B69 mov rax,qword ptr [counter (13FE835D0h)]

13FE81B70 add rax,4

13FE81B74 mov qword ptr [counter (13FE835D0h)],rax

13FE81B7B jmp testIf+0BEh (13FE81BCEh)

13FE81B7D cmp qword ptr [c],2

13FE81B83 jne testIf+89h (13FE81B99h)

13FE81B85 mov rax,qword ptr [counter (13FE835D0h)]

13FE81B8C add rax,3

13FE81B90 mov qword ptr [counter (13FE835D0h)],rax

13FE81B97 jmp testIf+0BEh (13FE81BCEh)

13FE81B99 cmp qword ptr [c],3

13FE81B9F jne testIf+0A5h (13FE81BB5h)

13FE81BA1 mov rax,qword ptr [counter (13FE835D0h)]

13FE81BA8 add rax,2

13FE81BAC mov qword ptr [counter (13FE835D0h)],rax

13FE81BB3 jmp testIf+0BEh (13FE81BCEh)

13FE81BB5 cmp qword ptr [c],4

13FE81BBB jne testIf+0BEh (13FE81BCEh)

13FE81BBD mov rax,qword ptr [counter (13FE835D0h)]

13FE81BC4 inc rax

13FE81BC7 mov qword ptr [counter (13FE835D0h)],rax

13FE81BCE jmp testIf+19h (13FE81B29h)

13FE81BD3 call qword ptr [__imp_clock (13FE81128h)]

13FE81BD9 sub eax,dword ptr [start]

13FE81BDD imul eax,eax,3E8h

13FE81BE3 cdq

13FE81BE4 mov ecx,3E8h

13FE81BE9 idiv eax,ecx

13FE81BEB cdqe

13FE81BED add rsp,48h

13FE81BF1 ret

testSwitch:

13FE81C00 sub rsp,48h

13FE81C04 call qword ptr [__imp_clock (13FE81128h)]

13FE81C0A mov dword ptr [start],eax

13FE81C0E mov qword ptr [i],0

13FE81C17 jmp testSwitch+26h (13FE81C26h)

13FE81C19 mov rax,qword ptr [i]

13FE81C1E inc rax

13FE81C21 mov qword ptr [i],rax

13FE81C26 cmp qword ptr [i],20000000h

13FE81C2F jae testSwitch+0C5h (13FE81CC5h)

13FE81C35 xor edx,edx

13FE81C37 mov rax,qword ptr [counter (13FE835D0h)]

13FE81C3E mov ecx,4

13FE81C43 div rax,rcx

13FE81C46 mov rax,rdx

13FE81C49 inc rax

13FE81C4C mov qword ptr [rsp+30h],rax

13FE81C51 cmp qword ptr [rsp+30h],1

13FE81C57 je testSwitch+73h (13FE81C73h)

13FE81C59 cmp qword ptr [rsp+30h],2

13FE81C5F je testSwitch+87h (13FE81C87h)

13FE81C61 cmp qword ptr [rsp+30h],3

13FE81C67 je testSwitch+9Bh (13FE81C9Bh)

13FE81C69 cmp qword ptr [rsp+30h],4

13FE81C6F je testSwitch+0AFh (13FE81CAFh)

13FE81C71 jmp testSwitch+0C0h (13FE81CC0h)

13FE81C73 mov rax,qword ptr [counter (13FE835D0h)]

13FE81C7A add rax,4

13FE81C7E mov qword ptr [counter (13FE835D0h)],rax

13FE81C85 jmp testSwitch+0C0h (13FE81CC0h)

13FE81C87 mov rax,qword ptr [counter (13FE835D0h)]

13FE81C8E add rax,3

13FE81C92 mov qword ptr [counter (13FE835D0h)],rax

13FE81C99 jmp testSwitch+0C0h (13FE81CC0h)

13FE81C9B mov rax,qword ptr [counter (13FE835D0h)]

13FE81CA2 add rax,2

13FE81CA6 mov qword ptr [counter (13FE835D0h)],rax

13FE81CAD jmp testSwitch+0C0h (13FE81CC0h)

13FE81CAF mov rax,qword ptr [counter (13FE835D0h)]

13FE81CB6 inc rax

13FE81CB9 mov qword ptr [counter (13FE835D0h)],rax

13FE81CC0 jmp testSwitch+19h (13FE81C19h)

13FE81CC5 call qword ptr [__imp_clock (13FE81128h)]

13FE81CCB sub eax,dword ptr [start]

13FE81CCF imul eax,eax,3E8h

13FE81CD5 cdq

13FE81CD6 mov ecx,3E8h

13FE81CDB idiv eax,ecx

13FE81CDD cdqe

13FE81CDF add rsp,48h

13FE81CE3 ret

Update:

Interesting results here. Not sure why one is faster and one is slower, though.

ifcase. – Dethroneswitchbeats that ofifdramatically. It seems like the lack of a "default" case really affects this. – Jaineswitchbecause it (at least on my compiler) does a single range comparison, skips over the jump table if out of range. while theifalways does the comparisons in order. – Dethronecharof space for the offset)) – Ihram5196 vs. 5261 shouldn't be enough to actually care--> I'm not sure if you misunderstood the question or if I misunderstand your comment, but isn't the whole point of my question to ask why there isn't a difference? (Did I ever claim that's a significant difference to care about?) – Jaineswitchversusif. I've run across that problem tons of times (e.g. for a lexer I'm making for D, if you really need a specific specific example) and I'm trying to see why I would or wouldn't see a performance difference. Of course, the example is more general because the point isn't to make a lexer (that was just one situation, out of many... another included a matrix multiply optimization I had, etc.). Just because it's hard to imagine it being practical doesn't mean it isn't! – Jaine-O3(godbolt.org/g/ovVJHU) notice that they could implement this ascounter += 5 - (counter%4), i.e.counter += 5 - (counter&3). Also, that once we reach thecounter += 4;case, it always repeats so you can just docounter += 4 * i; break;. – Jemappes