The C11 Standard's ruling is as follows.

5.1.2.4 Multi-threaded executions and data races

An evaluation A is dependency-ordered before 16) an evaluation B if:

— A performs a release operation on an atomic object M, and, in another thread, B performs a consume operation on M and reads a value written by any side effect in the release sequence headed by A, or

— for some evaluation X, A is dependency-ordered before X and X carries a dependency to B.

An evaluation A inter-thread happens before an evaluation B if A synchronizes with B, A is dependency-ordered before B, or, for some evaluation X:

— A synchronizes with X and X is sequenced before B,

— A is sequenced before X and X inter-thread happens before B, or

— A inter-thread happens before X and X inter-thread happens before B.

NOTE 7 The ‘‘inter-thread happens before’’ relation describes arbitrary concatenations of ‘‘sequenced before’’, ‘‘synchronizes with’’, and ‘‘dependency-ordered before’’ relationships, with two exceptions. The first exception is that a concatenation is not permitted to end with ‘‘dependency-ordered before’’ followed by ‘‘sequenced before’’. The reason for this limitation is that a consume operation participating in a ‘‘dependency-ordered before’’ relationship provides ordering only with respect to operations to which this consume operation actually carries a dependency. The reason that this limitation applies only to the end of such a concatenation is that any subsequent release operation will provide the required ordering for a prior consume operation. The second exception is that a concatenation is not permitted to consist entirely of ‘‘sequenced before’’. The reasons for this limitation are (1) to permit ‘‘inter-thread happens before’’ to be transitively closed and (2) the ‘‘happens before’’ relation, defined below, provides for relationships consisting entirely of ‘‘sequenced before’’.

An evaluation A happens before an evaluation B if A is sequenced before B or A inter-thread happens before B.

A visible side effect A on an object M with respect to a value computation B of M satisfies the conditions:

— A happens before B, and

— there is no other side effect X to M such that A happens before X and X happens before B.

The value of a non-atomic scalar object M, as determined by evaluation B, shall be the value stored by the visible side effect A.

(emphasis added)

In the commentary below, I'll abbreviate below as follows:

- Dependency-ordered before: DOB

- Inter-thread happens before: ITHB

- Happens before: HB

- Sequenced before: SeqB

Let us review how this applies. We have 4 relevant memory operations, which we will name Evaluations A, B, C and D:

Thread 1:

y.store (20); // Release; Evaluation A

x.store (10); // Release; Evaluation B

Thread 2:

if (x.load() == 10) { // Consume; Evaluation C

assert (y.load() == 20) // Consume; Evaluation D

y.store (10)

}

To prove the assert never trips, we in effect seek to prove that A is always a visible side-effect at D. In accordance with 5.1.2.4 (15), we have:

A SeqB B DOB C SeqB D

which is a concatenation ending in DOB followed by SeqB. This is explicitly ruled by (17) to not be an ITHB concatenation, despite what (16) says.

We know that since A and D are not in the same thread of execution, A is not SeqB D; Hence neither of the two conditions in (18) for HB is satisfied, and A does not HB D.

It follows then that A is not visible to D, since one of the conditions of (19) is not met. The assert may fail.

How this could play out, then, is described here, in the C++ standard's memory model discussion and here, Section 4.2 Control Dependencies:

- (Some time ahead) Thread 2's branch predictor guesses that the

if will be taken.

- Thread 2 approaches the predicted-taken branch and begins speculative fetching.

- Thread 2 out-of-order and speculatively loads

0xGUNK from y (Evaluation D). (Maybe it was not yet evicted from cache?).

- Thread 1 stores

20 into y (Evaluation A)

- Thread 1 stores

10 into x (Evaluation B)

- Thread 2 loads

10 from x (Evaluation C)

- Thread 2 confirms the

if is taken.

- Thread 2's speculative load of

y == 0xGUNK is committed.

- Thread 2 fails assert.

The reason why it is permitted for Evaluation D to be reordered before C is because a consume does not forbid it. This is unlike an acquire-load, which prevents any load/store after it in program order from being reordered before it. Again, 5.1.2.4(15) states, a consume operation participating in a ‘‘dependency-ordered before’’ relationship provides ordering only with respect to operations to which this consume operation actually carries a dependency, and there most definitely is not a dependency between the two loads.

CppMem verification

CppMem is a tool that helps explore shared data access scenarios under the C11 and C++11 memory models.

For the following code that approximates the scenario in the question:

int main() {

atomic_int x, y;

y.store(30, mo_seq_cst);

{{{ { y.store(20, mo_release);

x.store(10, mo_release); }

||| { r3 = x.load(mo_consume).readsvalue(10);

r4 = y.load(mo_consume); }

}}};

return 0; }

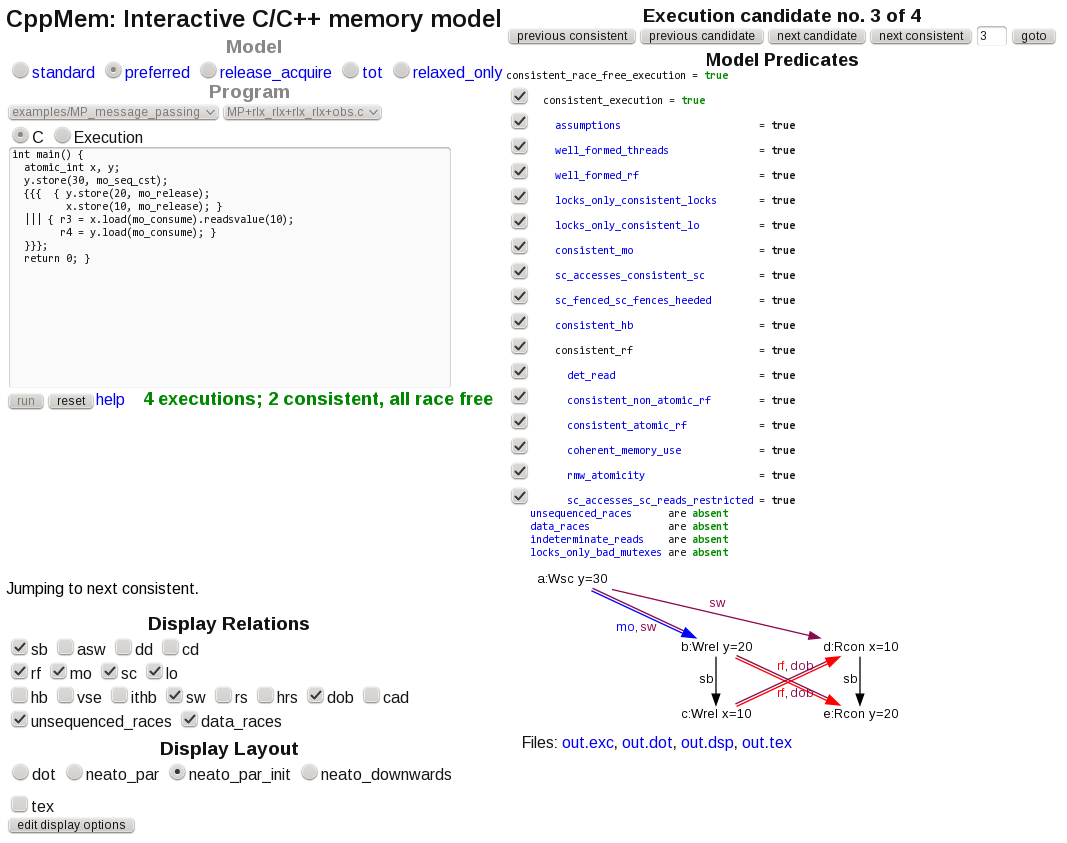

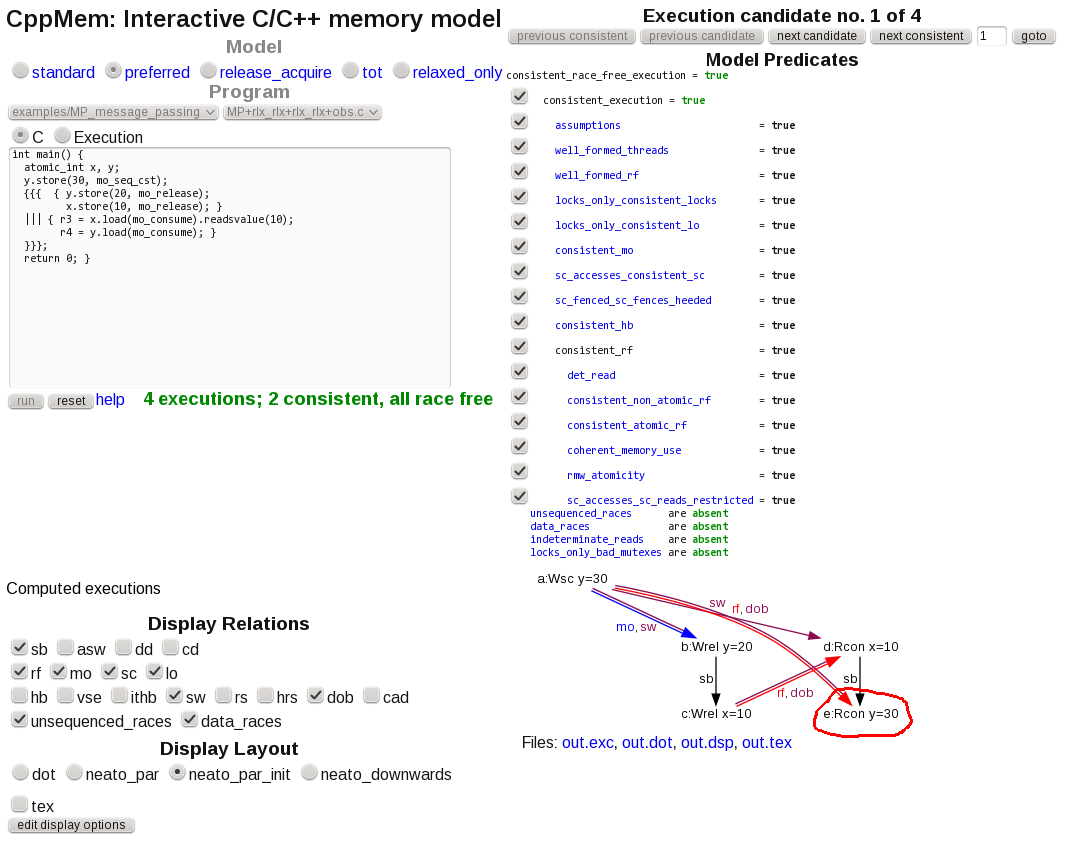

The tool reports two consistent, race-free scenarios, namely:

![Consume, Success Scenario]()

In which y=20 is successfully read, and

![Consume, Failure Scenario]()

In which the "stale" initialization value y=30 is read. Freehand circle is mine.

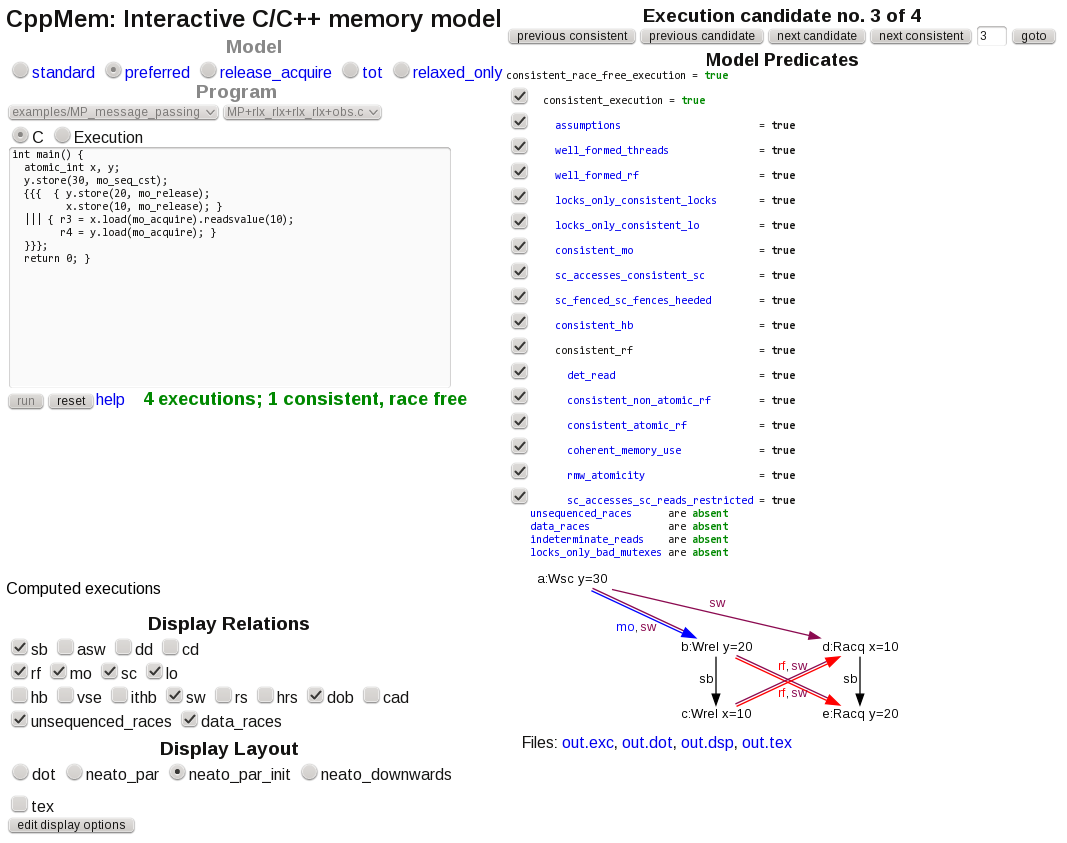

By contrast, when mo_acquire is used for the loads, CppMem reports only one consistent, race-free scenario, namely the correct one:

![Acquire, Success Scenario]()

in which y=20 is read.

return bstatement in the first quoted code block, effectively. Immediately after said code block, he says," The above example can have a return value of 0 if translated straightforwardly into either ARM or Power, right? Both of these can speculate a read into a conditional, and both can translate a consume load into a plain load if data dependencies remain unbroken." – Agavereturn bis supposed to loadbnon-atomically (that's why the suggested alternative of using acquire makes sense). Jens Gustedt claims that there's a guaranteed order between two atomic consume-loads. – Mongo