All benchmarks are run on either Icelake or Whiskey Lake (In Skylake Family).

Summary

I am seeing a strange phenomina where it appears that when a loop transitions from running out of the Uop Cache to running out of the LSD (Loop Stream Detector) there is a spike in Branch Misses that can cause severe performance hits. I tested on both Icelake and Whiskey Lake by benchmarking a nested loop with the outer loop having a sufficiently large body s.t the entire thing did not fit in the LSD itself, but with an inner loop small enough to fit in the LSD.

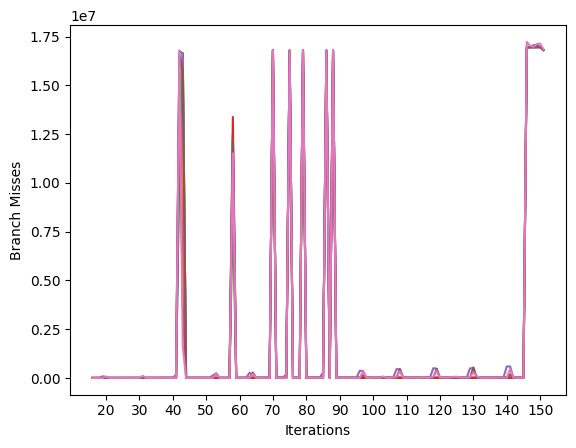

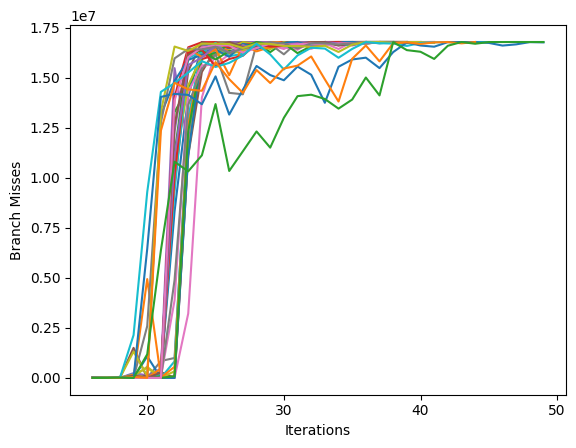

Basically once the inner loop reaches some iteration count decoding seems to switch for idq.dsb_uops (Uop Cache) to lsd.uops (LSD) and at that point there is a large increase in branch-misses (without a corresponding jump in branches) causing a severe performance drop. Note: This only seems to occur for nested loops. Travis Down's Loop Test for example will not show any meaningful variation in branch misses. AFAICT this has something to do with when a loop transitions from running out of the Uop Cache to running out of the LSD.

Questions

What is happening when the loop transitions from running out of the Uop Cache to running out of the LSD that causes this spike in Branch Misses?

Is there a way to avoid this?

Benchmark

This is the minimum reproducible example I could come up with:

Note: If the .p2align statements are removed both loops will fit in

the LSD and there will not be a transitions.

#include <assert.h>

#include <stdint.h>

#include <stdio.h>

#include <stdlib.h>

#include <string.h>

#define BENCH_ATTR __attribute__((noinline, noclone, aligned(4096)))

static const uint64_t outer_N = (1UL << 24);

static void BENCH_ATTR

bench(uint64_t inner_N) {

uint64_t inner_loop_cnt, outer_loop_cnt;

asm volatile(

".p2align 12\n"

"movl %k[outer_N], %k[outer_loop_cnt]\n"

".p2align 6\n"

"1:\n"

"movl %k[inner_N], %k[inner_loop_cnt]\n"

// Extra align surrounding inner loop so that the entire thing

// doesn't execute out of LSD.

".p2align 10\n"

"2:\n"

"decl %k[inner_loop_cnt]\n"

"jnz 2b\n"

".p2align 10\n"

"decl %k[outer_loop_cnt]\n"

"jnz 1b\n"

: [ inner_loop_cnt ] "=&r"(inner_loop_cnt),

[ outer_loop_cnt ] "=&r"(outer_loop_cnt)

: [ inner_N ] "ri"(inner_N), [ outer_N ] "i"(outer_N)

:);

}

int

main(int argc, char ** argv) {

assert(argc > 1);

uint64_t inner_N = atoi(argv[1]);

bench(inner_N);

}

Compile: gcc -O3 -march=native -mtune=native <filename>.c -o <filename>

Run Icelake: sudo perf stat -C 0 --all-user -e cycles -e branches -e branch-misses -x, -e idq.ms_uops -e idq.dsb_uops -e lsd.uops taskset -c 0 ./<filename> <N inner loop iterations>

Run Whiskey Lake: sudo perf stat -C 0 -e cycles -e branches -e branch-misses -x, -e idq.ms_uops -e idq.dsb_uops -e lsd.uops taskset -c 0 ./<filename> <N inner loop iterations>

Graphs

Edit: x label is N iterations of inner loop.

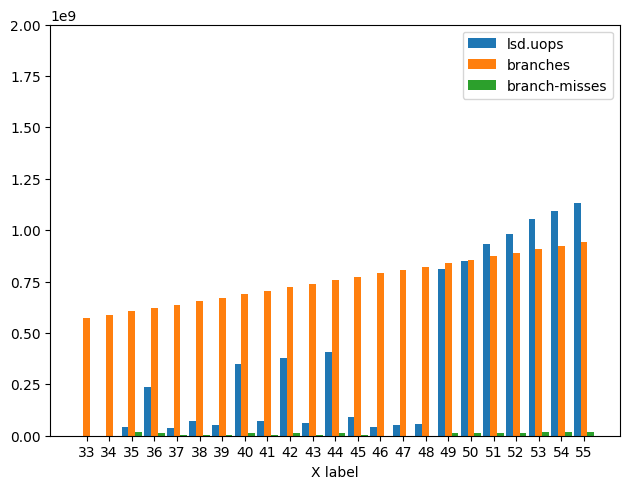

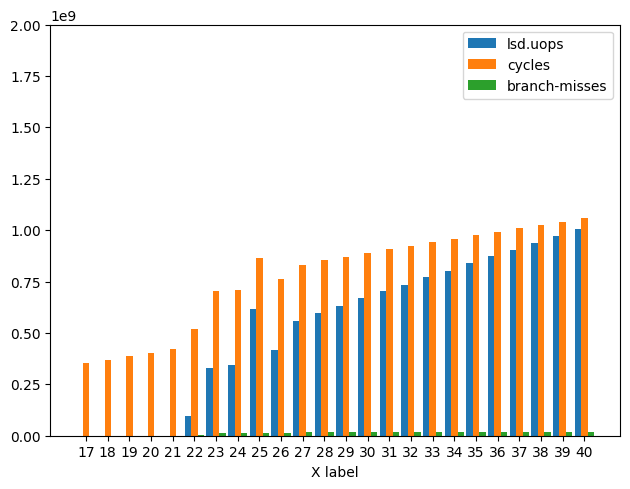

Below is a graph of Branch Misses, Branches, and LSD Uops.

Generally you can see that 1) there is no corresponding jump in Branches. 2) that the number of added Branch Misses stabilizes at a constant. And 3) That there is a strong relationship between the Branch Misses and LSD Uops.

Icelake Graph:

Whiskey Lake Graph:

Below is a graph of Branch Misses, Cycles, and LSD Uops for Icelake only as performance is not affected nearly as much on:

Analysis

Hard numbers below.

For Icelake starting at N = 22 and finishing at N = 27 there

is some fluctuation in the number of uops coming from the LSD vs

Uop Cache and during that time Branch Misses increases by

roughly 3 order of magnitude from 10^4 -> 10^7. During this period

Cycles also increased by a factor of 2. For all N > 27

Branch Misses stays at around 1.67 x 10^7 (roughly outer_loop_N). For N = [17, 40]

branches continues to only increase linearly.

The results for Whiskey Lake look different in that 1) N begins fluctuating at N = 35 and continues to fluctuate until N = 49. And 2) there is less of a

performance impact and more fluctuation in the data. That being said

the increase in Branch-Misses corresponding with transitions from

uops being fed by Uop Cache to being fed by LSD still exists.

Results

Data is mean result for 25 runs.

Icelake Results:

| N | cycles | branches | branch-misses | idq.ms_uops | idq.dsb_uops | lsd.uops |

|---|---|---|---|---|---|---|

| 1 | 33893260 | 67129521 | 1590 | 43163 | 115243 | 83908732 |

| 2 | 42540891 | 83908928 | 1762 | 49023 | 142909 | 100690381 |

| 3 | 50725933 | 100686143 | 1782 | 47656 | 142506 | 117440256 |

| 4 | 67533597 | 117461172 | 1655 | 52538 | 186123 | 134158311 |

| 5 | 68022910 | 134238387 | 1711 | 53405 | 204481 | 150954035 |

| 6 | 85543126 | 151018722 | 1924 | 62445 | 141397 | 167633971 |

| 7 | 84847823 | 167799220 | 1935 | 60248 | 160146 | 184563523 |

| 8 | 101532158 | 184570060 | 1709 | 60064 | 361208 | 201100179 |

| 9 | 101864898 | 201347253 | 1773 | 63827 | 459873 | 217780207 |

| 10 | 118024033 | 218124499 | 1698 | 59480 | 177223 | 234834304 |

| 11 | 118644416 | 234908571 | 2201 | 62514 | 422977 | 251503052 |

| 12 | 134627567 | 251678909 | 1679 | 57262 | 133462 | 268435650 |

| 13 | 285607942 | 268456135 | 1770 | 74070 | 285032524 | 315423 |

| 14 | 302717754 | 285233352 | 1731 | 74663 | 302101097 | 15953 |

| 15 | 321627434 | 302010569 | 81796 | 77831 | 319192830 | 1520819 |

| 16 | 337876736 | 318787786 | 71638 | 77056 | 335904260 | 1265766 |

| 17 | 353054773 | 335565563 | 1798 | 79839 | 352434780 | 15879 |

| 18 | 369800279 | 352344970 | 1978 | 79863 | 369229396 | 16790 |

| 19 | 386921048 | 369119438 | 1972 | 84075 | 385984022 | 16115 |

| 20 | 404248461 | 385896655 | 29454 | 85348 | 402790977 | 510176 |

| 21 | 421100725 | 402673872 | 37598 | 83400 | 419537730 | 729397 |

| 22 | 519623794 | 419451095 | 4447767 | 91209 | 431865775 | 97827331 |

| 23 | 702206338 | 436228323 | 12603617 | 109064 | 427880075 | 327661987 |

| 24 | 710626194 | 453005538 | 12316933 | 106929 | 432926173 | 344838509 |

| 25 | 863214037 | 469782765 | 14641887 | 121776 | 428085132 | 614871430 |

| 26 | 761037251 | 486559974 | 13067814 | 113011 | 438093034 | 418124984 |

| 27 | 832686921 | 503337195 | 16381350 | 113953 | 421924080 | 556915419 |

| 28 | 854713119 | 520114412 | 16642396 | 124448 | 420515666 | 598907353 |

| 29 | 869873144 | 536891629 | 16572581 | 119280 | 421188631 | 629696780 |

| 30 | 889642335 | 553668847 | 16717446 | 120116 | 420086570 | 668628871 |

| 31 | 906912275 | 570446064 | 16735759 | 126094 | 419970933 | 702822733 |

| 32 | 923023862 | 587223281 | 16706519 | 132498 | 420332680 | 735003892 |

| 33 | 940308170 | 604000498 | 16744992 | 124365 | 419945191 | 770185745 |

| 34 | 957075000 | 620777716 | 16726856 | 133675 | 420215897 | 802779119 |

| 35 | 974557538 | 637554932 | 16763071 | 134871 | 419764866 | 838012637 |

| 36 | 991110971 | 654332149 | 16772560 | 130903 | 419641144 | 872037131 |

| 37 | 1008489575 | 671109367 | 16757219 | 138788 | 419900997 | 904638287 |

| 38 | 1024971256 | 687886583 | 16772585 | 139782 | 419663863 | 938988917 |

| 39 | 1041404669 | 704669411 | 16776722 | 137681 | 419617131 | 972896126 |

| 40 | 1058594326 | 721441018 | 16773492 | 142959 | 419662133 | 1006109192 |

| 41 | 1075179100 | 738218235 | 16776636 | 141185 | 419601996 | 1039892900 |

| 42 | 1092093726 | 754995452 | 16776233 | 142793 | 419611902 | 1073373451 |

| 43 | 1108706464 | 771773224 | 16776359 | 139500 | 419610885 | 1106976114 |

| 44 | 1125413652 | 788549886 | 16764637 | 143717 | 419889127 | 1139628280 |

| 45 | 1142023614 | 805327103 | 16778640 | 144397 | 419558217 | 1174329696 |

| 46 | 1158833317 | 822104321 | 16765518 | 148045 | 419889914 | 1206833484 |

| 47 | 1175665684 | 838881537 | 16778437 | 148347 | 419562885 | 1241397845 |

| 48 | 1192454164 | 855658755 | 16778865 | 153651 | 419552747 | 1275006511 |

| 49 | 1210199084 | 872436025 | 16778287 | 152468 | 419599314 | 1307945613 |

| 50 | 1226321832 | 889213188 | 16778464 | 155552 | 419572344 | 1341893668 |

| 51 | 1242886388 | 905990406 | 16778745 | 155401 | 419559249 | 1375589883 |

| 52 | 1259559053 | 922767623 | 16778809 | 154847 | 419554082 | 1409206082 |

| 53 | 1276875799 | 939544839 | 16778460 | 162521 | 419576455 | 1442424993 |

| 54 | 1293113199 | 956322057 | 16778931 | 154913 | 419550955 | 1476316161 |

| 55 | 1310449232 | 973099274 | 16778534 | 157364 | 419578102 | 1509485876 |

| 56 | 1327022109 | 989876491 | 16778794 | 162881 | 419562403 | 1543193559 |

| 57 | 1344097516 | 1006653708 | 16778906 | 157486 | 419567545 | 1576414302 |

| 58 | 1362935064 | 1023430928 | 16778959 | 315120 | 419583132 | 1609691339 |

| 59 | 1381567560 | 1040208143 | 16778564 | 179997 | 419661259 | 1640660745 |

| 60 | 1394829416 | 1056985359 | 16778779 | 167613 | 419575969 | 1677034188 |

| 61 | 1411847237 | 1073762626 | 16778071 | 166332 | 419613028 | 1710194702 |

| 62 | 1428918439 | 1090539795 | 16778409 | 168073 | 419610487 | 1743644637 |

| 63 | 1445223241 | 1107317011 | 16778486 | 172446 | 419591254 | 1777573503 |

| 64 | 1461530579 | 1124094228 | 16769606 | 169559 | 419970612 | 1810351736 |

Whiskey Lake Results:

| N | cycles | branches | branch-misses | idq.dsb_uops | lsd.uops |

|---|---|---|---|---|---|

| 1 | 8332553879 | 35005847 | 37925 | 1799462 | 6019 |

| 2 | 8329926329 | 51163346 | 34338 | 1114352 | 5919 |

| 3 | 8357233041 | 67925775 | 32270 | 9241935 | 5555 |

| 4 | 8379609449 | 85364250 | 35667 | 18215077 | 5712 |

| 5 | 8394301337 | 101563554 | 33177 | 26392216 | 2159 |

| 6 | 8409830612 | 118918934 | 35007 | 35318763 | 5295 |

| 7 | 8435794672 | 135162597 | 35592 | 43033739 | 4478 |

| 8 | 8445843118 | 152636271 | 37802 | 52154850 | 5629 |

| 9 | 8459141676 | 168577876 | 30766 | 59245754 | 1543 |

| 10 | 8475484632 | 185354280 | 30825 | 68059212 | 4672 |

| 11 | 8493529857 | 202489273 | 31703 | 77386249 | 5556 |

| 12 | 8509281533 | 218912407 | 32133 | 84390084 | 4399 |

| 13 | 8528605921 | 236303681 | 33056 | 93995496 | 2093 |

| 14 | 8553971099 | 252439989 | 572416 | 99700289 | 2477 |

| 15 | 8558526147 | 269148605 | 29912 | 109772044 | 6121 |

| 16 | 8576658106 | 286414453 | 29839 | 118504526 | 5850 |

| 17 | 8591545887 | 302698593 | 28993 | 126409458 | 4865 |

| 18 | 8611628234 | 319960954 | 32568 | 136298306 | 5066 |

| 19 | 8627289083 | 336312187 | 30094 | 143759724 | 6598 |

| 20 | 8644741581 | 353730396 | 49458 | 152217853 | 9275 |

| 21 | 8685908403 | 369886284 | 1175195 | 161313923 | 7958903 |

| 22 | 8694494654 | 387336207 | 354008 | 169541244 | 2553802 |

| 23 | 8702920906 | 403389097 | 29315 | 176524452 | 12932 |

| 24 | 8711458401 | 420211718 | 31924 | 184984842 | 11574 |

| 25 | 8729941722 | 437299615 | 32472 | 194553843 | 12002 |

| 26 | 8743658904 | 453739403 | 28809 | 202074676 | 13279 |

| 27 | 8763317458 | 470902005 | 32298 | 211321630 | 15377 |

| 28 | 8788189716 | 487432842 | 37105 | 218972477 | 27666 |

| 29 | 8796580152 | 504414945 | 36756 | 228334744 | 79954 |

| 30 | 8821174857 | 520930989 | 39550 | 235849655 | 140461 |

| 31 | 8818857058 | 537611096 | 34142 | 648080 | 79191 |

| 32 | 8855038758 | 555138781 | 37680 | 18414880 | 70489 |

| 33 | 8870680446 | 571194669 | 37541 | 34596108 | 131455 |

| 34 | 8888946679 | 588222521 | 33724 | 52553756 | 80009 |

| 35 | 9256640352 | 604791887 | 16658672 | 132185723 | 41881719 |

| 36 | 9189040776 | 621918353 | 12296238 | 257921026 | 235389707 |

| 37 | 8962737456 | 638241888 | 1086663 | 109613368 | 35222987 |

| 38 | 9005853511 | 655453884 | 2059624 | 131945369 | 73389550 |

| 39 | 9005576553 | 671845678 | 1434478 | 143002441 | 51959363 |

| 40 | 9284680907 | 688991063 | 12776341 | 349762585 | 347998221 |

| 41 | 9049931865 | 705399210 | 1778532 | 174597773 | 72566933 |

| 42 | 9314836359 | 722226758 | 12743442 | 365270833 | 380415682 |

| 43 | 9072200927 | 739449289 | 1344663 | 205181163 | 61284843 |

| 44 | 9346737669 | 755766179 | 12681859 | 383580355 | 409359111 |

| 45 | 9117099955 | 773167996 | 1801713 | 235583664 | 88985013 |

| 46 | 9108062783 | 789247474 | 860680 | 250992592 | 43508069 |

| 47 | 9129892784 | 806871038 | 984804 | 268229102 | 51249366 |

| 48 | 9146468279 | 822765997 | 1018387 | 282312588 | 58278399 |

| 49 | 9476835578 | 840085058 | 13985421 | 241172394 | 809315446 |

| 50 | 9495578885 | 856579327 | 14155046 | 241909464 | 847629148 |

| 51 | 9537115189 | 873483093 | 15057500 | 238735335 | 932663942 |

| 52 | 9556102594 | 890026435 | 15322279 | 238194482 | 982429654 |

| 53 | 9589094741 | 907142375 | 15899251 | 234845868 | 1052080437 |

| 54 | 9609053333 | 923477989 | 16049518 | 233890599 | 1092323040 |

| 55 | 9628950166 | 940997348 | 16172619 | 235383688 | 1131146866 |

| 56 | 9650657175 | 957049360 | 16445697 | 231276680 | 1183699383 |

| 57 | 9666446210 | 973785857 | 16330748 | 233203869 | 1205098118 |

| 58 | 9687274222 | 990692518 | 16523542 | 230842647 | 1254624242 |

| 59 | 9706652879 | 1007946602 | 16576268 | 231502185 | 1288374980 |

| 60 | 9720091630 | 1024044005 | 16547047 | 230966608 | 1321807705 |

| 61 | 9741079017 | 1041285110 | 16635400 | 230873663 | 1362929599 |

| 62 | 9761596587 | 1057847755 | 16683756 | 230289842 | 1399235989 |

| 63 | 9782104875 | 1075055403 | 16299138 | 237386812 | 1397167324 |

| 64 | 9790122724 | 1091147494 | 16650471 | 229928585 | 1463076072 |

Edit: 2 things worth noting:

If I add padding to the inner loop so it won't fit in the uop cache I don't see this behavior until ~150 iterations.

Adding an

lfenceon with the padding in the outer loop changes N threshold to 31.

edit2: Benchmark which clears branch history The condition was reversed. It should be cmove not cmovne. With the fixed version any iteration count sees elevated Branch Misses at the same rate as above (1.67 * 10^9). This means the LSD is not itself causing Branch Misses, but leaves open the possibility that LSD is in some way defeating the Branch Predictor (what I believe to be the case).

static void BENCH_ATTR

bench(uint64_t inner_N) {

uint64_t inner_loop_cnt, outer_loop_cnt;

asm volatile(

".p2align 12\n"

"movl %k[outer_N], %k[outer_loop_cnt]\n"

".p2align 6\n"

"1:\n"

"testl $3, %k[outer_loop_cnt]\n"

"movl $1000, %k[inner_loop_cnt]\n"

THIS NEEDS TO BE CMOVE

"cmovne %k[inner_N], %k[inner_loop_cnt]\n"

// Extra align surrounding inner loop so that the entire thing

// doesn't execute out of LSD.

".p2align 10\n"

"2:\n"

"decl %k[inner_loop_cnt]\n"

"jnz 2b\n"

".p2align 10\n"

"decl %k[outer_loop_cnt]\n"

"jnz 1b\n"

: [ inner_loop_cnt ] "=&r"(inner_loop_cnt),

[ outer_loop_cnt ] "=&r"(outer_loop_cnt)

: [ inner_N ] "ri"(inner_N), [ outer_N ] "i"(outer_N)

:);

}

taskset perf ...so taskset isn't part of the workload you're profiling. Also, if you config yourperf_event_paranoidto0for example, you can avoid needingsudoon benchmark runs. – Cuprite