Since GPU driver vendors don't usually bother to implement noiseX in GLSL, I'm looking for a "graphics randomization Swiss army knife" set of utility functions, preferably optimised for use within GPU shaders. I prefer GLSL, but code in any language will do; I'm OK with translating it to GLSL on my own.

Specifically, I'd expect:

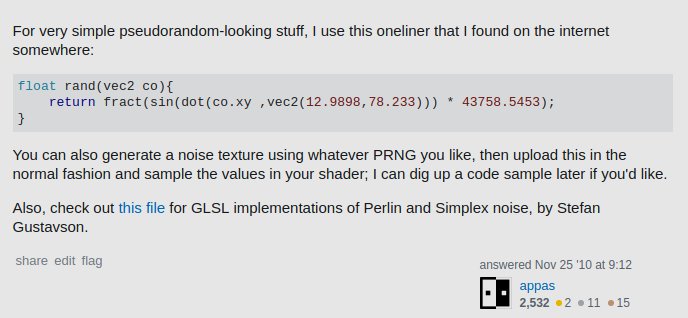

a) Pseudo-random functions: N-dimensional, with a uniform distribution over [-1,1] or over [0,1], calculated from an M-dimensional seed (ideally any value, but I'm OK with having the seed restricted to, say, 0..1 to get a uniformly distributed result). Something like:

    float random (T seed);
    vec2 random2 (T seed);
    vec3 random3 (T seed);
    vec4 random4 (T seed);
    // T being either float, vec2, vec3, vec4 - ideally.
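To make the request concrete, here is a rough sketch of the shape I have in mind, not a vetted generator. It assumes GLSL 3.30+ (for `uint` and the bit-cast built-ins). The helper names `mixBits` and `toUnitFloat` are made up for illustration, and the mixing constants are only an assumption; I have not checked their statistical quality.

```glsl
// Sketch only: requires GLSL 3.30+ (or ES 3.00+) for uint and floatBitsToUint.

// Hypothetical integer bit mixer; the shift/multiply constants are illustrative.
uint mixBits(uint x) {
    x ^= x >> 16u;
    x *= 0x7feb352du;
    x ^= x >> 15u;
    x *= 0x846ca68bu;
    x ^= x >> 16u;
    return x;
}

// Fold a vector seed into a single uint by chaining the mixer.
uint mixBits(uvec2 v) { return mixBits(v.x ^ mixBits(v.y)); }
uint mixBits(uvec3 v) { return mixBits(v.x ^ mixBits(v.y ^ mixBits(v.z))); }

// Map 32 hashed bits to a float in [0,1): place the top 23 bits in the
// mantissa of 1.0 (giving a value in [1,2)) and subtract 1.
float toUnitFloat(uint h) {
    return uintBitsToFloat(0x3F800000u | (h >> 9u)) - 1.0;
}

// Scalar result from seeds of different dimensionality, via overloading.
float random(float seed) { return toUnitFloat(mixBits(floatBitsToUint(seed))); }
float random(vec2 seed)  { return toUnitFloat(mixBits(floatBitsToUint(seed))); }
float random(vec3 seed)  { return toUnitFloat(mixBits(floatBitsToUint(seed))); }

// Vector result: hash once, then re-mix with an offset for the second component.
vec2 random2(vec2 seed) {
    uint h = mixBits(floatBitsToUint(seed));
    return vec2(toUnitFloat(h), toUnitFloat(mixBits(h + 1u)));
}
```

Because the seed is hashed through its bit pattern, any float value should be usable as a seed here, and `2.0 * random(seed) - 1.0` would remap the result to [-1,1).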

b) Continuous noise like Perlin noise: again N-dimensional, with a roughly uniform distribution, a bounded range of values and, well, looking good (some options to configure the appearance, like Perlin levels, would be useful too). I'd expect signatures like:

    float noise (T coord, TT seed);
    vec2 noise2 (T coord, TT seed);
    // ...
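Again only to illustrate the signatures, a rough 2D sketch follows. Note that it is plain value noise (random values on a grid, smoothly interpolated), not true Perlin gradient noise, and its output is not uniformly distributed. It assumes GLSL 3.30+ and a `uint` seed; `mixBits`, `hashCell` and `layeredNoise` are hypothetical names, and the hash constants are illustrative.

```glsl
// Sketch only: value noise, not Perlin noise. Requires GLSL 3.30+.

// Hypothetical integer bit mixer; constants are illustrative.
uint mixBits(uint x) {
    x ^= x >> 16u;
    x *= 0x7feb352du;
    x ^= x >> 15u;
    x *= 0x846ca68bu;
    x ^= x >> 16u;
    return x;
}

// Pseudo-random value in [0,1) for one integer grid cell and a seed.
float hashCell(ivec2 cell, uint seed) {
    uint h = mixBits(uint(cell.x) ^ mixBits(uint(cell.y) ^ mixBits(seed)));
    return uintBitsToFloat(0x3F800000u | (h >> 9u)) - 1.0;
}

// Continuous noise in [0,1): bilinear blend of the four cell corners
// with a smoothstep-style fade so the result has no visible grid creases.
float noise(vec2 coord, uint seed) {
    vec2 base = floor(coord);
    ivec2 cell = ivec2(base);
    vec2 f = coord - base;
    vec2 u = f * f * (3.0 - 2.0 * f);
    float a = hashCell(cell, seed);
    float b = hashCell(cell + ivec2(1, 0), seed);
    float c = hashCell(cell + ivec2(0, 1), seed);
    float d = hashCell(cell + ivec2(1, 1), seed);
    return mix(mix(a, b, u.x), mix(c, d, u.x), u.y);
}

// "Levels" option: sum several layers at doubling frequency and halving
// amplitude, then normalise so the result stays within [0,1).
float layeredNoise(vec2 coord, uint seed, int levels) {
    float sum = 0.0, amp = 0.5, norm = 0.0;
    for (int i = 0; i < levels; ++i) {
        sum += amp * noise(coord, seed + uint(i));
        norm += amp;
        coord *= 2.0;
        amp *= 0.5;
    }
    return sum / max(norm, 1e-6);
}
```

A fragment shader might call something like `layeredNoise(gl_FragCoord.xy * 0.01, 42u, 4)`; the scale, seed and level count there are arbitrary.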

I'm not very well versed in random number generation theory, so I'd most eagerly go for a pre-made solution, but I'd also appreciate answers like "here's a very good, efficient 1D rand(), and let me explain how to build a good N-dimensional rand() on top of it...".