I'm creating a terrain mesh, and following this SO answer I'm trying to migrate my CPU-computed normals to a shader-based version. The goal is to improve performance by reducing my mesh resolution and using a normal map computed in the fragment shader.

I'm using a Mapbox height map for the terrain data. Tiles look like this:

And elevation at each pixel is given by the following formula:

const elevation = -10000.0 + ((red * 256.0 * 256.0 + green * 256.0 + blue) * 0.1);
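For reference, here is a minimal sketch of that decoding on the CPU side. It assumes the three channels are 8-bit values in the range 0..255; `elevationAt` is a hypothetical helper that reads from an RGBA byte array such as the `data` field of a canvas `ImageData` object.

```javascript
// Decode one terrain-RGB pixel into an elevation, using the formula above.
// Assumes red, green and blue are 8-bit channel values (0..255).
function decodeElevation(red, green, blue) {
  return -10000.0 + (red * 256.0 * 256.0 + green * 256.0 + blue) * 0.1;
}

// Hypothetical helper: read pixel (x, y) from a tightly packed RGBA byte
// array that is `width` pixels wide.
function elevationAt(data, width, x, y) {
  const i = (y * width + x) * 4; // 4 bytes per pixel: R, G, B, A
  return decodeElevation(data[i], data[i + 1], data[i + 2]);
}
```

With these definitions, the pixel (1, 134, 160) encodes the packed value 100000, which the formula maps to an elevation of about 0.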

My original code first creates a dense mesh (256*256 squares of 2 triangles each) and then computes triangle and vertex normals. To get a visually satisfying result I was dividing the elevation by 5000 to match the tile's width and height in my scene (in the future I'll do a proper computation to display the real elevation).
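The CPU approach described above can be sketched roughly as follows. This is not the code from my project, only an illustration of the general technique: it assumes a row-major grid of already scaled heights, a uniform spacing `cellSize`, and z as the height axis. The function name and parameters are made up for the example.

```javascript
// Sketch: per-vertex normals for a regular height grid (z is up here).
// heights: Float32Array of cols * rows values, row-major, already scaled.
// cellSize: horizontal distance between neighbouring vertices.
function computeVertexNormals(heights, cols, rows, cellSize) {
  const normals = new Float32Array(cols * rows * 3);
  const position = ([x, y]) => [x * cellSize, y * cellSize, heights[y * cols + x]];

  // Add the (unnormalized, area-weighted) face normal of triangle a-b-c
  // to each of its three vertices.
  function addFaceNormal(a, b, c) {
    const pa = position(a), pb = position(b), pc = position(c);
    const u = [pb[0] - pa[0], pb[1] - pa[1], pb[2] - pa[2]];
    const v = [pc[0] - pa[0], pc[1] - pa[1], pc[2] - pa[2]];
    const n = [
      u[1] * v[2] - u[2] * v[1],
      u[2] * v[0] - u[0] * v[2],
      u[0] * v[1] - u[1] * v[0],
    ];
    for (const [x, y] of [a, b, c]) {
      const i = (y * cols + x) * 3;
      normals[i] += n[0];
      normals[i + 1] += n[1];
      normals[i + 2] += n[2];
    }
  }

  // Two triangles per grid cell, both wound so the normal points towards +z.
  for (let y = 0; y < rows - 1; y++) {
    for (let x = 0; x < cols - 1; x++) {
      addFaceNormal([x, y], [x + 1, y], [x, y + 1]);
      addFaceNormal([x + 1, y], [x + 1, y + 1], [x, y + 1]);
    }
  }

  // Normalize the accumulated sums to get the vertex normals.
  for (let i = 0; i < normals.length; i += 3) {
    const len = Math.hypot(normals[i], normals[i + 1], normals[i + 2]);
    normals[i] /= len;
    normals[i + 1] /= len;
    normals[i + 2] /= len;
  }
  return normals;
}
```

Doing this for every vertex of a 256*256 grid is the part that made loading slow.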

I was drawing with these simple shaders:

Vertex shader:

    uniform mat4 u_Model;
    uniform mat4 u_View;
    uniform mat4 u_Projection;

    attribute vec3 a_Position;
    attribute vec3 a_Normal;
    attribute vec2 a_TextureCoordinates;

    varying vec3 v_Position;
    varying vec3 v_Normal;
    varying mediump vec2 v_TextureCoordinates;

    void main() {
        v_TextureCoordinates = a_TextureCoordinates;
        v_Position = vec3(u_View * u_Model * vec4(a_Position, 1.0));
        v_Normal = vec3(u_View * u_Model * vec4(a_Normal, 0.0));
        gl_Position = u_Projection * u_View * u_Model * vec4(a_Position, 1.0);
    }

Fragment shader:

    precision mediump float;

    varying vec3 v_Position;
    varying vec3 v_Normal;
    varying mediump vec2 v_TextureCoordinates;

    uniform sampler2D texture;

    void main() {
        vec3 lightVector = normalize(-v_Position);
        float diffuse = max(dot(v_Normal, lightVector), 0.1);
        highp vec4 textureColor = texture2D(texture, v_TextureCoordinates);
        gl_FragColor = vec4(textureColor.rgb * diffuse, textureColor.a);
    }

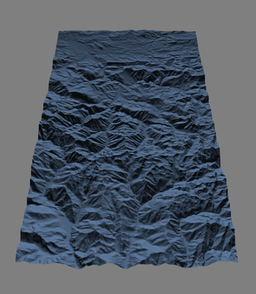

It was slow but gave visually satisfying results:

Now I have removed all the CPU-based normal computation code and replaced my shaders with these:

Vertex shader:

    #version 300 es

    precision highp float;
    precision highp int;

    uniform mat4 u_Model;
    uniform mat4 u_View;
    uniform mat4 u_Projection;

    in vec3 a_Position;
    in vec2 a_TextureCoordinates;

    out vec3 v_Position;
    out vec2 v_TextureCoordinates;
    out mat4 v_Model;
    out mat4 v_View;

    void main() {
        v_TextureCoordinates = a_TextureCoordinates;
        v_Model = u_Model;
        v_View = u_View;
        v_Position = vec3(u_View * u_Model * vec4(a_Position, 1.0));
        gl_Position = u_Projection * u_View * u_Model * vec4(a_Position, 1.0);
    }

Fragment shader:

    #version 300 es

    precision highp float;
    precision highp int;

    in vec3 v_Position;
    in vec2 v_TextureCoordinates;
    in mat4 v_Model;
    in mat4 v_View;

    uniform sampler2D u_dem;
    uniform sampler2D u_texture;

    out vec4 color;

    const vec2 size = vec2(2.0,0.0);
    const ivec3 offset = ivec3(-1,0,1);

    float getAltitude(vec4 pixel) {
        float red = pixel.x;
        float green = pixel.y;
        float blue = pixel.z;
        return (-10000.0 + ((red * 256.0 * 256.0 + green * 256.0 + blue) * 0.1)) * 6.0; // Why * 6 and not / 5000 ??
    }

    void main() {
        float s01 = getAltitude(textureOffset(u_dem, v_TextureCoordinates, offset.xy));
        float s21 = getAltitude(textureOffset(u_dem, v_TextureCoordinates, offset.zy));
        float s10 = getAltitude(textureOffset(u_dem, v_TextureCoordinates, offset.yx));
        float s12 = getAltitude(textureOffset(u_dem, v_TextureCoordinates, offset.yz));

        vec3 va = (vec3(size.xy, s21 - s01));
        vec3 vb = (vec3(size.yx, s12 - s10));
        vec3 normal = normalize(cross(va, vb));
        vec3 transformedNormal = normalize(vec3(v_View * v_Model * vec4(normal, 0.0)));

        vec3 lightVector = normalize(-v_Position);
        float diffuse = max(dot(transformedNormal, lightVector), 0.1);
        highp vec4 textureColor = texture(u_texture, v_TextureCoordinates);
        color = vec4(textureColor.rgb * diffuse, textureColor.a);
    }
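To make it easier to compare the two versions, here is a sketch of the same central-difference normal estimate written in JavaScript. It mirrors the `va`, `vb` and `cross` lines of the fragment shader; `sample` is a hypothetical callback returning the decoded, scaled altitude at a texel, and `step` stands in for the `2.0` hard-coded in `size`.

```javascript
// CPU replica of the fragment shader's normal estimate (debugging sketch).
// sample(x, y): hypothetical callback returning the scaled altitude at texel (x, y).
// step: horizontal distance between the two opposite samples (2.0 in the shader).
function normalFromHeights(sample, x, y, step = 2.0) {
  const s01 = sample(x - 1, y);
  const s21 = sample(x + 1, y);
  const s10 = sample(x, y - 1);
  const s12 = sample(x, y + 1);

  const va = [step, 0.0, s21 - s01];
  const vb = [0.0, step, s12 - s10];

  // cross(va, vb), then normalize, exactly as in the shader.
  const n = [
    va[1] * vb[2] - va[2] * vb[1],
    va[2] * vb[0] - va[0] * vb[2],
    va[0] * vb[1] - va[1] * vb[0],
  ];
  const len = Math.hypot(n[0], n[1], n[2]);
  return n.map((c) => c / len);
}
```

The resulting vector has its z component along the height axis of the texture, and the altitude differences are compared against a horizontal step of 2.0 texels, so a comparison with the CPU normals only makes sense if the mesh uses the same axis convention and the same ratio between horizontal and vertical units.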

It now loads nearly instantly, but something is wrong:

- in the fragment shader I had to multiply the elevation by 6 rather than dividing by 5000 to get something close to my original code

- the result is not as good. Especially when I tilt the scene, the shadows are very dark (the more I tilt the darker they get):
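One thing that may be related to the first point, sketched here as plain arithmetic rather than as a confirmed diagnosis: if the height map is uploaded as an ordinary 8-bit RGBA texture, `texture()` and `textureOffset()` return each channel in the range 0..1, while the elevation formula was written for channel values in the range 0..255. The names below are made up for the example, and the `-10000.0` offset is left out because it cancels when two altitudes are subtracted.

```javascript
// The packed part of the elevation formula, applied to whatever channel
// values it is given (offset and extra scaling left out on purpose).
function packedHeight(red, green, blue) {
  return (red * 256.0 * 256.0 + green * 256.0 + blue) * 0.1;
}

const bytes = [1, 134, 160];                  // channel values as 0..255 bytes
const normalized = bytes.map((c) => c / 255); // the same pixel as 0..1 floats

const fromBytes = packedHeight(...bytes);
const fromNormalized = packedHeight(...normalized);

// The formula is linear in the channels, so the two results differ by 255.
const ratio = fromBytes / fromNormalized;
console.log(ratio);
```

So the same formula produces height differences 255 times smaller in the shader than on the CPU, before any other scale factor (such as the horizontal step used for the normal) is taken into account.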

Can you spot what causes that difference?

EDIT: I created two JSFiddles:

- first version with CPU-computed vertex normals: http://jsfiddle.net/tautin/tmugzv6a/10

- second version with GPU-computed normal map: http://jsfiddle.net/tautin/8gqa53e1/42

The problem appears when you play with the tilt slider.

[...] `a_Position` variable. Do you set the height of the vertices according to the height in your heightmap? – Cutinize

[...] `texture2D()` call. – Cutinize