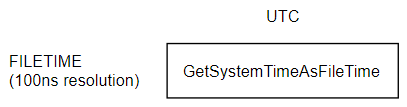

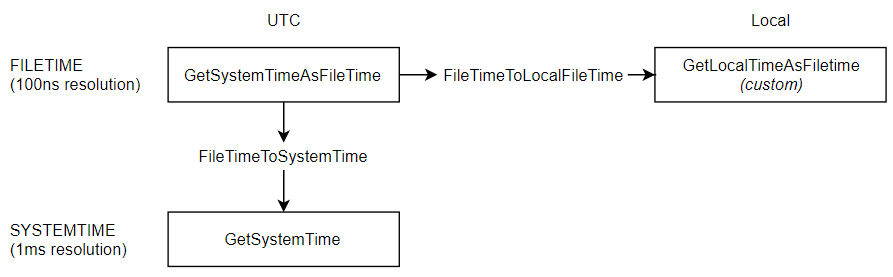

In Windows, the base of all time is a function called GetSystemTimeAsFileTime.

- It returns a structure that is capable of holding a time with 100ns resolution.

- It is kept in UTC.

The FILETIME structure records the number of 100ns intervals since January 1, 1601 (UTC), meaning its resolution is limited to 100ns.
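To make the tick count concrete, here is a minimal Python sketch of the arithmetic. It does not call the Windows API; it only models a FILETIME as an integer. The helper names (`filetime_from_parts`, `filetime_to_datetime`, `datetime_to_filetime`) are made up for illustration. The `high`/`low` arguments stand in for the two 32-bit halves (`dwHighDateTime`, `dwLowDateTime`) that the real structure stores.

```python
from datetime import datetime, timedelta, timezone

# FILETIME epoch: midnight, January 1, 1601 (UTC).
FILETIME_EPOCH = datetime(1601, 1, 1, tzinfo=timezone.utc)
TICKS_PER_SECOND = 10_000_000  # one tick = 100 ns


def filetime_from_parts(high, low):
    """Combine the two 32-bit halves of a FILETIME into one 64-bit tick count."""
    return (high << 32) | low


def filetime_to_datetime(ticks):
    """Convert a tick count to an aware UTC datetime.

    Python datetimes only hold microseconds, so the last decimal digit
    of the 100 ns count is dropped here.
    """
    return FILETIME_EPOCH + timedelta(microseconds=ticks // 10)


def datetime_to_filetime(dt):
    """Convert an aware datetime back to 100 ns ticks since 1601-01-01 UTC."""
    delta = dt - FILETIME_EPOCH
    whole_seconds = delta.days * 86_400 + delta.seconds
    return whole_seconds * TICKS_PER_SECOND + delta.microseconds * 10


if __name__ == "__main__":
    unix_epoch_ticks = filetime_from_parts(0x019DB1DE, 0xD53E8000)
    print(unix_epoch_ticks, filetime_to_datetime(unix_epoch_ticks))
```

The value used in the demo is the tick count of the Unix epoch (January 1, 1970), which is a handy constant when converting between the two time scales.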

This forms our first function:

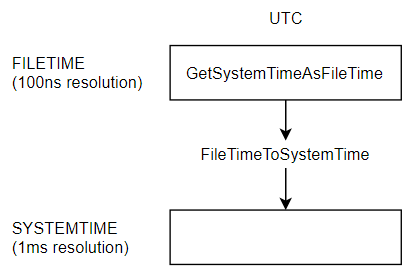

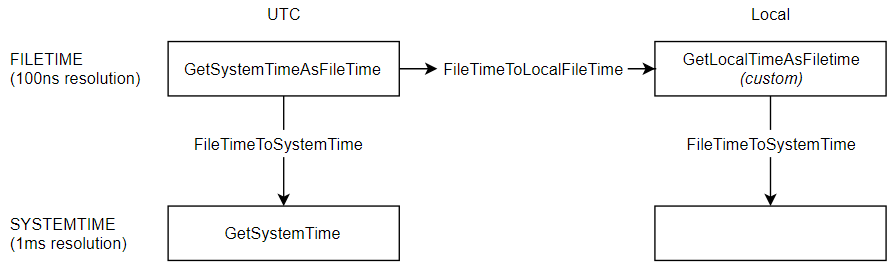

A 64-bit number of 100ns ticks since January 1, 1601 is somewhat unwieldy. Windows provides a handy helper function, FileTimeToSystemTime, that can decode this 64-bit integer into useful parts:

record SYSTEMTIME {

wYear: Word;

wMonth: Word;

wDayOfWeek: Word;

wDay: Word;

wHour: Word;

wMinute: Word;

wSecond: Word;

wMilliseconds: Word;

}

Notice that SYSTEMTIME has a built-in resolution limitation of 1ms: its smallest field is wMilliseconds.

Now we have a way to go from FILETIME to SYSTEMTIME:

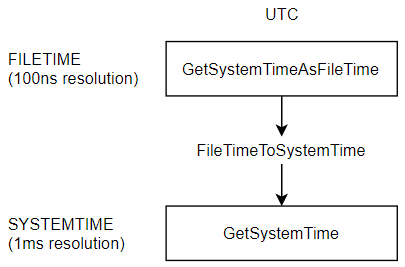
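As a rough illustration of what that decoding involves, the following Python sketch splits a tick count into SYSTEMTIME-style fields. It is a model of the idea, not the Windows implementation. `SystemTime` and `file_time_to_system_time` are hypothetical names, and the field order simply mirrors the record above. Note how anything finer than a millisecond is discarded.

```python
from collections import namedtuple
from datetime import datetime, timedelta

# Same field order as the SYSTEMTIME record.
SystemTime = namedtuple(
    "SystemTime",
    "wYear wMonth wDayOfWeek wDay wHour wMinute wSecond wMilliseconds",
)

FILETIME_EPOCH = datetime(1601, 1, 1)  # naive value, interpreted as UTC
TICKS_PER_MILLISECOND = 10_000         # 10,000 ticks of 100 ns each


def file_time_to_system_time(ticks):
    """Decode 100 ns ticks since 1601-01-01 into calendar fields."""
    # Integer division drops everything below 1 ms.
    whole_ms = ticks // TICKS_PER_MILLISECOND
    dt = FILETIME_EPOCH + timedelta(milliseconds=whole_ms)
    # SYSTEMTIME counts Sunday as 0; Python's weekday() counts Monday as 0.
    day_of_week = (dt.weekday() + 1) % 7
    return SystemTime(
        dt.year, dt.month, day_of_week, dt.day,
        dt.hour, dt.minute, dt.second, dt.microsecond // 1000,
    )


if __name__ == "__main__":
    # Unix epoch plus 1.2345678 seconds, expressed in ticks.
    print(file_time_to_system_time(116444736000000000 + 12_345_678))
```

The input carries seven decimal digits of sub-second precision, but only three of them survive in `wMilliseconds`.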

We could write a function to get the current system time as a SYSTEMTIME structure:

SYSTEMTIME GetSystemTime()

{

//Get the current system time (UTC) in its native 100ns FILETIME structure

FILETIME ftNow;

GetSystemTimeAsFileTime(ref ftNow);

//Decode the 100ns intervals into a 1ms resolution SYSTEMTIME for us

SYSTEMTIME stNow;

FileTimeToSystemTime(ftNow, ref stNow);

return stNow;

}

Except Windows already wrote such a function for you: GetSystemTime.

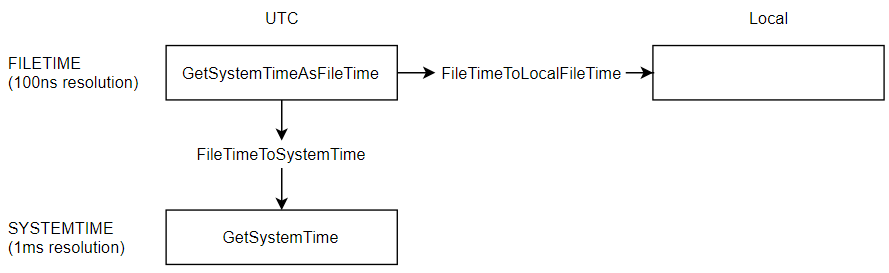

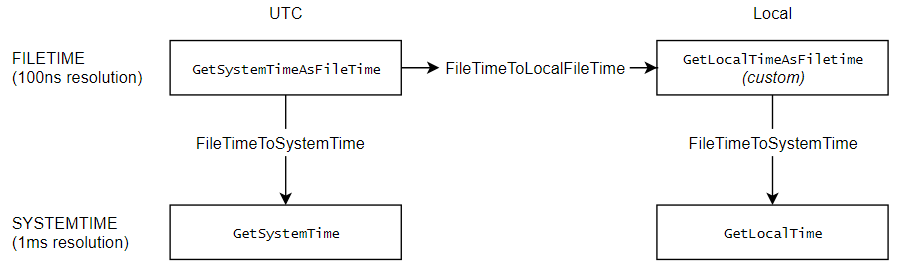

Local, rather than UTC

Now what if you don't want the current time in UTC? What if you want it in your local time? Windows provides a function to convert a FILETIME that is in UTC into your local time: FileTimeToLocalFileTime.

![enter image description here]()

You could write a function that returns a FILETIME already converted to local time:

FILETIME GetLocalTimeAsFileTime()

{

FILETIME ftNow;

GetSystemTimeAsFileTime(ref ftNow);

//convert to local

FILETIME ftNowLocal;

FileTimeToLocalFileTime(ftNow, ref ftNowLocal);

return ftNowLocal;

}

![enter image description here]()

And let's say you want to decode the local FILETIME into a SYSTEMTIME. That's no problem, you can use FileTimeToSystemTime again:

![enter image description here]()

Fortunately, Windows already provides a function that returns this value for you: GetLocalTime.

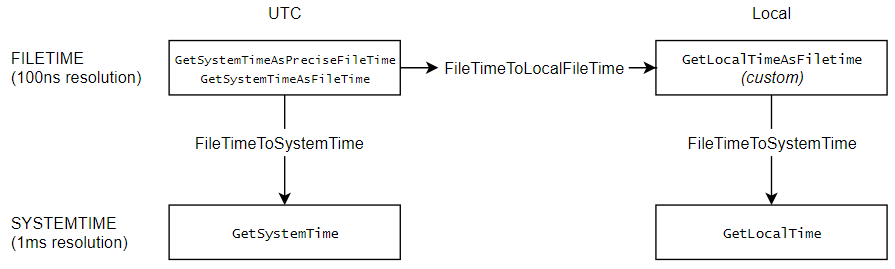

Precise

There is one more consideration. Before Windows 8, the system clock only advanced in steps of around 15ms. Windows 8 added a higher-precision clock with a resolution of 100ns (matching the resolution of FILETIME).

- GetSystemTimeAsFileTime (legacy, 15ms resolution)
- GetSystemTimePreciseAsFileTime (Windows 8, 100ns resolution)

This means we should prefer the new function whenever it is available (Windows 8 and later):

![enter image description here]()
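If you want to see what resolution a clock actually delivers, one simple approach is to read it in a tight loop and record the smallest step between two different readings. The Python sketch below does this for `time.time`. Which Windows function backs that call depends on the Python version, so treat the result as an observation about your environment, not about a specific API. `smallest_observed_step` is a hypothetical helper name.

```python
import time


def smallest_observed_step(clock=time.time, samples=20):
    """Return the smallest positive increment seen between clock readings."""
    smallest = None
    for _ in range(samples):
        start = clock()
        now = clock()
        # Spin until the clock visibly advances past the starting value.
        while now <= start:
            now = clock()
        step = now - start
        if smallest is None or step < smallest:
            smallest = step
    return smallest


if __name__ == "__main__":
    step = smallest_observed_step()
    print(f"smallest observed step: {step * 1e6:.3f} microseconds")
```

On a clock that only ticks every 15ms or so, the printed step would be in the millisecond range. On a high-precision clock it is typically far smaller.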

You asked for the time

You asked for the time, but you have some choices.

The timezone:

- UTC (system native)

- Local timezone

The format:

- FILETIME (system native, 100ns resolution)

- SYSTEMTIME (decoded, 1ms resolution)

Summary

- 100ns resolution: FILETIME
    - UTC: GetSystemTimePreciseAsFileTime (or GetSystemTimeAsFileTime)
    - Local: (roll your own)
- 1ms resolution: SYSTEMTIME
    - UTC: GetSystemTime
    - Local: GetLocalTime

GetSystemTime?? – Breadboard