I'm looking for some kind of formula or algorithm to determine the brightness of a color given the RGB values. I know it can't be as simple as adding the RGB values together and having higher sums be brighter, but I'm kind of at a loss as to where to start.

The method could vary depending on your needs. Here are 3 ways to calculate Luminance:

Luminance (standard for certain colour spaces):

(0.2126*R + 0.7152*G + 0.0722*B) (source)

Luminance (perceived option 1):

(0.299*R + 0.587*G + 0.114*B) (source)

Luminance (perceived option 2, slower to calculate):

~~sqrt( 0.241*R^2 + 0.691*G^2 + 0.068*B^2 )~~ sqrt( 0.299*R^2 + 0.587*G^2 + 0.114*B^2 ) (thanks to @MatthewHerbst) (source)

[Edit: added examples using named CSS colors sorted with each method.]
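A minimal JavaScript sketch of the three formulas above, assuming R, G and B are 8-bit values (0-255) used exactly as stored, with no gamma linearization (which is what these formulas do as written). The function names and the sample colors are hypothetical, chosen only for illustration.

```javascript
// The three weightings from the answer above, applied directly to 0-255 sRGB values.
// No gamma linearization is done, so these are luma-style estimates, not true luminance.

// "Standard for certain colour spaces" (ITU-R BT.709 weights)
function luminanceBT709(r, g, b) {
  return 0.2126 * r + 0.7152 * g + 0.0722 * b;
}

// "Perceived option 1" (ITU-R BT.601 weights)
function perceivedBT601(r, g, b) {
  return 0.299 * r + 0.587 * g + 0.114 * b;
}

// "Perceived option 2" (HSP): square root of the weighted sum of squared channels
function perceivedHSP(r, g, b) {
  return Math.sqrt(0.299 * r * r + 0.587 * g * g + 0.114 * b * b);
}

// Sort [r, g, b] triples from darkest to lightest with any of the functions above.
function sortByBrightness(colors, brightness) {
  return [...colors].sort((c1, c2) => brightness(...c1) - brightness(...c2));
}

const colors = [[255, 0, 0], [0, 255, 0], [0, 0, 255], [128, 128, 128]];
console.log(sortByBrightness(colors, perceivedHSP));
```

Because the weights in each formula sum to 1, all three map a grey (R=G=B) to its own channel value.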

Comment: … 0.299*(R^2), because exponentiation goes before multiplication.

Comment: … "L*" lightness, but not quite. The struck-out version is ~5% incorrect, the M.Ransom version is ~15% incorrect, and #1 and #2 are ~40% incorrect if referenced to perceptual lightness (L*). None of these find luminance (Y), and none find perceptual brightness (Q).

Comment: (0.299*R^2.2 + 0.587*G^2.2 + 0.114*B^2.2) ^ (1/2.2).
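The variant in that comment swaps the squares and square root for a 2.2 power, a rough stand-in for the sRGB curve. A small sketch, assuming 0-255 inputs that are normalized to 0-1 first (the function name is hypothetical). The formula is homogeneous, so scaling all inputs by k scales the result by k; normalizing only changes the output scale to 0-1.

```javascript
// Gamma-2.2 variant of the HSP-style formula, inputs 0-255, output 0-1:
// (0.299*R^2.2 + 0.587*G^2.2 + 0.114*B^2.2) ^ (1/2.2)
const GAMMA = 2.2; // rough approximation of the sRGB transfer curve

function perceivedGamma22(r8, g8, b8) {
  const [r, g, b] = [r8, g8, b8].map((v) => v / 255);
  // Approximately linearize each channel, weight, and sum.
  const weighted =
    0.299 * Math.pow(r, GAMMA) + 0.587 * Math.pow(g, GAMMA) + 0.114 * Math.pow(b, GAMMA);
  // Re-encode the weighted sum with the inverse power.
  return Math.pow(weighted, 1 / GAMMA);
}

console.log(perceivedGamma22(255, 0, 0).toFixed(3));
```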

I think what you are looking for is the RGB -> Luma conversion formula.

Photometric/digital ITU BT.709:

Y = 0.2126 R + 0.7152 G + 0.0722 B

Digital ITU BT.601 (gives more weight to the R and B components):

Y = 0.299 R + 0.587 G + 0.114 B

If you are willing to trade accuracy for performance, there are two approximation formulas for this one:

Y = 0.33 R + 0.5 G + 0.16 B

Y = 0.375 R + 0.5 G + 0.125 B

These can be calculated quickly as

Y = (R+R+B+G+G+G)/6

Y = (R+R+R+B+G+G+G+G)>>3

Comment: the 1st one is (2*Red + Blue + 3*Green)/6, the 2nd one is (3*Red + Blue + 4*Green)>>3. Granted, in both quick approximations Blue has the lowest weight, but it's still there.

Comment: Y = (R<<1+R+G<<2+B)>>3 (that's only 3-4 CPU cycles on ARM), but I guess a good compiler will do that optimisation for you.

Comment: because + binds tighter than << in C, that expression actually parses as Y = (R << (1 + R + G) << (2 + B)) >> 3. The correct snippet is: int Y = ((R << 1) + R + (G << 2) + B) >> 3;
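The two integer approximations, with the shift version parenthesized as in the corrected snippet, might look like this in JavaScript. This is a sketch assuming 8-bit channel values; the function names are hypothetical. JavaScript shares C's precedence here (+ binds tighter than <<), so the explicit parentheses matter in both languages.

```javascript
// (R+R+B+G+G+G)/6 ~= 0.33 R + 0.5 G + 0.16 B, with integer (floor) division
function lumaDiv6(r, g, b) {
  return Math.floor((r + r + b + g + g + g) / 6);
}

// (R+R+R+B+G+G+G+G)>>3 ~= 0.375 R + 0.5 G + 0.125 B, written with shifts:
// (r << 1) + r is 3r, (g << 2) is 4g, and >> 3 divides by 8 (rounding down).
function lumaShift(r, g, b) {
  return ((r << 1) + r + (g << 2) + b) >> 3;
}

console.log(lumaDiv6(255, 255, 255), lumaShift(255, 255, 255)); // 255 255
```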

The "Accepted" Answer is Incorrect and Incomplete

The only answers that are accurate are the @jive-dadson and @EddingtonsMonkey answers, and in support @nils-pipenbrinck. The other answers (including the accepted) are linking to or citing sources that are either wrong, irrelevant, obsolete, or broken.

Briefly:

- sRGB must be LINEARIZED before applying the coefficients.

- Luminance (L or Y) is linearly additive as is light.

- Perceived lightness (L*) is nonlinear to light as is human perception.

- HSV and HSL are not even remotely accurate in terms of perception. (Not perceptually uniform).

- The IEC standard for sRGB specifies a threshold of 0.04045; it is NOT 0.03928 (that was from an obsolete early draft).

- To be useful (i.e. relative to perception), Euclidean distances require a perceptually uniform Cartesian vector space such as CIELAB. sRGB is not one.

What follows is a correct and complete answer:

Because this thread appears highly in search engines, I am adding this answer to clarify the various misconceptions on the subject.

Luminance is a linear measure of light, spectrally weighted for normal vision but not adjusted for the non-linear perception of lightness. It can be a relative measure, Y as in CIEXYZ, or an absolute measure, L, in cd/m² (not to be confused with L*).

Perceived lightness is used by some vision models such as CIELAB. Here L* (Lstar) is a value of perceptual lightness, and is non-linear relative to light, to approximate the human vision non-linear response curve. (That is, linear to perception but therefore non-linear to light.)

Brightness is a perceptual attribute; it does not have a "physical" measure. However, some color appearance models do have a value, usually denoted as Q for perceived brightness, which is different from perceived lightness. Note: brightness is not to be confused with luminous energy, which is also denoted with Q, but is not necessarily calculated the same as brightness for a given color appearance model.

Luma is a gamma encoded and spectrally weighted signal used in some video encodings (such as Y´I´Q´ or Y´U´V´). The prime symbol after the Y (Y´) indicates the data is gamma encoded. Luma is not to be confused with linear luminance (Y or L per above).

Weighting means the digital values for each of the red, green, or blue primary colors in a display are adjusted so that an equal value of red, green, and blue results in a neutral gray or white. This is defined in the given color space, such as BT.709. In other words, 255 blue is much darker than 255 green, in accordance with the luminous efficiency function.

Gamma or transfer curve (TRC) is a curve that is often similar to the perceptual curve, and is commonly applied to image data for storage or broadcast to reduce perceived noise and/or improve data utilization (and related reasons).

In the early days of electronic imaging, it was often referred to as Gamma Correction.

Solution: To estimate perceived lightness

(This assumes conditions close to the reference environment)

- First convert gamma-encoded R´G´B´ image values to linear luminance (Y).
  - linearize each R´G´B´ value to linear RGB
  - apply the weighting coefficients to each R, G, B value
  - sum the results to get Y

- Then convert Y to non-linear perceived lightness (L*).

TO FIND LUMINANCE:

...Because apparently it was lost somewhere...

Step One:

Convert all sRGB 8-bit integer values to decimal 0.0-1.0

```javascript
vR = sR / 255;
vG = sG / 255;
vB = sB / 255;
```

Step Two:

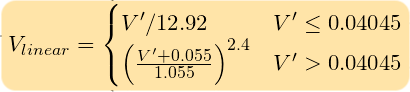

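Convert a gamma encoded RGB to a linear value. sRGB (computer standard) for instance requires a power curve of approximately V^2.2, though the "accurate" transform is:

$$
V = \begin{cases} \dfrac{V'}{12.92} & \text{if } V' \le 0.04045 \\[6pt] \left(\dfrac{V' + 0.055}{1.055}\right)^{2.4} & \text{if } V' > 0.04045 \end{cases}
$$

Where V´ (written V' above) is the gamma-encoded R, G, or B channel of sRGB, and V is the linearized value.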

Pseudocode (valid JavaScript):

```javascript
function sRGBtoLin(colorChannel) {
    // Send this function a decimal sRGB gamma encoded color value
    // between 0.0 and 1.0, and it returns a linearized value.
    if ( colorChannel <= 0.04045 ) {
        return colorChannel / 12.92;
    } else {
        return Math.pow((( colorChannel + 0.055)/1.055),2.4);
    }
}
```

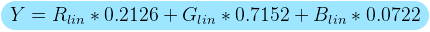

Step Three:

To find Luminance (Y) apply the standard coefficients for sRGB:

Pseudocode using above functions:

```javascript
Y = (0.2126 * sRGBtoLin(vR) + 0.7152 * sRGBtoLin(vG) + 0.0722 * sRGBtoLin(vB))
```
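Steps One to Three can be combined into a single function. This is a sketch assuming 8-bit sRGB inputs; `relativeLuminance` is a hypothetical name, and `sRGBtoLin` is the same piecewise transform as the pseudocode in Step Two.

```javascript
// Piecewise sRGB decoding: gamma-encoded 0.0-1.0 in, linear 0.0-1.0 out.
function sRGBtoLin(colorChannel) {
  return colorChannel <= 0.04045
    ? colorChannel / 12.92
    : Math.pow((colorChannel + 0.055) / 1.055, 2.4);
}

// 8-bit sRGB -> relative luminance Y in 0.0-1.0.
function relativeLuminance(sR, sG, sB) {
  // Step One: 8-bit integers to 0.0-1.0
  const vR = sR / 255;
  const vG = sG / 255;
  const vB = sB / 255;
  // Steps Two and Three: linearize, then apply the sRGB (BT.709) coefficients
  return 0.2126 * sRGBtoLin(vR) + 0.7152 * sRGBtoLin(vG) + 0.0722 * sRGBtoLin(vB);
}

console.log(relativeLuminance(255, 255, 255)); // approximately 1
console.log(relativeLuminance(0, 0, 255));     // approximately 0.0722, the blue coefficient
```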

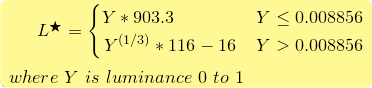

TO FIND PERCEIVED LIGHTNESS:

Step Four:

Take luminance Y from above, and transform to L*

Pseudocode (valid JavaScript):

```javascript
function YtoLstar(Y) {
    // Send this function a luminance value between 0.0 and 1.0,
    // and it returns L* which is "perceptual lightness"
    if ( Y <= (216/24389)) { // The CIE standard states 0.008856 but 216/24389 is the intent for 0.008856451679036
        return Y * (24389/27); // The CIE standard states 903.3, but 24389/27 is the intent, making 903.296296296296296
    } else {
        return Math.pow(Y,(1/3)) * 116 - 16;
    }
}
```

L* is a value from 0 (black) to 100 (white) where 50 is the perceptual "middle grey". L* = 50 is the equivalent of Y = 18.4 (on a 0 to 100 scale), or in other words an 18% grey card, representing the middle of a photographic exposure (Ansel Adams zone V).
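The L* = 50 / Y ≈ 18.4% correspondence can be checked numerically by inverting YtoLstar. Above the linear segment the inverse is Y = ((L* + 16) / 116)^3, so L* = 50 gives (66/116)^3 ≈ 0.1842. A sketch; `LstarToY` is a hypothetical helper derived from the same CIE constants, and the linear-segment boundary is L* = (216/24389)(24389/27) = 8.

```javascript
// Forward: relative luminance Y (0.0-1.0) -> L* (0-100), same constants as YtoLstar above.
function YtoLstar(Y) {
  return Y <= 216 / 24389 ? Y * (24389 / 27) : Math.cbrt(Y) * 116 - 16;
}

// Inverse: L* (0-100) -> Y (0.0-1.0). L* = 8 is where the two segments meet.
function LstarToY(Lstar) {
  return Lstar <= 8 ? Lstar * (27 / 24389) : Math.pow((Lstar + 16) / 116, 3);
}

console.log(LstarToY(50).toFixed(4)); // "0.1842", the "18% grey"
console.log(YtoLstar(0.18).toFixed(1));
```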

2023 Edit:

Additions by Myndex in an effort toward completeness:

Some of the other answers on this page show the math for luma (Yʹ, in other words "Y prime"), and luma should never be confused with relative luminance (Y). Neither one is perceptually uniform lightness or brightness.

- Luma, while it is gamma compressed and may be useful for encoding image data for later decoding, is not perceptually uniform, especially for more saturated colors. As such, luma is generally not a good metric for perceived lightness.

- Luminance is linear with respect to light, but not with respect to perception; in other words, it is not perceptually uniform, and therefore not useful for predicting lightness perception.

- Lstar (L*), in my answer above, is often used for predicting perceived lightness. It is based on Munsell Value, which is derived from experiments in a specifically defined environment using large diffuse color samples.
  - L* is not particularly context sensitive, i.e. not sensitive to things like simultaneous contrast, HK (the Helmholtz-Kohlrausch effect), or other contextual sensations.
  - Therefore, L* can only take us part way to an accurate model of perceived lightness.
  - L*, along with CIELAB, is good for determining "small differences" between two items, i.e. close to the "Just Noticeable Difference" (JND threshold).
  - L* becomes less accurate at larger supra-threshold levels and large differences, where other contextual factors begin to have a more substantial effect.
  - Supra-threshold curve shapes are different than the JND threshold curve.
  - L* puts perceptual middle grey at 18%.
  - Actual perceived middle grey depends on context.
  - Middle contrast for high spatial frequency stimuli (such as text) is often much higher than 18%.

- Contrast perception is more complicated than the difference between two lightness values; simple differences or ratios are of limited utility, and not uniform over the visual range.

Additional References:

- IEC 61966-2-1:1999 Standard
- sRGB
- CIELAB
- CIEXYZ
- Charles Poynton's Gamma FAQ

Reference links in the above text:

- Luminance
- Lightness
- Lightness vs Brightness at CIE
- Luminous energy
- Luma
- YIQ
- YUV and others in the book Video Demystified
- Rec. 709
- Luminous efficiency function
- Gamma correction

Comment: in MATLAB, Luma = rgb2gray(RGB); LAB = rgb2lab(RGB); LAB(:,:,2:3) = 0; PerceptualGray = lab2rgb(LAB);

Comment: L*a*b* does not take into account a number of psychophysical attributes. The Helmholtz-Kohlrausch effect is one, but there are many others. CIELAB is not a "full" image assessment model by any means. In my post I was trying to cover the basic concepts as completely as possible without venturing into the very deep minutiae. The Hunt model, Fairchild's models, and others do a more complete job, but are also substantially more complex.

Comment: YtoLstar(Y) takes a value in the range 0 to 1, and returns one in the range 0 to 100, which is a bit confusing.

Comment: if ( Y <= (216/24389) { is missing a )

Comment: L* is perceptual lightness 0-100, so set it at 50 for your desired effect.

Comment: … #eee… But there are other aspects of human vision, like the HK effect, that cause saturated colors to seem more luminous than the calculated luminance would suggest. For better accuracy you need a much more complicated color model. Look into OKLab.

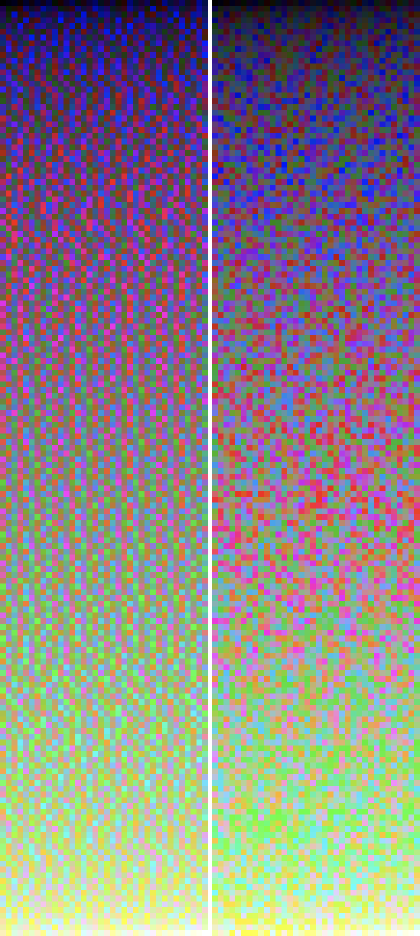

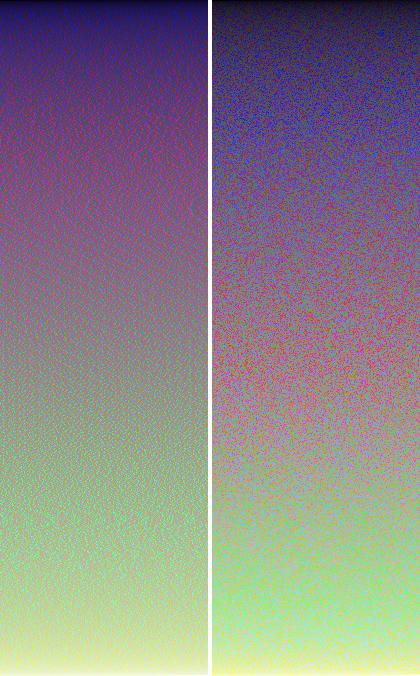

I have made a comparison of the three algorithms in the accepted answer. I generated colors in a loop where only about every 400th color was used. Each color is represented by 2x2 pixels; colors are sorted from darkest to lightest (left to right, top to bottom).

1st picture - Luminance (relative)

0.2126 * R + 0.7152 * G + 0.0722 * B

2nd picture - http://www.w3.org/TR/AERT#color-contrast

0.299 * R + 0.587 * G + 0.114 * B

3rd picture - HSP Color Model

sqrt(0.299 * R^2 + 0.587 * G^2 + 0.114 * B^2)

4th picture - WCAG 2.0 SC 1.4.3 relative luminance and contrast ratio formula (see @Synchro's answer here)

A pattern can sometimes be spotted in the 1st and 2nd pictures, depending on the number of colors in one row. I never spotted any pattern in the pictures from the 3rd or 4th algorithm.

If I had to choose, I would go with algorithm number 3, since it's much easier to implement and it's about 33% faster than the 4th.

Comment: the ^2 and sqrt included in the third formula are a quicker way of approximating linear RGB from non-linear RGB, instead of the ^2.2 and ^(1/2.2) that would be more correct. Using nonlinear inputs instead of linear ones is extremely common, unfortunately.
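To reproduce a comparison like this without images, one option is to sort the same set of colors by two formulas and count where the orderings disagree. This is a sketch, not the method used for the pictures: the grid step of 51 (six levels per channel, 216 colors) and all function names are arbitrary assumptions.

```javascript
const STEP = 51; // channel levels 0, 51, ..., 255 -> 6 * 6 * 6 = 216 colors

// 3rd picture: HSP
function hsp(r, g, b) {
  return Math.sqrt(0.299 * r * r + 0.587 * g * g + 0.114 * b * b);
}

// 4th picture: WCAG 2.0 relative luminance (sRGB linearization + BT.709 weights)
function wcagLuminance(r, g, b) {
  const lin = (c8) => {
    const c = c8 / 255;
    return c <= 0.04045 ? c / 12.92 : Math.pow((c + 0.055) / 1.055, 2.4);
  };
  return 0.2126 * lin(r) + 0.7152 * lin(g) + 0.0722 * lin(b);
}

function grid(step) {
  const colors = [];
  for (let r = 0; r <= 255; r += step)
    for (let g = 0; g <= 255; g += step)
      for (let b = 0; b <= 255; b += step) colors.push([r, g, b]);
  return colors;
}

// Darkest to lightest according to the given key (Array.prototype.sort is stable).
function sortBy(colors, key) {
  return [...colors].sort((c1, c2) => key(...c1) - key(...c2));
}

const colors = grid(STEP);
const byHsp = sortBy(colors, hsp);
const byWcag = sortBy(colors, wcagLuminance);
// Count positions where the two orderings place a different color.
const disagreements = byHsp.filter((c, i) => c.join() !== byWcag[i].join()).length;
console.log(`${colors.length} colors, ${disagreements} positions differ`);
```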

Below is the only CORRECT algorithm for converting sRGB images, as used in browsers etc., to grayscale.

It is necessary to apply an inverse of the gamma function for the color space before calculating the inner product. Then you apply the gamma function to the reduced value. Failure to incorporate the gamma function can result in errors of up to 20%.

For typical computer stuff, the color space is sRGB. The right numbers for sRGB are approx. 0.21, 0.72, 0.07. Gamma for sRGB is a composite function that approximates exponentiation by 1/(2.2). Here is the whole thing in C++.

```cpp
#include <cmath>

// sRGB luminance(Y) values
const double rY = 0.212655;
const double gY = 0.715158;
const double bY = 0.072187;

// Inverse of sRGB "gamma" function. (approx 2.2)
double inv_gam_sRGB(int ic) {
    double c = ic / 255.0;
    if (c <= 0.04045)
        return c / 12.92;
    else
        return std::pow(((c + 0.055) / (1.055)), 2.4);
}

// sRGB "gamma" function (approx 2.2)
int gam_sRGB(double v) {
    if (v <= 0.0031308)
        v *= 12.92;
    else
        v = 1.055 * std::pow(v, 1.0 / 2.4) - 0.055;
    return int(v * 255 + 0.5); // This is correct in C++. Other languages may not
                               // require +0.5
}

// GRAY VALUE ("brightness")
int gray(int r, int g, int b) {
    return gam_sRGB(
        rY * inv_gam_sRGB(r) +
        gY * inv_gam_sRGB(g) +
        bY * inv_gam_sRGB(b)
    );
}
```

Comment: the gray function performs a gamma compression afterwards, but luminance itself is the uncompressed gray value, so if you want to compute the brightness, leave out the call to gam_sRGB. If you just want to convert colors in order to display them black-and-white, then you should leave it in.

Rather than getting lost amongst the random selection of formulae mentioned here, I suggest you go for the formula recommended by W3C standards.

Here's a straightforward but exact PHP implementation of the WCAG 2.0 SC 1.4.3 relative luminance and contrast ratio formulae. It produces values that are appropriate for evaluating the ratios required for WCAG compliance, as on this page, and as such is suitable and appropriate for any web app. This is trivial to port to other languages.

```php
/**
 * Calculate relative luminance in sRGB colour space for use in WCAG 2.0 compliance
 * @link http://www.w3.org/TR/WCAG20/#relativeluminancedef
 * @param string $col A 3 or 6-digit hex colour string
 * @return float
 * @author Marcus Bointon
 */
function relativeluminance($col) {
    //Remove any leading #
    $col = trim($col, '#');
    //Convert 3-digit to 6-digit
    if (strlen($col) == 3) {
        $col = $col[0] . $col[0] . $col[1] . $col[1] . $col[2] . $col[2];
    }
    //Convert hex to 0-1 scale
    $components = array(
        'r' => hexdec(substr($col, 0, 2)) / 255,
        'g' => hexdec(substr($col, 2, 2)) / 255,
        'b' => hexdec(substr($col, 4, 2)) / 255
    );
    //Correct for sRGB
    foreach($components as $c => $v) {
        if ($v <= 0.04045) {
            $components[$c] = $v / 12.92;
        } else {
            $components[$c] = pow((($v + 0.055) / 1.055), 2.4);
        }
    }
    //Calculate relative luminance using ITU-R BT. 709 coefficients
    return ($components['r'] * 0.2126) + ($components['g'] * 0.7152) + ($components['b'] * 0.0722);
}

/**
 * Calculate contrast ratio according to WCAG 2.0 formula
 * Will return a value between 1 (no contrast) and 21 (max contrast)
 * @link http://www.w3.org/TR/WCAG20/#contrast-ratiodef
 * @param string $c1 A 3 or 6-digit hex colour string
 * @param string $c2 A 3 or 6-digit hex colour string
 * @return float
 * @author Marcus Bointon
 */
function contrastratio($c1, $c2) {
    $y1 = relativeluminance($c1);
    $y2 = relativeluminance($c2);
    //Arrange so $y1 is lightest
    if ($y1 < $y2) {
        $y3 = $y1;
        $y1 = $y2;
        $y2 = $y3;
    }
    return ($y1 + 0.05) / ($y2 + 0.05);
}
```
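For readers not using PHP, a JavaScript port of the two functions above might look like this. It is a sketch that, like the PHP version, assumes a 3- or 6-digit hex string and does no further input validation; the camelCase names are my own.

```javascript
// Port of relativeluminance(): hex colour -> WCAG 2.0 relative luminance (0-1).
function relativeLuminance(hex) {
  let col = hex.replace(/^#/, "");                                     // remove a leading #
  if (col.length === 3) col = [...col].map((ch) => ch + ch).join(""); // "abc" -> "aabbcc"
  const [r, g, b] = [0, 2, 4].map((i) => parseInt(col.slice(i, i + 2), 16) / 255);
  // Correct for sRGB, as in the PHP version
  const lin = (v) => (v <= 0.04045 ? v / 12.92 : Math.pow((v + 0.055) / 1.055, 2.4));
  return 0.2126 * lin(r) + 0.7152 * lin(g) + 0.0722 * lin(b);
}

// Port of contrastratio(): between 1 (no contrast) and 21 (max contrast).
function contrastRatio(c1, c2) {
  const y1 = relativeLuminance(c1);
  const y2 = relativeLuminance(c2);
  const lighter = Math.max(y1, y2);
  const darker = Math.min(y1, y2);
  return (lighter + 0.05) / (darker + 0.05);
}

console.log(contrastRatio("#000", "#fff").toFixed(2)); // "21.00"
console.log(contrastRatio("#777777", "#ffffff").toFixed(2));
```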

To add to what all the others said:

All these equations work kinda well in practice, but if you need to be very precise you have to first convert the color to linear color space (apply inverse image-gamma), do the weighted average of the primary colors and, if you want to display the color, take the luminance back into the monitor gamma.

The luminance difference between ignoring gamma and doing proper gamma is up to 20% in the dark grays.

Interestingly, this formulation for RGB=>HSV just uses v=MAX3(r,g,b). In other words, you can use the maximum of (r,g,b) as the V in HSV.

I checked and on page 575 of Hearn & Baker this is how they compute "Value" as well.

#FF0000 is as bright as #FFFFFF? –

Edinburgh lightness = (max(r, g, b) + min(r, g, b)) / 2 in HSL –
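The two comments can be made concrete with HSV "value" and HSL "lightness". A sketch assuming 8-bit channels scaled to 0-1 (function names are hypothetical). Neither measure weights R, G and B differently, which is why pure red and white come out equal under HSV.

```javascript
// HSV "value": the largest channel, scaled to 0-1.
function hsvValue(r, g, b) {
  return Math.max(r, g, b) / 255;
}

// HSL "lightness": midpoint of the largest and smallest channel, scaled to 0-1.
function hslLightness(r, g, b) {
  return (Math.max(r, g, b) + Math.min(r, g, b)) / 2 / 255;
}

// Pure red vs white
console.log(hsvValue(255, 0, 0), hsvValue(255, 255, 255));         // 1 1
console.log(hslLightness(255, 0, 0), hslLightness(255, 255, 255)); // 0.5 1
```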

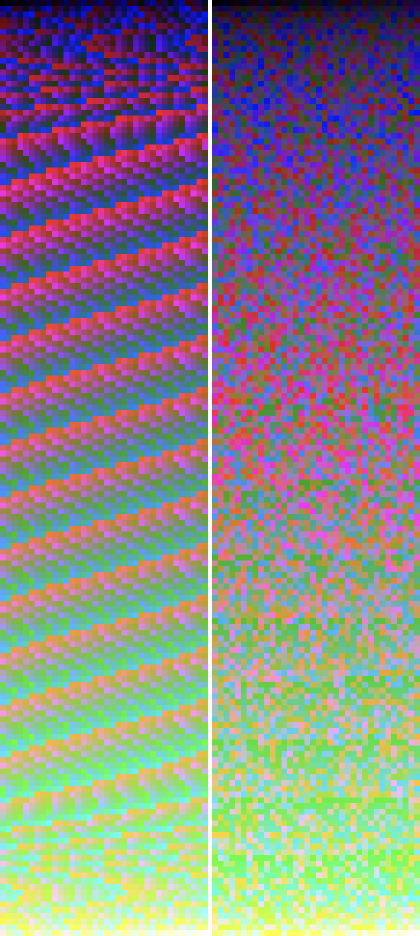

Edinburgh Consider this an addendum to Myndex's excellent answer. As he (and others) explain, the algorithms for calculating the relative luminance (and the perceptual lightness) of an RGB colour are designed to work with linear RGB values. You can't just apply them to raw sRGB values and hope to get the same results.

Well that all sounds great in theory, but I really needed to see the evidence for myself, so, inspired by Petr Hurtak's colour gradients, I went ahead and made my own. They illustrate the two most common algorithms (ITU-R Recommendation BT.601 and BT.709), and clearly demonstrate why you should do your calculations with linear values (not gamma-corrected ones).

Firstly, here are the results from the older ITU BT.601 algorithm. The one on the left uses raw sRGB values. The one on the right uses linear values.

ITU-R BT.601 colour luminance gradients

0.299 R + 0.587 G + 0.114 B

At this resolution, the left one actually looks surprisingly good! But if you look closely, you can see a few issues. At a higher resolution, unwanted artefacts are more obvious:

The linear one doesn't suffer from these, but there's quite a lot of noise there. Let's compare it to ITU-R Recommendation BT.709…

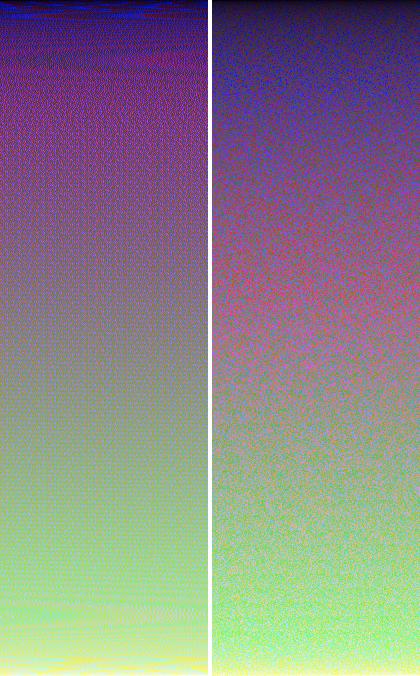

ITU-R BT.709 colour luminance gradients

0.2126 R + 0.7152 G + 0.0722 B

Oh boy. Clearly not intended to be used with raw sRGB values! And yet, that's exactly what most people do!

At high-res, you can really see how effective this algorithm is when using linear values. It doesn't have nearly as much noise as the earlier one. While none of these algorithms are perfect, this one is about as good as it gets.
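The difference between the two approaches is just whether the weights are applied before or after undoing the sRGB curve. A sketch with the BT.709 weights, assuming 8-bit inputs; the names are hypothetical. Note the linear result is relative luminance Y, so to compare it with the raw value on a perceptual footing you would still re-encode it or convert it to L*.

```javascript
// BT.709 weights applied to raw (gamma-encoded) sRGB vs linearized values, 0-1 scale.
const W709 = [0.2126, 0.7152, 0.0722];

function srgbToLinear(c) {
  return c <= 0.04045 ? c / 12.92 : Math.pow((c + 0.055) / 1.055, 2.4);
}

function weighted709(rgb8, linearize) {
  return rgb8
    .map((v) => v / 255)                            // 8-bit -> 0-1
    .map((c) => (linearize ? srgbToLinear(c) : c))  // optionally undo the sRGB curve
    .reduce((sum, c, i) => sum + W709[i] * c, 0);   // weighted sum
}

for (const rgb of [[255, 0, 0], [0, 128, 0], [0, 0, 255], [128, 128, 128]]) {
  console.log(rgb, "raw:", weighted709(rgb, false).toFixed(4), "linear:", weighted709(rgb, true).toFixed(4));
}
```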

I was solving a similar task today in JavaScript.

I've settled on this getPerceivedLightness(rgb) function for a HEX RGB color.

It deals with the Helmholtz-Kohlrausch effect via the Fairchild and Pirrotta formula for lightness correction.

```javascript
/**
 * Converts RGB color to CIE 1931 XYZ color space.
 * https://www.image-engineering.de/library/technotes/958-how-to-convert-between-srgb-and-ciexyz
 * @param {string} hex
 * @return {number[]}
 */
export function rgbToXyz(hex) {
  const [r, g, b] = hexToRgb(hex).map(_ => _ / 255).map(sRGBtoLinearRGB)
  const X = 0.4124 * r + 0.3576 * g + 0.1805 * b
  const Y = 0.2126 * r + 0.7152 * g + 0.0722 * b
  const Z = 0.0193 * r + 0.1192 * g + 0.9505 * b
  // Scale to 0-100 so the values match the D65 reference white used in xyzToLab.
  return [X, Y, Z].map(_ => _ * 100)
}

/**
 * Undoes gamma-correction from an RGB-encoded color.
 * https://en.wikipedia.org/wiki/SRGB#Specification_of_the_transformation
 * https://mcmap.net/q/20665/-formula-to-determine-perceived-brightness-of-rgb-color
 * @param {number} color
 * @return {number}
 */
function sRGBtoLinearRGB(color) {
  // Send this function a decimal sRGB gamma encoded color value
  // between 0.0 and 1.0, and it returns a linearized value.
  if (color <= 0.04045) {
    return color / 12.92
  } else {
    return Math.pow((color + 0.055) / 1.055, 2.4)
  }
}

/**
 * Converts hex color to RGB.
 * https://mcmap.net/q/9259/-rgb-to-hex-and-hex-to-rgb
 * @param {string} hex
 * @return {number[]} [rgb]
 */
function hexToRgb(hex) {
  const match = /^#?([a-f\d]{2})([a-f\d]{2})([a-f\d]{2})$/i.exec(hex)
  if (match) {
    match.shift()
    return match.map(_ => parseInt(_, 16))
  }
  // Fail loudly instead of returning undefined (which would crash later in .map)
  throw new Error(`Not a 6-digit hex color: ${hex}`)
}

// X, Y, Z of a "D65" light source.
// "D65" is a standard 6500K Daylight light source.
// https://en.wikipedia.org/wiki/Illuminant_D65
const D65 = [95.047, 100, 108.883]

/**
 * Converts CIE 1931 XYZ colors to CIE L*a*b*.
 * The conversion formula comes from <http://www.easyrgb.com/en/math.php>.
 * https://github.com/cangoektas/xyz-to-lab/blob/master/src/index.js
 * @param {number[]} color The CIE 1931 XYZ color to convert which refers to
 * the D65/2° standard illuminant.
 * @returns {number[]} The color in the CIE L*a*b* color space.
 */
export function xyzToLab([x, y, z]) {
  [x, y, z] = [x, y, z].map((v, i) => {
    v = v / D65[i]
    return v > 0.008856 ? Math.pow(v, 1 / 3) : v * 7.787 + 16 / 116
  })
  const l = 116 * y - 16
  const a = 500 * (x - y)
  const b = 200 * (y - z)
  return [l, a, b]
}

/**
 * Converts Lab color space to Lightness-Chroma-Hue color space.
 * http://www.brucelindbloom.com/index.html?Eqn_Lab_to_LCH.html
 * @param {number[]} lab
 * @return {number[]}
 */
export function labToLch([l, a, b]) {
  const c = Math.sqrt(a * a + b * b)
  const h = abToHue(a, b)
  return [l, c, h]
}

/**
 * Converts a and b of Lab color space to Hue of LCH color space.
 * https://mcmap.net/q/20773/-conversion-of-cielab-to-cielch-ab-not-yielding-correct-result
 * @param {number} a
 * @param {number} b
 * @return {number}
 */
function abToHue(a, b) {
  if (a >= 0 && b === 0) {
    return 0
  }
  if (a < 0 && b === 0) {
    return 180
  }
  if (a === 0 && b > 0) {
    return 90
  }
  if (a === 0 && b < 0) {
    return 270
  }
  let xBias
  if (a > 0 && b > 0) {
    xBias = 0
  } else if (a < 0) {
    xBias = 180
  } else if (a > 0 && b < 0) {
    xBias = 360
  }
  return radiansToDegrees(Math.atan(b / a)) + xBias
}

function radiansToDegrees(radians) {
  return radians * (180 / Math.PI)
}

function degreesToRadians(degrees) {
  return degrees * Math.PI / 180
}

/**
 * Saturated colors appear brighter to the human eye.
 * That's called the Helmholtz-Kohlrausch effect.
 * Fairchild and Pirrotta came up with a formula to
 * calculate a correction for that effect.
 * "Color Quality of Semiconductor and Conventional Light Sources":
 * https://books.google.ru/books?id=ptDJDQAAQBAJ&pg=PA45&lpg=PA45&dq=fairchild+pirrotta+correction&source=bl&ots=7gXR2MGJs7&sig=ACfU3U3uIHo0ZUdZB_Cz9F9NldKzBix0oQ&hl=ru&sa=X&ved=2ahUKEwi47LGivOvmAhUHEpoKHU_ICkIQ6AEwAXoECAkQAQ#v=onepage&q=fairchild%20pirrotta%20correction&f=false
 * @param {number[]} lch [L*, C*, h in degrees]
 * @return {number}
 */
function getLightnessUsingFairchildPirrottaCorrection([l, c, h]) {
  const l_ = 2.5 - 0.025 * l
  const g = 0.116 * Math.abs(Math.sin(degreesToRadians((h - 90) / 2))) + 0.085
  return l + l_ * g * c
}

export function getPerceivedLightness(hex) {
  return getLightnessUsingFairchildPirrottaCorrection(labToLch(xyzToLab(rgbToXyz(hex))))
}
```
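abToHue handles the quadrants by hand; Math.atan2 already does that. A shorter alternative, under the same conventions (degrees, 0 to 360, hue 0 when a = b = 0), might be the following sketch; `abToHueAtan2` is a hypothetical name, and it is meant as a drop-in for abToHue, not as part of the original answer.

```javascript
// Hue angle of (a, b) in degrees, 0 to 360, letting Math.atan2 pick the quadrant.
function abToHueAtan2(a, b) {
  const degrees = Math.atan2(b, a) * (180 / Math.PI); // -180..180
  return degrees < 0 ? degrees + 360 : degrees;        // shift negatives into 180..360
}

console.log(abToHueAtan2(0, 1), abToHueAtan2(-1, 0), abToHueAtan2(0, -1));
```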

Here's a bit of C code that should properly calculate perceived luminance.

```c
#include <math.h>

/* Hypothetical pixel type: the original answer does not show how PIXEL is defined. */
typedef struct { unsigned char r, g, b; } PIXEL;

// reverses the rgb gamma
#define inverseGamma(t) (((t) <= 0.0404482362771076) ? ((t)/12.92) : pow(((t) + 0.055)/1.055, 2.4))

//CIE L*a*b* f function (used to convert XYZ to L*a*b*) http://en.wikipedia.org/wiki/Lab_color_space
#define LABF(t) (((t) >= 8.85645167903563082e-3) ? powf((t),0.333333333333333) : (841.0/108.0)*(t) + (4.0/29.0))

float
rgbToCIEL(PIXEL p)
{
    float y;
    float r=p.r/255.0;
    float g=p.g/255.0;
    float b=p.b/255.0;

    r=inverseGamma(r);
    g=inverseGamma(g);
    b=inverseGamma(b);

    //Observer = 2°, Illuminant = D65
    y = 0.2125862307855955516*r + 0.7151703037034108499*g + 0.07220049864333622685*b;

    // At this point we've done RGBtoXYZ now do XYZ to Lab
    // y /= WHITEPOINT_Y; The white point for y in D65 is 1.0
    y = LABF(y);

    /* This is the "normal" conversion which produces values scaled to 100
       Lab.L = 116.0*y - 16.0;
    */
    return(1.16*y - 0.16); // returns L scaled to 0.0 <= L <= 1.0
}
```

As mentioned by @Nils Pipenbrinck:

All these equations work kinda well in practice, but if you need to be very precise you have to [do some extra gamma stuff]. The luminance difference between ignoring gamma and doing proper gamma is up to 20% in the dark grays.

Here's a fully self-contained JavaScript function that does the "extra" stuff to get that extra accuracy. It's based on Jive Dadson's C++ answer to this same question.

```javascript
// Returns greyscale "brightness" (0-1) of the given 0-255 RGB values
// Based on this C++ implementation: https://mcmap.net/q/20665/-formula-to-determine-perceived-brightness-of-rgb-color
function rgbBrightness(r, g, b) {
  let v = 0;
  v += 0.212655 * ((r/255) <= 0.04045 ? (r/255)/12.92 : Math.pow(((r/255)+0.055)/1.055, 2.4));
  v += 0.715158 * ((g/255) <= 0.04045 ? (g/255)/12.92 : Math.pow(((g/255)+0.055)/1.055, 2.4));
  v += 0.072187 * ((b/255) <= 0.04045 ? (b/255)/12.92 : Math.pow(((b/255)+0.055)/1.055, 2.4));
  return v <= 0.0031308 ? v*12.92 : 1.055 * Math.pow(v,1.0/2.4) - 0.055;
}
```

See Myndex's answer for a more accurate calculation.

RGB Luminance value = 0.3 R + 0.59 G + 0.11 B

http://www.scantips.com/lumin.html

If you're looking for how close to white the color is you can use Euclidean Distance from (255, 255, 255)

I think RGB color space is perceptually non-uniform with respect to the L2 Euclidean distance. Uniform spaces include CIE LAB and LUV.
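The Euclidean distance from white suggested above is a one-liner, and a couple of sample colors show the non-uniformity the comment describes: every color with one channel at 0 and the others at 255 lands at the same distance. A sketch; the function name is hypothetical.

```javascript
// Straight-line (L2) distance from white in 8-bit RGB; 0 means white.
function distanceFromWhite(r, g, b) {
  const dr = 255 - r, dg = 255 - g, db = 255 - b;
  return Math.sqrt(dr * dr + dg * dg + db * db);
}

// Yellow, magenta and cyan are all exactly 255 away from white,
// although yellow looks much lighter than the other two.
console.log(distanceFromWhite(255, 255, 0)); // 255
console.log(distanceFromWhite(255, 0, 255)); // 255
console.log(distanceFromWhite(0, 255, 255)); // 255
```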

The inverse-gamma formula by Jive Dadson needs to have the half-adjust removed when implemented in Javascript, i.e. the return from function gam_sRGB needs to be return int(v*255); not return int(v*255+.5); Half-adjust rounds up, and this can cause a value one too high on a R=G=B i.e. grey colour triad. Greyscale conversion on a R=G=B triad should produce a value equal to R; it's one proof that the formula is valid. See Nine Shades of Greyscale for the formula in action (without the half-adjust).
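Whether the half-adjust helps or hurts can be checked directly rather than argued. The sketch below ports gray() and gam_sRGB() from the C++ answer to JavaScript; the extra `round` parameter is my own addition so both rounding rules can be compared. It counts how many grey triads (R=G=B) map back to themselves under each rule; run it to see the counts for yourself.

```javascript
// Port of the C++ gray()/gam_sRGB(), with the final rounding rule passed in.
const rY = 0.212655, gY = 0.715158, bY = 0.072187;

function invGamSRGB(ic) {
  const c = ic / 255;
  return c <= 0.04045 ? c / 12.92 : Math.pow((c + 0.055) / 1.055, 2.4);
}

function gamSRGB(v, round) {
  v = v <= 0.0031308 ? v * 12.92 : 1.055 * Math.pow(v, 1 / 2.4) - 0.055;
  return round(v * 255);
}

function gray(r, g, b, round) {
  return gamSRGB(rY * invGamSRGB(r) + gY * invGamSRGB(g) + bY * invGamSRGB(b), round);
}

const halfAdjust = (x) => Math.floor(x + 0.5); // like int(v*255+0.5) for v >= 0
const truncate = (x) => Math.trunc(x);         // like int(v*255)

for (const [name, round] of [["half-adjust", halfAdjust], ["truncate", truncate]]) {
  let unchanged = 0;
  for (let r = 0; r <= 255; r++) if (gray(r, r, r, round) === r) unchanged++;
  console.log(`${name}: ${unchanged} of 256 grey levels map back to themselves`);
}
```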

I wonder how those rgb coefficients were determined. I did an experiment myself and I ended up with the following:

Y = 0.267 R + 0.642 G + 0.091 B

Close, but obviously different from the long-established ITU coefficients. I wonder if those coefficients could be different for each and every observer, because we may all have a different number of cones and rods on the retina, and especially the ratio between the different types of cones may differ.

For reference:

ITU BT.709:

Y = 0.2126 R + 0.7152 G + 0.0722 B

ITU BT.601:

Y = 0.299 R + 0.587 G + 0.114 B

I did the test by quickly moving a small gray bar on a bright red, bright green and bright blue background, and adjusting the gray until it blended in as much as possible. I also repeated that test with other shades. I repeated the test on different displays, even one with a fixed gamma factor of 3.0, but it all looks the same to me. Moreover, the ITU coefficients literally are wrong for my eyes.

And yes, I presumably have a normal color vision.

The answer from Myndex coded in Java:

```java
public static double calculateRelativeLuminance(Color color)
{
    double red = color.getRed() / 255.0;
    double green = color.getGreen() / 255.0;
    double blue = color.getBlue() / 255.0;

    double r = (red <= 0.04045) ? red / 12.92 : Math.pow((red + 0.055) / 1.055, 2.4);
    double g = (green <= 0.04045) ? green / 12.92 : Math.pow((green + 0.055) / 1.055, 2.4);
    double b = (blue <= 0.04045) ? blue / 12.92 : Math.pow((blue + 0.055) / 1.055, 2.4);

    return 0.2126 * r + 0.7152 * g + 0.0722 * b;
}
```

I used it to calculate the contrast ratio of the background color and determine if the text color would be bright or not. Full example:

```java
import java.awt.Color;

// Class name is hypothetical; the original answer showed only the methods.
public final class ColorContrast
{
    // Returns true when white text contrasts more than black text against
    // backgroundColor, i.e. when the text color should be bright.
    public static boolean isBright(Color backgroundColor)
    {
        double backgroundLuminance = calculateRelativeLuminance(backgroundColor);
        double whiteContrastRatio = calculateContrastRatio(backgroundLuminance, 1.0);
        double blackContrastRatio = calculateContrastRatio(backgroundLuminance, 0.0);
        return whiteContrastRatio > blackContrastRatio;
    }

    public static double calculateRelativeLuminance(Color color)
    {
        double red = color.getRed() / 255.0;
        double green = color.getGreen() / 255.0;
        double blue = color.getBlue() / 255.0;

        double r = (red <= 0.04045) ? red / 12.92 : Math.pow((red + 0.055) / 1.055, 2.4);
        double g = (green <= 0.04045) ? green / 12.92 : Math.pow((green + 0.055) / 1.055, 2.4);
        double b = (blue <= 0.04045) ? blue / 12.92 : Math.pow((blue + 0.055) / 1.055, 2.4);

        return 0.2126 * r + 0.7152 * g + 0.0722 * b;
    }

    public static double calculateContrastRatio(double backgroundLuminance, double textLuminance)
    {
        double brightest = Math.max(backgroundLuminance, textLuminance);
        double darkest = Math.min(backgroundLuminance, textLuminance);
        return (brightest + 0.05) / (darkest + 0.05);
    }
}
```
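Because both contrast ratios use the same +0.05 offsets, the isBright decision reduces to a single luminance threshold. White and black text tie when 1.05 / (Y + 0.05) = (Y + 0.05) / 0.05, i.e. (Y + 0.05)² = 1.05 × 0.05, so Y = √0.0525 - 0.05 ≈ 0.179. A JavaScript sketch of both forms (names are hypothetical; the inputs are relative luminances, as returned by calculateRelativeLuminance):

```javascript
// Background luminance at which white and black text have equal WCAG contrast.
const THRESHOLD = Math.sqrt(1.05 * 0.05) - 0.05; // about 0.179

function contrastRatio(y1, y2) {
  return (Math.max(y1, y2) + 0.05) / (Math.min(y1, y2) + 0.05);
}

// Same decision as isBright() above: true when white text contrasts more than black text.
function prefersWhiteText(backgroundLuminance) {
  return contrastRatio(backgroundLuminance, 1.0) > contrastRatio(backgroundLuminance, 0.0);
}

// Equivalent shortcut: compare against the precomputed threshold.
function prefersWhiteTextShortcut(backgroundLuminance) {
  return backgroundLuminance < THRESHOLD;
}

console.log(THRESHOLD.toFixed(3)); // "0.179"
```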

The HSV color space should do the trick; see the Wikipedia article. Depending on the language you're working in, you may get a library conversion.

H is hue which is a numerical value for the color (i.e. red, green...)

S is the saturation of the color, i.e. how 'intense' it is

V is the 'brightness' of the color.

A single line:

brightness = 0.2*r + 0.7*g + 0.1*b

When r,g,b values are between 0 to 255, then the brightness scale is also between 0 (black) to 255 (white).

It can be fine-tuned of course, but I've found it to be sufficient for most use cases.

The 'V' of HSV is probably what you're looking for. MATLAB has an rgb2hsv function and the previously cited Wikipedia article is full of pseudocode. If an RGB2HSV conversion is not feasible, a less accurate model would be the grayscale version of the image.

To determine the brightness of a color in R, I convert the RGB color to an HSV color.

In my script I start from HEX codes for other reasons, but you can also start from RGB values with rgb2hsv {grDevices} (see its documentation).

Here is this part of my code:

```r
sample <- c("#010101", "#303030", "#A6A4A4", "#020202", "#010100")
hsvc <- rgb2hsv(col2rgb(sample)) # convert HEX to RGB, then HSV (a 3 x n matrix with rows h, s, v)
value <- hsvc["v", ]             # extract the information of brightness (the V row)
order(value)                     # order the colors by brightness (indices, darkest first)
sample[order(value)]             # the colors themselves, darkest first
```

For clarity, the formulas that use a square root need to be

sqrt(coefficient * (colour_value^2))

not

sqrt((coefficient * colour_value))^2

The proof of this lies in the conversion of a R=G=B triad to greyscale R. That will only be true if you square the colour value, not the colour value times coefficient. See Nine Shades of Greyscale

Please define brightness. If you're looking for how close to white the color is you can use Euclidean Distance from (255, 255, 255)
