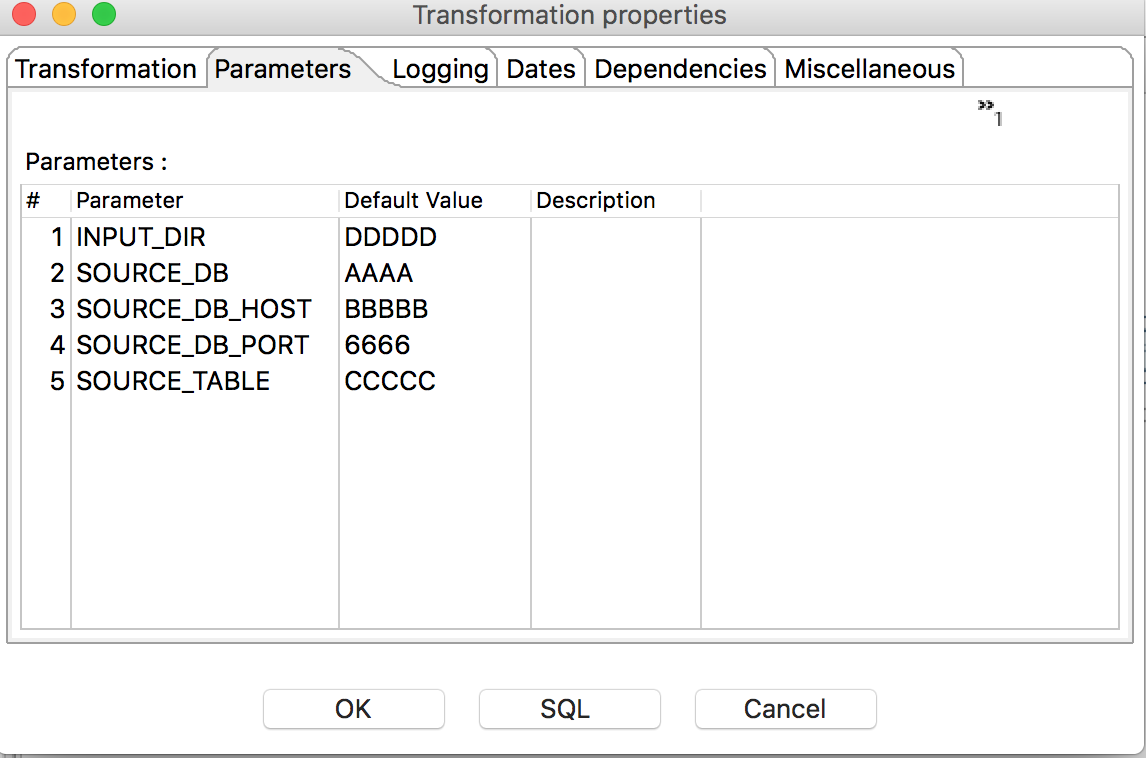

I am required to parameterize all variables in my Kettle job and its transformation (the jobs will run in AWS, and all parameters are passed in as environment variables).

My connections, paths and various other parameters in the job and its attendant transformation use the ${SOURCE_DB_PASSWORD}, ${OUTPUT_DIRECTORY} style.

When I set these as environment variables in the Data Integration UI, they all work and the job runs successfully in the UI tool. However, when I run the job from a shell script:

    #!/bin/sh

    export SOURCE_DB_HOST=services.db.dev
    export SOURCE_DB_PORT=3306

    kitchen.sh -param:SOURCE_DB_PORT=$SOURCE_DB_PORT -param:SOURCE_DB_HOST=$SOURCE_DB_HOST -file MY_JOB.kjb

The job and the transformation it calls do not pick up the variables. The error is:

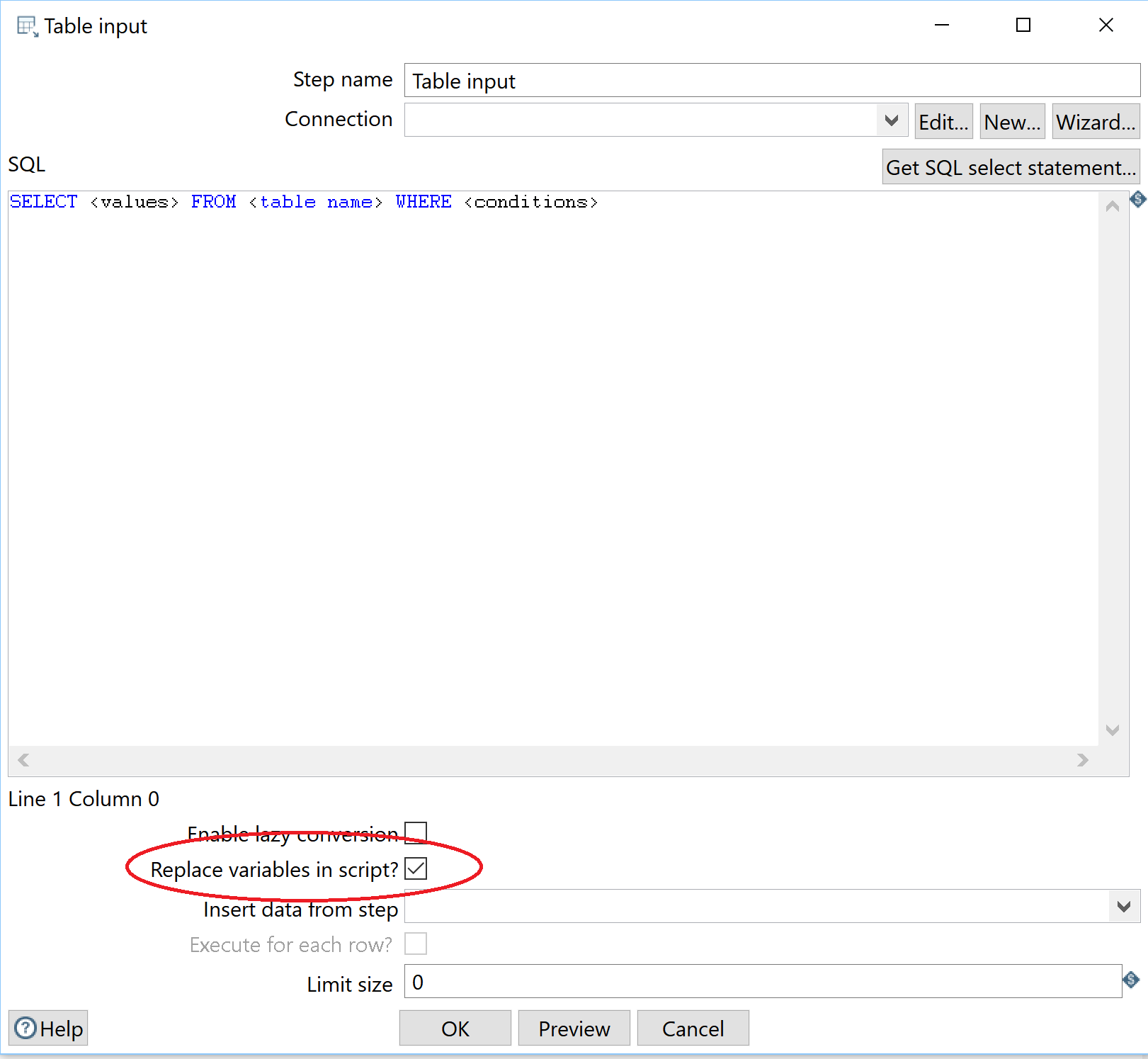

Cannot load connection class because of underlying exception: 'java.lang.NumberFormatException: For input string: "${SOURCE_DB_PORT}"'

So, without using JNDI files or kettle.properties, I need some way of mapping environment variables to parameters/variables inside PDI jobs and transformations.
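
One possible direction, as an untested sketch rather than a confirmed fix: forward the environment variables to the JVM as `-D` system properties instead of `-param` arguments. This rests on two assumptions that are not verified here for PDI 8.1: that the launcher script behind kitchen.sh adds the contents of `PENTAHO_DI_JAVA_OPTIONS` to the Java command line, and that Kettle seeds its variables from Java system properties. The helper name `build_java_opts` is hypothetical and not part of PDI.

```shell
#!/bin/sh
# Hypothetical helper (not part of PDI): turn the named environment
# variables into -DNAME=VALUE JVM flags, space-separated on one line.
# Limitation: values containing whitespace are not supported, because the
# resulting string is word-split when it is later used as JVM arguments.
build_java_opts() {
  opts=""
  for name in "$@"; do
    # Indirect lookup of the variable called $name (assumed to be a plain
    # identifier such as SOURCE_DB_HOST).
    eval "value=\${$name-}"
    if [ -z "$value" ]; then
      echo "warning: $name is unset or empty, skipping" >&2
      continue
    fi
    opts="${opts:+$opts }-D$name=$value"
  done
  printf '%s\n' "$opts"
}

export SOURCE_DB_HOST=services.db.dev
export SOURCE_DB_PORT=3306

# Assumption: the launcher reads PENTAHO_DI_JAVA_OPTIONS. Setting it may
# replace the default heap flags, so those may need to be repeated here.
PENTAHO_DI_JAVA_OPTIONS=$(build_java_opts SOURCE_DB_HOST SOURCE_DB_PORT)
export PENTAHO_DI_JAVA_OPTIONS

# The job would then be started without -param arguments, for example:
# kitchen.sh -file MY_JOB.kjb
```

Note that `-D` flags appear in process listings, so this approach may not suit secrets such as SOURCE_DB_PASSWORD.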

[PDI version 8.1 on Mac OS X 10.13]