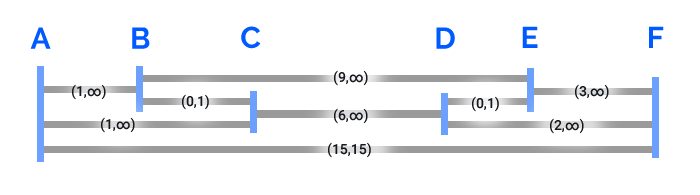

I have a system where I need to calculate the possible range of values for each variable (I don't need to find a solution to the system). Here is an illustration of an example system:

Each blue line is a node (named by the label above it), and the gray lines are the edges between the nodes. There is an initial constraint given: for example, edge BC can be any real number between 0 and 1 inclusive, and edge BE can be any number greater than or equal to 9. The nodes can't cross over other nodes. It's helpful to imagine them as metal bars that can extend and retract, pushing the blue plates around.

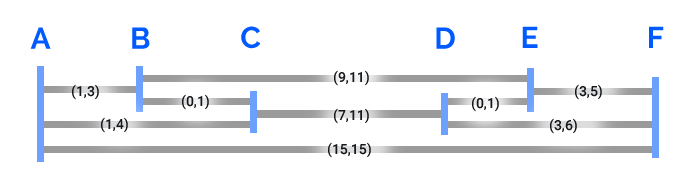

My goal is to calculate for each edge the minimum and maximum length they can be. The starting constraints set up the system, but the end result could be more constrained than them. For example, edge DF has a starting min/max of (2,∞), but you can see that it can't actually be shorter than 3, since contracting it pulls D into E, and E towards F, and EF has a minimum of 3. The end result would be this, I believe:

The caveat is I need an algorithm that can scale up to hundreds of thousands of nodes, which would be more densely connected than this example. It can't exponentially increase in running time, since I also have to run this algorithm hundreds of thousands of times.

I tried a method that gave some more constrained values, but not the most constrained values. To visualize the method, I essentially pulled all the plates over to the left as far as possible, then recorded each plates maximum position. Then I did the same, pulling them over to the right. Then, for each edge, I just find the difference between each plate's values. This method finds the maximum values very efficiently, but the problem is when you have a situation like in this example, where CD is sort of "locked" to BE by BC and DE. It can't be 6, since the system only allows it to be 2 shorter than 9. I need a way that finds the true min value of 7. My method doesn't capture that "locking" relationship: when you pull C all the way over to the left, BC is 0, and when you pull D all the way over to the right, DE is 0, but they can't both be 0 if CD is 6.

In this example, it's simple to see that CD is constrained by BE, but in practice, the system would be much denser and larger than the example, and finding such situations seems non-trivial. If the method involves searching around it locally, I'm worried it will scale poorly, but that may be the only way.

If this is modeled as a linear programming problem (AB + BC = AC, AB>1, etc), maybe there are some attributes of this system that could be taken advantage of: the fact that all the coefficients of the constraints are 1, and that there is only addition and subtraction in the constraints.

Does anyone have any suggestions on how to approach this problem? Or have any insights into what kind of run time complexity I should realistically hope for? Thanks!