I am newbie to Machine Learning in general. I am currently trying to follow a tutorial on sentiment analysis using BERT and Transformers https://curiousily.com/posts/sentiment-analysis-with-bert-and-hugging-face-using-pytorch-and-python/

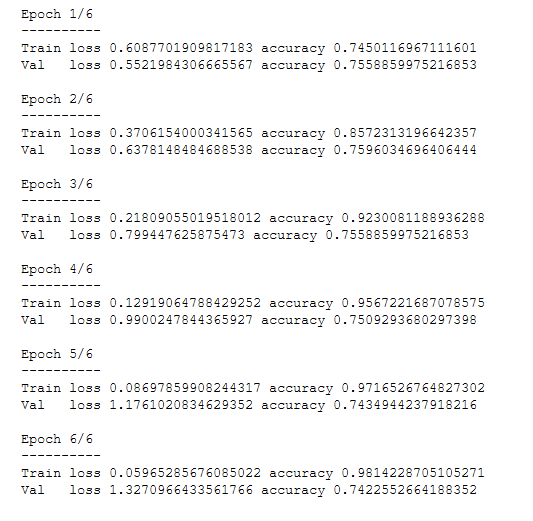

However when I train the model it has appeared that the model is overfitting

I do not know how to fix this. I have tried lowering amount of epochs, increasing batch size , shuffling my data (which is ordered) and increasing the validation split. So far nothing has worked. I have even tried changing different learning rate but the one I am using now is the smallest.

Below is my code:

PRE_TRAINED_MODEL_NAME = 'TurkuNLP/bert-base-finnish-cased-v1'

tokenizer = BertTokenizer.from_pretrained(PRE_TRAINED_MODEL_NAME)

MAX_LEN = 40

#Make a PyTorch dataset

class FIDataset(Dataset):

def __init__(self, texts, targets, tokenizer, max_len):

self.texts = texts

self.targets = targets

self.tokenizer = tokenizer

self.max_len = max_len

def __len__(self):

return len(self.texts)

def __getitem__(self, item):

text = str(self.texts[item])

target = self.targets[item]

encoding = self.tokenizer.encode_plus(

text,

add_special_tokens=True,

max_length=self.max_len,

return_token_type_ids=False,

pad_to_max_length=True,

return_attention_mask=True,

return_tensors='pt',

)

return {

'text': text,

'input_ids': encoding['input_ids'].flatten(),

'attention_mask': encoding['attention_mask'].flatten(),

'targets': torch.tensor(target, dtype=torch.long)

}

#split test and train

df_train, df_test = train_test_split(

df,

test_size=0.1,

random_state=RANDOM_SEED

)

df_val, df_test = train_test_split(

df_test,

test_size=0.5,

random_state=RANDOM_SEED

)

#data loader function

def create_data_loader(df, tokenizer, max_len, batch_size):

ds = FIDataset(

texts=df.content.to_numpy(),

targets=df.sentiment.to_numpy(),

tokenizer=tokenizer,

max_len=max_len

)

return DataLoader(

ds,

batch_size=batch_size,

num_workers=4

)

BATCH_SIZE = 32

#Load data into train, test, val

train_data_loader = create_data_loader(df_train, tokenizer, MAX_LEN, BATCH_SIZE)

val_data_loader = create_data_loader(df_val, tokenizer, MAX_LEN, BATCH_SIZE)

test_data_loader = create_data_loader(df_test, tokenizer, MAX_LEN, BATCH_SIZE)

#Bert model loading

bert_model = BertModel.from_pretrained(PRE_TRAINED_MODEL_NAME)

# Sentiment Classifier based on Bert model just loaded

class SentimentClassifier(nn.Module):

def __init__(self, n_classes):

super(SentimentClassifier, self).__init__()

self.bert = BertModel.from_pretrained(PRE_TRAINED_MODEL_NAME)

self.drop = nn.Dropout(p=0.1)

self.out = nn.Linear(self.bert.config.hidden_size, n_classes)

def forward(self, input_ids, attention_mask):

returned = self.bert(

input_ids=input_ids,

attention_mask=attention_mask

)

pooled_output = returned["pooler_output"]

output = self.drop(pooled_output)

return self.out(output)

#Create a Classifier instance and move to GPU

model = SentimentClassifier(3)

model = model.to(device)

#Optimize with AdamW

EPOCHS = 6

optimizer = AdamW(model.parameters(), lr=2e-5, correct_bias=False)

total_steps = len(train_data_loader) * EPOCHS

scheduler = get_linear_schedule_with_warmup(

optimizer,

num_warmup_steps=0,

num_training_steps=total_steps

)

loss_fn = nn.CrossEntropyLoss().to(device)

#Train each Epoch function

def train_epoch(

model,

data_loader,

loss_fn,

optimizer,

device,

scheduler,

n_examples

):

model = model.train()

losses = []

correct_predictions = 0

for d in data_loader:

input_ids = d["input_ids"].to(device)

attention_mask = d["attention_mask"].to(device)

targets = d["targets"].to(device)

outputs = model(

input_ids=input_ids,

attention_mask=attention_mask

)

_, preds = torch.max(outputs, dim=1)

loss = loss_fn(outputs, targets)

correct_predictions += torch.sum(preds == targets)

losses.append(loss.item())

loss.backward()

nn.utils.clip_grad_norm_(model.parameters(), max_norm=1.0)

optimizer.step()

scheduler.step()

optimizer.zero_grad()

return correct_predictions.double() / n_examples, np.mean(losses)

import torch

history = defaultdict(list)

best_accuracy = 0

if __name__ == '__main__':

for epoch in range(EPOCHS):

print(f'Epoch {epoch + 1}/{EPOCHS}')

print('-' * 10)

train_acc, train_loss = train_epoch(

model,

train_data_loader,

loss_fn,

optimizer,

device,

scheduler,

len(df_train)

)

print(f'Train loss {train_loss} accuracy {train_acc}')

val_acc, val_loss = eval_model(

model,

val_data_loader,

loss_fn,

device,

len(df_val)

)

print(f'Val loss {val_loss} accuracy {val_acc}')

print()

history['train_acc'].append(train_acc)

history['train_loss'].append(train_loss)

history['val_acc'].append(val_acc)

history['val_loss'].append(val_loss)

if val_acc > best_accuracy:

torch.save(model.state_dict(), 'best_model_state.bin')

best_accuracy = val_acc