As a result of my answer to this question, I started reading about the keyword volatile and what the consensus is regarding it. There is a lot of information about it: some of it is old and seems wrong now, and much of the newer material says volatile has almost no place in multi-threaded programming. Hence, I'd like to clarify one specific usage (I couldn't find an exact answer here on SO).

I also want to point out that I do understand the requirements for writing multi-threaded code in general and why volatile does not solve them. Still, I see volatile used for thread control in code bases I work in. Further, this is the only case in which I use the volatile keyword, as all other shared resources are properly synchronized.

Say we have a class like:

    class SomeWorker
    {
    public:
        SomeWorker() : isRunning_(false) {}
        void start() { isRunning_ = true; /* spawns thread and calls run */ }
        void stop() { isRunning_ = false; }

    private:
        void run()
        {
            while (isRunning_)
            {
                // do something
            }
        }

        volatile bool isRunning_;
    };

For simplicity some things are left out, but the essential thing is that an object is created which does something in a newly spawned thread checking a (volatile) boolean to know if it should stop. This boolean value is set from another thread whenever it wants the worker to stop.

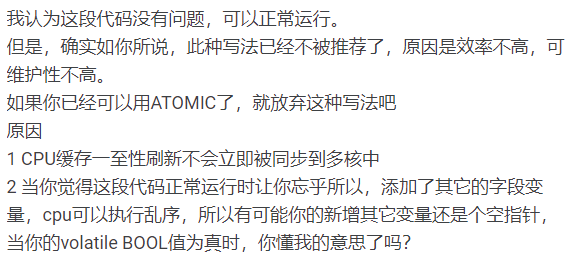

My understanding has been that the reason to use volatile in this specific case is simply to prevent an optimization that would cache the flag in a register for the duration of the loop, which would result in an infinite loop. Is it true that there is no need to properly synchronize things, because the worker thread will eventually get the new value?
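To make the optimization I have in mind concrete, here is a rough sketch of it, written out by hand. `PlainWorker`, `runAsWritten`, `runAsIfHoisted`, `iterations_` and `iterationsWhenStopped` are hypothetical names used only for illustration, and whether a given compiler actually performs this transformation depends on what the loop body contains.

```cpp
#include <cassert>

// Hypothetical stand-in for SomeWorker with a plain (non-volatile, non-atomic) flag.
struct PlainWorker
{
    bool isRunning_ = false;
    unsigned long iterations_ = 0;  // stand-in for "do something"

    // The loop as it appears in the source code.
    void runAsWritten()
    {
        while (isRunning_)
        {
            ++iterations_;
        }
    }

    // What an optimizer may turn it into: nothing in the loop body writes
    // isRunning_, and a concurrent write from another thread would be a data
    // race, so a single load before the loop is a permitted transformation.
    void runAsIfHoisted()
    {
        const bool cached = isRunning_;  // read once, e.g. into a register
        while (cached)                   // never re-reads the member
        {
            ++iterations_;
        }
    }
};

// Single-threaded check: with the flag already false, both forms return at once.
// With the flag true, the second form could never observe a later stop().
inline unsigned long iterationsWhenStopped()
{
    PlainWorker w;
    w.runAsWritten();
    w.runAsIfHoisted();
    assert(w.iterations_ == 0);
    return w.iterations_;
}
```

Declaring the flag volatile forces the read to happen on every iteration, which is the only property I am relying on here.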

I'd like to understand whether this is considered completely wrong and whether the right approach is to use a synchronized variable. Does the answer differ between compilers, architectures, or numbers of cores? Or is it just a sloppy approach worth avoiding?

I'd be happy if someone would clarify this. Thanks!

EDIT

I'd be interested to see (in code) how you choose to solve this.

`stop()` then `start()` in quick succession may result in more than one thread running at the same time. Whether that's a bug or not is a design question. – Fermata

[…] `int` or a pointer? Suddenly you're in trouble. If you stick to atomics as a matter of course, you'll be in the right concurrent mindset from the start. – Nullipore

[…] `volatile` does and with examples for exactly this situation: – Kick

[…] `volatile` in practice, because accesses are required to compile to a load or store in the asm (which is sufficient because CPUs have coherent caches between the cores that std::thread starts threads across). You don't get any ordering, but this code doesn't depend on that (and wouldn't give any useful sync with SC atomics). The well-defined way to do this would be `std::atomic<bool>` with `memory_order_relaxed`. `volatile` is obsolete for this but does work fine. – Loving

[…] `std::atomic<bool>` with `memory_order_relaxed`. (Just a load or store, no barriers). It could only break if a compiler disregarded `volatile` and hoisted a load out of the loop, not actually re-checking it every iteration. The reader will definitely see a value stored by another core after a few tens of nanoseconds, thanks to cache coherency. – Loving

[…] `atomic<T>` load or store with `memory_order_relaxed` can compile without any extra barrier instructions. (The same as `volatile`.) Out-of-order exec might run loads early relative to other code, but the OoO exec window is small so this won't stop a core from noticing an `exit_now` variable becoming `true` for a meaningful time. – Loving

[…] `seq_cst` or `release`/`acquire` if you want that), there's no reason to make a thread wait for anything before running a load instruction for a `relaxed` load. It does sample from cache at a nearby time to when it appears in program order, which is fine. – Loving

[…] `std::atomic<long>.load(relaxed)` into just a load instruction with no barriers or anything, same as you'd get from `volatile`, so GCC thinks a pure load is sufficient to give the "reasonable time" visibility guarantee ISO C++ says implementations "should" provide. – Loving

[…] `relaxed` order concerns ordering, not time. And it says that `relaxed` has no guarantees with respect to order, only that the accesses must be "indivisible". Accessing a properly aligned integral type on a Sparc/Intel/whatever will be "indivisible", provided the integral type isn't larger than a machine word (64 bits on modern processors). There's nothing in the standard to say when this indivisible access will occur. – Spaak

[…] `memcpy` with the pointers and counter in registers will actually only read and write words (although it will still do the loop on the number of bytes). A side effect is that if you're constantly reading a value from a single word, and not reading anything else in the meantime, the processor, having read the word, may simply acquire the value from the word already read... – Spaak
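For reference, a minimal sketch of the `std::atomic<bool>` with `memory_order_relaxed` variant mentioned in the comments above might look like the following. It assumes C++11 `std::thread`, and it assumes `start()` and `stop()` are only ever called from one controlling thread. The `iterations_` counter, the `iterations()` accessor, the destructor, and the `runOnce` driver are hypothetical additions for illustration and are not part of the original class. Joining in `stop()` is one possible way to address the point about `stop()` followed quickly by `start()`.

```cpp
#include <atomic>
#include <cassert>
#include <thread>

class SomeWorker
{
public:
    SomeWorker() : isRunning_(false), iterations_(0) {}
    ~SomeWorker() { stop(); }  // never destroy a joinable std::thread

    void start()
    {
        if (thread_.joinable()) return;  // already running: do not spawn a second thread
        isRunning_.store(true, std::memory_order_relaxed);
        thread_ = std::thread(&SomeWorker::run, this);
    }

    void stop()
    {
        isRunning_.store(false, std::memory_order_relaxed);
        if (thread_.joinable()) thread_.join();  // wait until run() has returned
    }

    // Hypothetical accessor: number of loop iterations executed so far.
    unsigned long iterations() const
    {
        return iterations_.load(std::memory_order_relaxed);
    }

private:
    void run()
    {
        // Relaxed load: re-read on every iteration, with no ordering implied.
        while (isRunning_.load(std::memory_order_relaxed))
        {
            iterations_.fetch_add(1, std::memory_order_relaxed);  // stand-in for "do something"
            std::this_thread::yield();
        }
    }

    std::atomic<bool> isRunning_;
    std::atomic<unsigned long> iterations_;
    std::thread thread_;
};

// Hypothetical driver: start, wait for visible progress, then stop.
inline unsigned long runOnce()
{
    SomeWorker worker;
    worker.start();
    while (worker.iterations() == 0)  // wait until the loop has demonstrably run
        std::this_thread::yield();
    worker.stop();                    // returns only after run() has exited
    const unsigned long n = worker.iterations();
    assert(n > 0);
    return n;
}
```

The relaxed operations only keep the flag itself well-defined and re-read on each iteration; any data shared between the threads beyond the flag would still need its own synchronization.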