I'm trying to reproduce this blog post on overfitting. I want to explore how a spline compares to the tested polynomials.

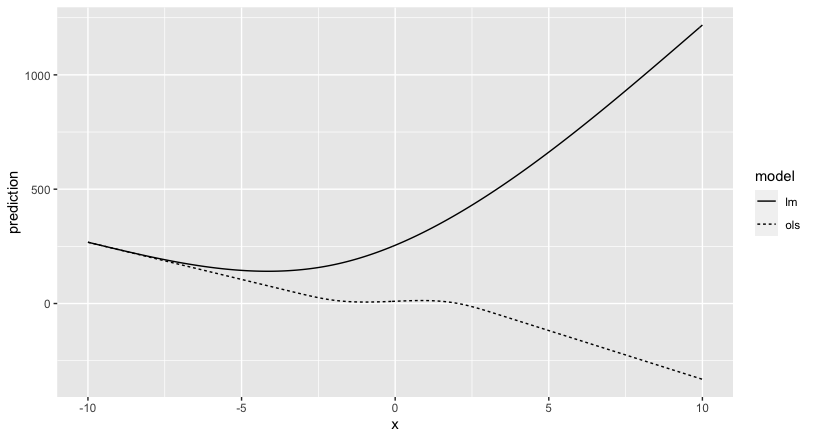

My problem: when I use `rcs()` (restricted cubic splines) from the rms package inside a regular `lm()` fit, I get very strange predictions. `ols()` works fine, but I'm a little surprised by this behavior. Can someone explain what's happening?

```r
library(rms)
library(splines)  # provides ns()

# True 4th-degree polynomial signal plus noise on days 1..100
p4 <- poly(1:100, degree=4)
true4 <- p4 %*% c(1,2,-6,9)
days <- 1:70
noise4 <- true4 + rnorm(100, sd=.5)

# Fit each model on the first 70 days only
reg.n4.4 <- lm(noise4[1:70] ~ poly(days, 4))
reg.n4.4ns <- lm(noise4[1:70] ~ ns(days,5))
reg.n4.4rcs <- lm(noise4[1:70] ~ rcs(days,5))

dd <- datadist(noise4[1:70], days)
options("datadist" = "dd")
reg.n4.4rcs_ols <- ols(noise4[1:70] ~ rcs(days,5))

# Predict (and extrapolate) every fit over days 1..100
plot(1:100, noise4)
nd <- data.frame(days=1:100)
lines(1:100, predict(reg.n4.4, newdata=nd), col="orange", lwd=3)
lines(1:100, predict(reg.n4.4ns, newdata=nd), col="red", lwd=3)
lines(1:100, predict(reg.n4.4rcs, newdata=nd), col="darkblue", lwd=3)
lines(1:100, predict(reg.n4.4rcs_ols, newdata=nd), col="grey", lwd=3)
legend("top", fill=c("orange", "red", "darkblue", "grey"),
       legend=c("Poly", "Natural splines", "RCS - lm", "RCS - ols"))
```

As you can see, the dark blue line is all over the place...
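A likely explanation, assuming your rms version does not register a safe-prediction method for `rcs()`: `predict.lm()` re-evaluates `rcs(days, 5)` on `newdata`, and `rcs()` picks its knots from quantiles of whatever `x` it receives. The 70 training days and the 100 prediction days therefore get different knots, and the fitted coefficients are applied to a different basis. `ns()` does not have this problem because the splines package provides a `makepredictcall()` method that stores the fitted knots in the model's `predvars`. The sketch below illustrates both points; the response `y` is a hypothetical stand-in used only to obtain an `ns()` fit.

```r
library(rms)
library(splines)

# rcs() computes knots from the data it is handed, so these two differ
knots_train <- attr(rcs(1:70, 5), "parms")
knots_pred  <- attr(rcs(1:100, 5), "parms")
print(rbind(train = knots_train, predict = knots_pred))

# For ns(), lm() records the fitted knots in the "predvars" attribute,
# so predict() rebuilds exactly the same basis on new data
days <- 1:70
y <- sin(days / 10)  # hypothetical response, only used to get a fit
fit_ns <- lm(y ~ ns(days, 5))
print(attr(terms(fit_ns), "predvars"))
```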

`rcs` is not designed to work with `lm`; why do you expect it to? – Otherworld
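If you still want to use `lm()`, one workaround (a sketch, not an official rms recipe) is to compute the knots once from the training days and pass that knot vector, rather than the number of knots, to `rcs()`. When `predict()` re-evaluates the formula on new data, it then rebuilds the same basis. The data-generating lines are taken from the question; `train`, `kn`, `fit_lm` and `fit_ols` are hypothetical names introduced here.

```r
library(rms)

p4 <- poly(1:100, degree = 4)
true4 <- p4 %*% c(1, 2, -6, 9)
noise4 <- as.vector(true4 + rnorm(100, sd = 0.5))

train <- data.frame(days = 1:70, y = noise4[1:70])
nd <- data.frame(days = 1:100)

# Fix the knots once, using the training days only
kn <- attr(rcs(train$days, 5), "parms")

# A knot vector (not a knot count) keeps the basis identical at predict time
fit_lm <- lm(y ~ rcs(days, kn), data = train)

dd <- datadist(train)
options(datadist = "dd")
fit_ols <- ols(y ~ rcs(days, 5), data = train)

pred_lm  <- predict(fit_lm, newdata = nd)
pred_ols <- predict(fit_ols, newdata = nd)
```

Because `ols()` stores the knots chosen at fit time and reuses them in prediction, the two sets of predictions should agree; that bookkeeping is what the grey line gets for free.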