The problem is that sparklyr doesn't correctly support Spark DateType. It is possible to parse dates and convert them to the correct format, but not to represent them as proper DateType columns. If that's enough, follow the instructions below.

In Spark 2.2 or later, use `to_date` with a Java `SimpleDateFormat`-compatible format string:

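```r
library(sparklyr)
library(dplyr)
sc <- spark_connect(master = "local")  # assumes a local Spark installation

df <- copy_to(sc, data.frame(date = c("01/15/2010")))

# to_date() is not an R function: dplyr passes it through to Spark SQL
parsed <- df %>% mutate(date_parsed = to_date(date, "MM/dd/yyyy"))
parsed
```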

```
# Source:   lazy query [?? x 2]
# Database: spark_connection
        date date_parsed
       <chr>       <chr>
1 01/15/2010  2010-01-15
```

Interestingly, the underlying Spark object still uses a DateType column:

```r
parsed %>% spark_dataframe()
```

```
<jobj[120]>
  class org.apache.spark.sql.Dataset
  [date: string, date_parsed: date]
```
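If the data is small enough to bring into R, one alternative is to `collect()` it and parse the strings locally, where they become genuine R `Date` values. The sketch below uses only base R (no Spark connection is needed); the sample strings are made up, and it relies on the fact that the Java pattern `MM/dd/yyyy` corresponds to the `strptime`-style format `%m/%d/%Y`.

```r
# Hypothetical sample strings in the same MM/dd/yyyy layout as above
dates <- c("01/15/2010", "01/01/2010")

# Java "MM/dd/yyyy" is written as "%m/%d/%Y" in R
parsed_local <- as.Date(dates, format = "%m/%d/%Y")

class(parsed_local)  # [1] "Date"
parsed_local         # [1] "2010-01-15" "2010-01-01"
```

After `collect()`, the same `as.Date()` call could be used inside `mutate()` on the local data frame.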

For earlier versions, use `unix_timestamp` and `CAST` (but watch out for possible time zone problems):

```r
df %>%
  mutate(date_parsed = sql(
    "CAST(CAST(unix_timestamp(date, 'MM/dd/yyyy') AS timestamp) AS date)"))
```

```
# Source:   lazy query [?? x 2]
# Database: spark_connection
        date date_parsed
       <chr>       <chr>
1 01/15/2010  2010-01-15
```
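To see why time zones matter here, the following is a minimal base R illustration (not Spark code): the same instant, held as seconds since the epoch the way `unix_timestamp` returns it, falls on different calendar dates depending on which zone is used to truncate it to a date. `Europe/Warsaw` is an arbitrary UTC+1 example, and the sketch assumes the system time zone database is available.

```r
# Local midnight on 2010-01-01 in a UTC+1 zone (Europe/Warsaw chosen arbitrarily)
instant <- as.POSIXct("2010-01-01 00:00:00", tz = "Europe/Warsaw")

# Like unix_timestamp(), this is seconds since 1970-01-01 00:00:00 UTC
as.numeric(instant)  # [1] 1262300400

# Truncating the same instant to a calendar date depends on the zone used
as.Date(instant, tz = "Europe/Warsaw")  # [1] "2010-01-01"
as.Date(instant, tz = "UTC")            # [1] "2009-12-31"
```

A one-day shift of this kind is what to look out for when timestamps are converted to dates under different time zone settings.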

Edit:

It looks like this problem has been resolved on the current master (sparklyr_0.7.0-9105):

```
# Source:   lazy query [?? x 2]
# Database: spark_connection
        date date_parsed
       <chr>      <date>
1 01/01/2010  2009-12-31
```

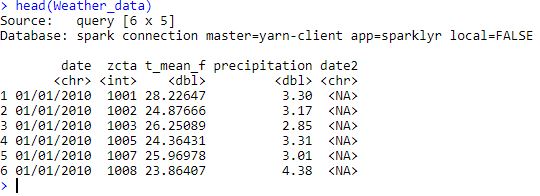
