I'm using Django, Celery, RabbitMQ and PostgreSQL.

I'm doing exactly what you want to do.

Install with pip: `celery`, `flower` and `django-celery-beat` (the last one provides the database scheduler used below).

You need a Celery configuration file in the same folder as your `settings.py`.
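A minimal version of that file (conventionally named `celery.py`) could look like the sketch below. It follows the layout of Celery's "First steps with Django" guide and assumes your project package is called `MYAPP`, the placeholder used in the commands further down. The same guide also imports this app in the project's `__init__.py` (`from .celery import app as celery_app`) so that it is loaded when Django starts.

```python
import os

from celery import Celery

# "MYAPP" is a placeholder for your Django project package.
os.environ.setdefault("DJANGO_SETTINGS_MODULE", "MYAPP.settings")

app = Celery("MYAPP")

# Read every Django setting prefixed with CELERY_ (e.g. CELERY_BROKER_URL).
app.config_from_object("django.conf:settings", namespace="CELERY")

# Look for a tasks.py module in every installed Django app.
app.autodiscover_tasks()
```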

In that file, add a `beat_schedule`:

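```python
from celery.schedules import crontab

app.conf.beat_schedule = {
    'task-name': {
        'task': 'myapp.tasks.task_name',
        # Monday to Friday at 05:30 (add an 'args' key if the task takes arguments)
        'schedule': crontab(minute=30, hour=5, day_of_week='mon-fri'),
    },
}
```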

When beat runs with the django-celery-beat scheduler (`-S django`, see below), this adds an entry in your database that executes `task_name` Monday to Friday at 5:30. You can change the schedule directly in your settings; restart Celery and Celery beat afterwards.
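To make the `crontab(...)` fields concrete, here is a small stdlib-only sketch that lists the next times this entry would fire. `next_runs` is a hypothetical helper written for illustration, not Celery's implementation, and it ignores timezones; Celery itself evaluates crontab schedules in the app's configured `timezone` (UTC unless you change it).

```python
from datetime import datetime, timedelta

# Hypothetical helper mimicking crontab(minute=30, hour=5, day_of_week='mon-fri').
# This is NOT how Celery computes schedules; it only illustrates the fields.
WEEKDAYS = {0, 1, 2, 3, 4}  # datetime.weekday(): Monday=0 ... Friday=4


def next_runs(start: datetime, count: int, hour: int = 5, minute: int = 30) -> list[datetime]:
    """Return the next `count` fire times strictly after `start`."""
    runs = []
    candidate = start.replace(hour=hour, minute=minute, second=0, microsecond=0)
    if candidate <= start:
        # Today's slot has already passed, start checking from tomorrow.
        candidate += timedelta(days=1)
    while len(runs) < count:
        if candidate.weekday() in WEEKDAYS:  # skip Saturday and Sunday
            runs.append(candidate)
        candidate += timedelta(days=1)
    return runs


# Starting on Friday 2024-01-05 at 06:00, the weekend is skipped.
for run in next_runs(datetime(2024, 1, 5, 6, 0), 3):
    print(run.strftime("%a %Y-%m-%d %H:%M"))  # Mon 01-08, Tue 01-09, Wed 01-10 at 05:30
```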

What I love is that you can add a retry mechanism really easily and safely:

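```python
from MYAPP.celery import app  # assumed location of the Celery app instance
from myapp.models import Entry  # assumed location of the Entry model


@app.task(bind=True, max_retries=50)
def task_name(self, entry_pk):
    # entry_pk is supplied by the caller, e.g. task_name.delay(entry.pk)
    entry = Entry.objects.get(pk=entry_pk)
    try:
        entry.method()
    except ValueError as e:
        # Re-queue this task on "punctual_queue" in 5 minutes; once
        # max_retries is exceeded, the ValueError is raised instead.
        raise self.retry(exc=e, countdown=5 * 60, queue="punctual_queue")
```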

When `method()` raises `ValueError`, the task is re-executed 5 minutes later, up to a maximum of 50 retries (one initial attempt plus 50 retries).
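The retry arithmetic is worth spelling out: with `max_retries=50` and `countdown=5 * 60`, an entry that keeps failing is retried for at least 50 × 5 = 250 minutes (about 4 hours 10 minutes) before the `ValueError` is finally raised. The stdlib-only sketch below mirrors that policy in plain Python. `run_with_retries` is a hypothetical helper, not Celery's implementation: Celery re-queues the message with a delay instead of sleeping inside the worker.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def run_with_retries(func: Callable[[], T], max_retries: int = 50,
                     countdown: float = 5 * 60,
                     sleep: Callable[[float], None] = time.sleep) -> T:
    """Call func; on ValueError wait `countdown` seconds and try again.

    One initial attempt plus up to `max_retries` retries; when the retries
    are used up, the last ValueError propagates to the caller.
    """
    retries = 0
    while True:
        try:
            return func()
        except ValueError:
            if retries >= max_retries:
                raise  # give up, like Celery raising `exc` after max_retries
            retries += 1
            sleep(countdown)  # injectable so it can be tested without waiting
```

Passing a fake `sleep` (for example a list's `append`) lets you check the policy instantly instead of waiting 5 minutes per retry.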

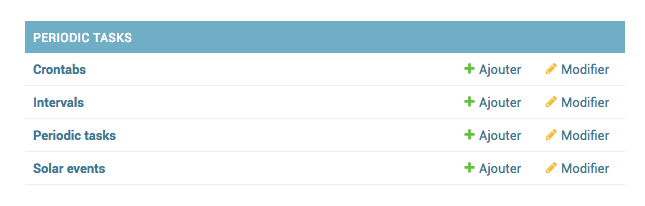

The good part is that you can see and edit these scheduled tasks in the Django admin:


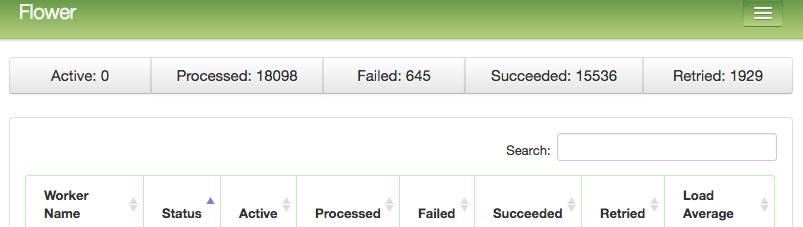

And you can check in Flower (`celery -A MYAPP flower`) whether a task was executed or not, including its traceback if it failed:


I have more than 1,000 tasks executed daily; what you need is to create queues and workers.

I use 10 workers for that (to leave room for future scaling):

```shell
celery multi start 10 -A MYAPP -Q:1-3 recurring_queue,punctual_queue -Q:4,5 punctual_queue -Q recurring_queue --pidfile="%n.pid"
```
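In `celery multi`, an option written as `-Q:1-3` applies only to nodes 1 to 3, `-Q:4,5` only to nodes 4 and 5, and a plain `-Q` to every node that has no value of its own. The stdlib-only sketch below expands the command above into an explicit table; `expand_queue_options` is a hypothetical helper for illustration and does not call Celery. The result is that nodes 1-3 consume both queues, nodes 4-5 only `punctual_queue`, and nodes 6-10 only `recurring_queue`.

```python
def expand_queue_options(n_nodes: int, per_node: dict[str, str],
                         default: str) -> dict[int, list[str]]:
    """Map node number -> queues, given "-Q:<spec>" values and a plain "-Q" default.

    A spec is a comma-separated list of node numbers or ranges, e.g. "1-3" or "4,5".
    """
    assignment: dict[int, list[str]] = {}
    for spec, queues in per_node.items():
        for part in spec.split(","):
            if "-" in part:
                lo, hi = (int(x) for x in part.split("-"))
                nodes = range(lo, hi + 1)
            else:
                nodes = [int(part)]
            for node in nodes:
                assignment[node] = queues.split(",")
    # Nodes without a specific -Q:<spec> fall back to the plain -Q value.
    return {node: assignment.get(node, default.split(","))
            for node in range(1, n_nodes + 1)}


plan = expand_queue_options(
    10,
    {"1-3": "recurring_queue,punctual_queue", "4,5": "punctual_queue"},
    default="recurring_queue",
)
for node, queues in plan.items():
    print(f"node {node}: {', '.join(queues)}")
```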

And the daemon that sends the scheduled tasks (Celery beat with the Django database scheduler):

```shell
celery -A MYAPP beat -S django --detach
```
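The `-Q` options only decide which queues each worker listens to; the queue a message lands in is decided when the task is sent (the retry above already passes `queue="punctual_queue"`). By default Celery sends tasks to a queue named `celery`, which none of these workers consume, so the scheduled runs need a route too. A configuration sketch, assuming the scheduled run is meant to go to `recurring_queue` (the names reuse the placeholders from above):

```python
from celery import Celery
from celery.schedules import crontab

app = Celery("MYAPP")  # in practice, reuse the app defined in your celery.py

# Option 1: route the task by name every time it is sent.
app.conf.task_routes = {
    "myapp.tasks.task_name": {"queue": "recurring_queue"},
}

# Option 2: set the queue only on the beat entry (passed on to apply_async).
app.conf.beat_schedule = {
    "task-name": {
        "task": "myapp.tasks.task_name",
        "schedule": crontab(minute=30, hour=5, day_of_week="mon-fri"),
        "options": {"queue": "recurring_queue"},
    },
}
```

An explicit `queue=` passed when sending, as in the `self.retry(...)` call, still decides where that particular message goes.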

It may be overkill for you, but it can do much more:

- sending email asynchronously (if it fails, you can fix the problem and resend the email)

- uploading and post-processing files asynchronously for the user

- any task that takes time and that you don't want to wait for (you can chain tasks: one task finishes and returns a result, and the next task uses it)
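In Celery a chain is written `chain(first.s(arg), second.s(extra))` (or `first.s(arg) | second.s(extra)`), and each task's return value is passed as the first positional argument of the next one. The stdlib-only sketch below imitates only that data flow so the argument passing is easy to see without a broker; `run_chain`, `count_words` and `multiply` are hypothetical names, not Celery APIs.

```python
from typing import Any, Callable

# A step is (callable, extra positional args), loosely like a Celery signature.
Step = tuple[Callable[..., Any], tuple]


def run_chain(first: Step, *rest: Step) -> Any:
    """Run the first step, then feed each result in as the next step's first argument."""
    func, args = first
    result = func(*args)
    for func, extra in rest:
        result = func(result, *extra)  # previous result goes first, like Celery
    return result


# Hypothetical task bodies standing in for real Celery tasks.
def count_words(text: str) -> int:
    return len(text.split())


def multiply(value: int, factor: int) -> int:
    return value * factor


print(run_chain((count_words, ("send the report now",)), (multiply, (10,))))  # prints 40
```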