Code

import numpy as np

from keras.preprocessing.image import ImageDataGenerator

from keras.models import Sequential,Model

from keras.layers import Dropout, Flatten, Dense,Input

from keras import applications

from keras.preprocessing import image

from keras import backend as K

K.set_image_dim_ordering('tf')

# dimensions of our images.

img_width, img_height = 150,150

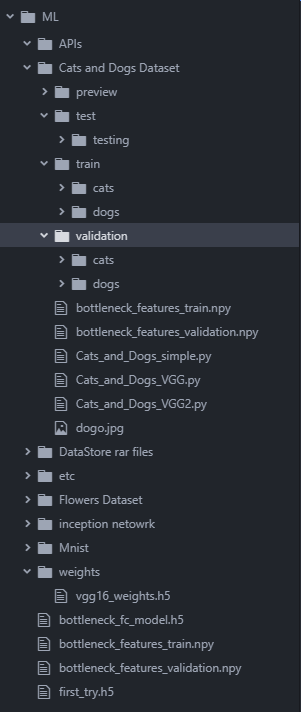

top_model_weights_path = 'bottleneck_fc_model.h5'

train_data_dir = 'Cats and Dogs Dataset/train'

validation_data_dir = 'Cats and Dogs Dataset/validation'

nb_train_samples = 20000

nb_validation_samples = 5000

epochs = 50

batch_size = 16

input_tensor = Input(shape=(150,150,3))

base_model=applications.VGG16(include_top=False, weights='imagenet',input_tensor=input_tensor)

for layer in base_model.layers:

    layer.trainable = False

top_model=Sequential()

top_model.add(Flatten(input_shape=base_model.output_shape[1:]))

top_model.add(Dense(256,activation="relu"))

top_model.add(Dropout(0.5))

top_model.add(Dense(1,activation='softmax'))

top_model.load_weights(top_model_weights_path)

model = Model(inputs=base_model.input,outputs=top_model(base_model.output))

datagen = ImageDataGenerator(rescale=1. / 255)

train_data = datagen.flow_from_directory(train_data_dir,target_size=(img_width, img_height),batch_size=batch_size,classes=['dogs', 'cats'],class_mode="binary",shuffle=False)

validation_data = datagen.flow_from_directory(validation_data_dir,target_size=(img_width, img_height),classes=['dogs', 'cats'], batch_size=batch_size,class_mode="binary",shuffle=False)

model.compile(optimizer='adam',loss='binary_crossentropy', metrics=['accuracy'])

model.fit_generator(train_data, steps_per_epoch=nb_train_samples//batch_size, epochs=epochs,validation_data=validation_data, shuffle=False,verbose=

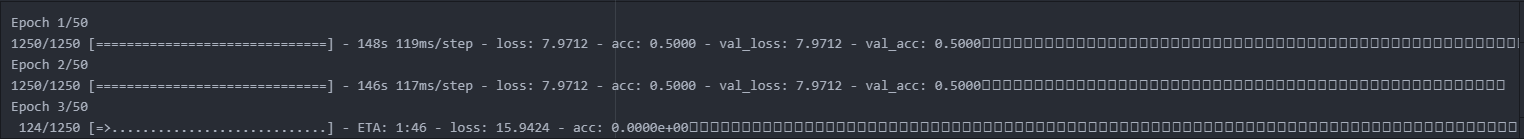

I have implemented an image classifier on the Cats and Dogs dataset (https://www.kaggle.com/c/dogs-vs-cats/data) using Keras, with transfer learning from the VGG16 network. The code runs without errors, but the accuracy is stuck at 0.0% for about the first half of the epoch, and after that it increases to an accuracy of 50%. I am using Atom with Hydrogen.

How do I fix this? I really don't think I have a bias problem with such a dataset and VGG16 (although I am relatively new to this field).
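One thing that may be worth checking is the output layer, `Dense(1,activation='softmax')`, combined with `shuffle=False`. The sketch below is plain NumPy, not Keras itself, and it rests on a few assumptions: that the accuracy metric thresholds the single output at 0.5, that `flow_from_directory` with `shuffle=False` and `classes=['dogs', 'cats']` yields all label-0 images before any label-1 images, and that the logits and the sample count `n` are made-up illustrative values. Under those assumptions it reproduces the "0.0% for half the epoch, then 50%" pattern.

```python
import numpy as np


def softmax(logits):
    """Numerically stable softmax over the last axis."""
    shifted = logits - logits.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)


def sigmoid(logits):
    """Logistic function, the usual activation for a single binary output unit."""
    return 1.0 / (1.0 + np.exp(-logits))


# Hypothetical raw outputs of a Dense(1) layer for four images.
logits = np.array([[-3.2], [0.0], [1.7], [8.5]])

# With one unit, softmax divides each value by itself, so every output is 1.0.
probs = softmax(logits)
print(probs.ravel())  # [1. 1. 1. 1.]

# Sigmoid, by contrast, maps each logit to its own value in (0, 1).
print(sigmoid(logits).ravel())

# A constant output of 1.0 means class 1 is predicted for every image.
n = 1000  # illustrative sample count, not the real dataset size
preds = np.ones(n, dtype=int)

# Unshuffled data: all label-0 images first, then all label-1 images.
labels = np.concatenate([np.zeros(n // 2, dtype=int),
                         np.ones(n // 2, dtype=int)])

# Running accuracy, similar in spirit to the averaged value in the progress bar.
running_acc = np.cumsum(preds == labels) / np.arange(1, n + 1)
print(running_acc[n // 2 - 1])  # 0.0 at the halfway point
print(running_acc[-1])          # 0.5 at the end of the pass
```

If this is what is happening, the constant output would also give the loss no useful gradient with respect to the top layer's weights, which would explain why the accuracy never moves past 50%. Switching the last layer to a sigmoid activation and shuffling the training data are the two changes this sketch suggests trying first.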