The read_lines() function within the readr package is faster than base::readLines(), and can be used to specify a start and end line for the read. For example:

library(readr)

myFile <- "./data/veryLargeFile.txt"

first25K <- read_lines(myFile,skip=0,n_max = 25000)

second25K <- read_lines(myFile,skip=25000,n_max=25000)

Here is a complete, working example using the NOAA StormData data set. The file describes the location, event type, and damage information for over 900,000 extreme weather events in the United States between 1950 and 2011. We will use readr::read_lines() to read the first 50,000 lines in groups of 25,000 after downloading and unzipping the file.

Warning: the zip file is about 50Mb.

library(R.utils)

library(readr)

dlMethod <- "curl"

if(substr(Sys.getenv("OS"),1,7) == "Windows") dlMethod <- "wininet"

url <- "https://d396qusza40orc.cloudfront.net/repdata%2Fdata%2FStormData.csv.bz2"

download.file(url,destfile='StormData.csv.bz2',method=dlMethod,mode="wb")

bunzip2("StormData.csv.bz2","StormData.csv")

first25K <- read_lines("StormData.csv",skip=0,n_max = 25000)

second25K <- read_lines("StormData.csv",skip=25000,n_max=25000)

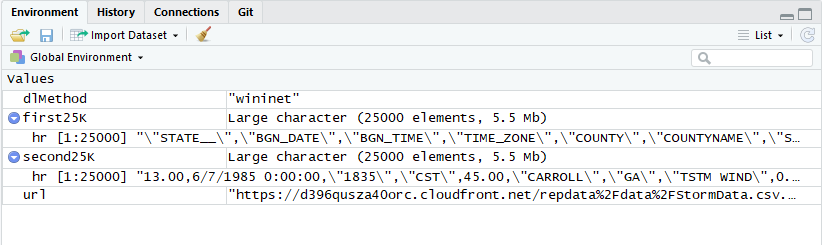

...and the objects as viewed in the RStudio Environment Viewer:

![enter image description here]()

Here are the performance timings comparing base::readLines() with readr::read_lines() on an HP Spectre x-360 laptop with an Intel i7-6500U processor.

> # check performance of readLines()

> system.time(first25K <- readLines("stormData.csv",n=25000))

user system elapsed

0.05 0.00 0.04

> # check performance of readr::read_lines()

> system.time(first25K <- read_lines("StormData.csv",skip=0,n_max = 25000))

user system elapsed

0.00 0.00 0.01