It's a bit strange that you say "it's not supported"; it's k8s.

Enable GKE cluster auto-scaler as specified here:

    gcloud container clusters update [CLUSTER_NAME] --enable-autoscaling \
        --min-nodes 1 --max-nodes 10 --zone [COMPUTE_ZONE] --node-pool default-pool

This targets the default node pool; if you created a new pool, use that one instead.

Edit your airflow-worker deployment and add the following to the container spec, just after name: airflow-worker or ports:

    resources:
      requests:
        cpu: 400m
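If you would rather not edit the manifest by hand, the same CPU request can probably be set from the command line with kubectl set resources. This is only a sketch: the function name set_worker_cpu_request is hypothetical, the namespace is passed in as an argument, and by default the command applies to every container in the deployment. Changing the pod template this way triggers a rolling restart of the workers, just as editing the manifest does.

```shell
# Hypothetical helper: sets a 400m CPU request on the airflow-worker deployment.
# $1 is the namespace the deployment lives in.
set_worker_cpu_request() {
  kubectl set resources deployment airflow-worker \
    --requests=cpu=400m \
    -n "$1"
}

# Usage (requires access to the cluster):
#   set_worker_cpu_request my-namespace
```

Without a CPU request on the pods, the autoscaler has nothing to compute a CPU percentage against, so this step has to come before the autoscale command.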

Then autoscale your deployment like this:

    kubectl autoscale deployment airflow-worker --cpu-percent=95 --min=1 --max=10 -n <your namespace>
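To get a feel for what --cpu-percent=95 means: the percentage is relative to the CPU request, so with a 400m request the autoscaler aims for about 380m average usage per pod. The documented scaling rule is roughly desired = ceil(current replicas * current utilization / target utilization), clamped to the min and max. Below is a minimal sketch of that arithmetic; the function name desired_replicas is made up for illustration, and it ignores the controller's tolerance and the scale-down stabilization delay, so the real HPA reacts more slowly than this suggests.

```shell
# Sketch of the HPA replica calculation (not the controller's actual code).
# args: current_replicas current_cpu_pct target_cpu_pct min_replicas max_replicas
desired_replicas() {
  # Integer ceiling of (replicas * current / target)
  desired=$(( ($1 * $2 + $3 - 1) / $3 ))
  # Clamp to the --min / --max bounds
  if [ "$desired" -lt "$4" ]; then desired=$4; fi
  if [ "$desired" -gt "$5" ]; then desired=$5; fi
  echo "$desired"
}

desired_replicas 3 190 95 1 10   # prints 6: pods at twice the target, so double them
desired_replicas 2 28 95 1 10    # prints 1: load fits in one pod, scale down to --min
```

Any average utilization at or below the target never asks for more pods than are already running, so extra pods only appear once the average goes above 95%.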

In my case it works like a charm: it scales both nodes and pods for my airflow-worker deployment.

PoC:

    $ kubectl get hpa -n <my-namespace> -w
    NAME             REFERENCE                   TARGETS   MINPODS   MAXPODS   REPLICAS   AGE
    airflow-worker   Deployment/airflow-worker   /95%      1         3         0          13s
    airflow-worker   Deployment/airflow-worker   20%/95%   1         10        1          29m
    airflow-worker   Deployment/airflow-worker   27%/95%   1         10        2          29m
    airflow-worker   Deployment/airflow-worker   30%/95%   1         10        3          29m
    airflow-worker   Deployment/airflow-worker   53%/95%   1         10        3          29m
    airflow-worker   Deployment/airflow-worker   45%/95%   1         10        3          34m
    airflow-worker   Deployment/airflow-worker   45%/95%   1         10        3          34m
    airflow-worker   Deployment/airflow-worker   28%/95%   1         10        2          34m
    airflow-worker   Deployment/airflow-worker   32%/95%   1         10        2          35
    airflow-worker   Deployment/airflow-worker   37%/95%   1         10        2          43m
    airflow-worker   Deployment/airflow-worker   84%/95%   1         10        1          43m
    airflow-worker   Deployment/airflow-worker   39%/95%   1         10        1          44m
    airflow-worker   Deployment/airflow-worker   29%/95%   1         10        1          44m

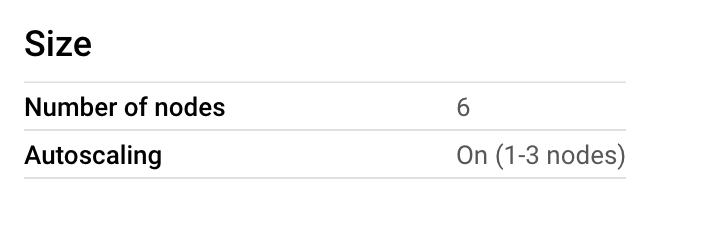

You can see that after a while it scales down to 1 pod. Likewise, the node pool scales down to 4 nodes instead of 5, 6, or 7.