I am attempting to understand the best uses of backward and forward chaining in AI programming for a program I am writing. Would anyone be able to explain the most ideal uses of backward and forward chaining? Also, could you provide an example?

I have done some research on the current understanding of "forward chaining" and "backward chaining". This brings up a lot of material. Here is a résumé.

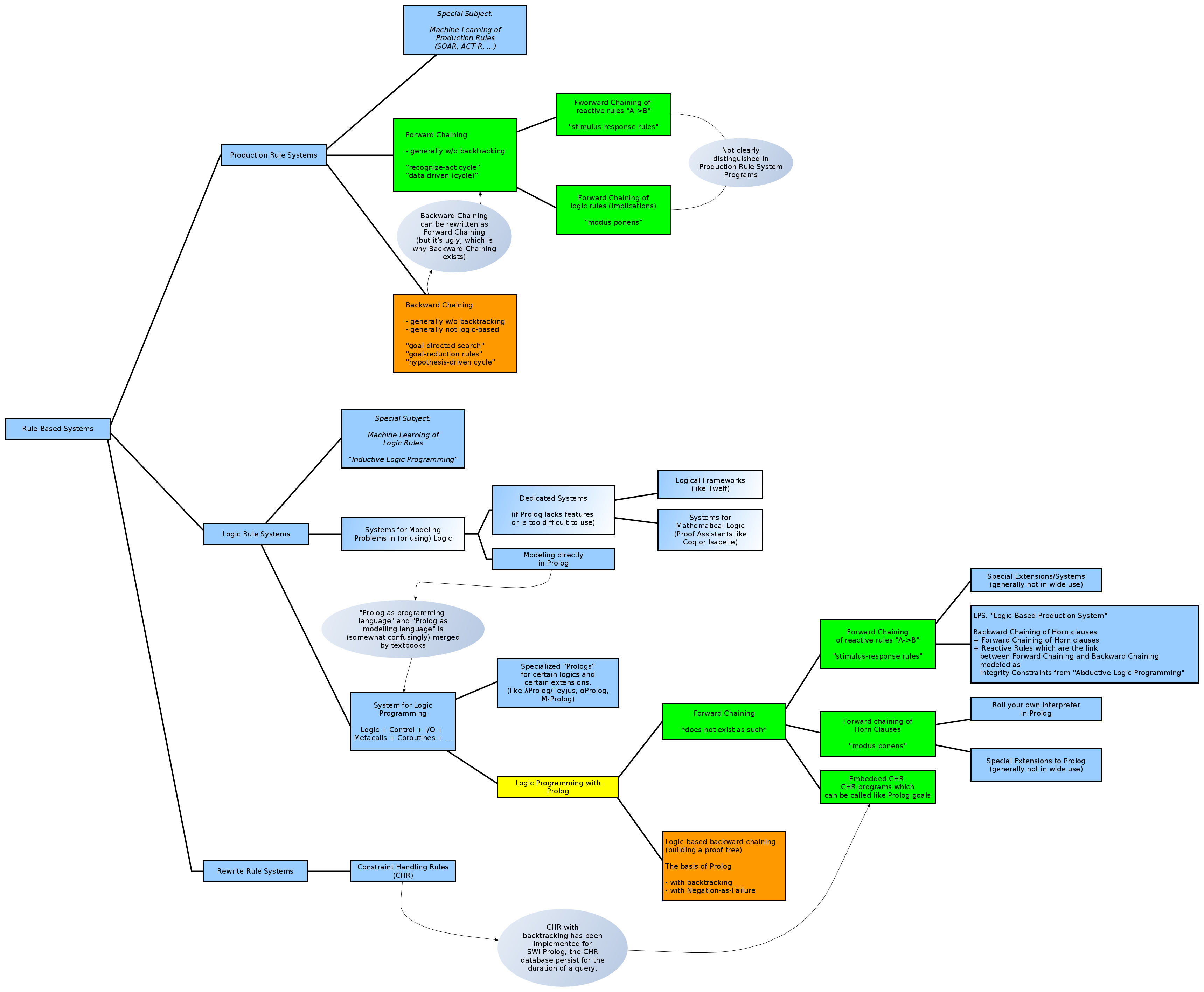

First a diagram, partially based on:

- The Sad State Concerning the Relationships between Logic, Rules and Logic Programming (Robert Kowalski)

LHS stands for "left-hand-side", RHS stands for "right-hand-side" of a rule throughout.

Let us separate "Rule-Based Systems" (i.e. systems which do local computation based on rules), into three groups as follows:

- Production Rule Systems, which include the old-school Expert System Shells, which are not built on logical principles, i.e. "without a guiding model".

- Logic Rule Systems, i.e. system based on a logical formalism (generally a fragment of first-order logic, classical or intuitionistic). This includes Prolog.

- Rewrite Rule Systems, systems which rewrite some working memory based on,

LHS => RHSrewrite rules.

There may be others. Features of one group can be found in another group. Systems of one group may be partially or wholly implemented by systems of another group. Overlap is not only possible but certain.

(Sadly, imgur does not accept .svg in 2020, so it's a .png)

- Green: Forward Chaining

- Orange: Backward Chaining

- Yellow: Prolog

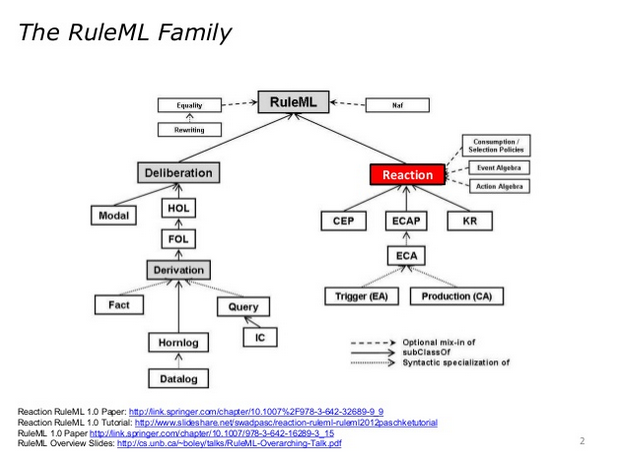

RuleML (an organization) tries to XML-ize the various rulesets which exist. They classify rules as follows:

The above appears in The RuleML Perspective on Reaction Rule Standards by Adrian Paschke.

So they make a differentiation between "deliberative rules" and "reactive rules", which fits.

First box: "Production Rule Systems"

The General Idea of the "Production Rule System" (PRS)

- There are "

LHS->RHS" rules & meta-rules, with the latter controlling application of the first. Rules can be "logical" (similar to Prolog Horn Clauses), but they need not be! - The PRS has a "working memory", which is changed destructively whenever a rule is applied: elements or facts can be removed, added or replaced in the working memory.

- PRS have "operational semantics" only (they are defined by what they do).

- PRS have no "declarative semantics", which means there is no proper way to reason about the ruleset itself: What it computes, what its fixpoint is (if there is one), what its invariants are, whether it terminates etc.

- More features:

- Ad-hoc handling of uncertainty using locally computable functions (i.e. not probability computations) as in MYCIN, with Fuzzy rules, Dempster-Shaefer theory etc.

- Strong Negation may be expressed in an ad-hoc fashion.

- Generally, backtracking on impasse is not performed, one has to implement it explicitly.

- PRS can connect to other systems rather directly: Call a neural network, call an optimizer or SAT Solver, call a sensor, call Prolog etc.

- Special support for explanations & debugging may or may not exist.

Example Implementations

- Ancient:

- Old-school "expert systems shells", often written in LISP.

- Planner of 1971, which is language with rudimentary (?) forward and backward chaining. The implementations of that language were never complete.

- The original OPSx series, in particular OPS5, on which R1/XCON - a VAX system configurator with 2500 rules - was running. This was actually a forward-chaining implementation.

- Recent:

- CLIPS (written in C): http://www.clipsrules.net/

- Jess (written in Java): https://jess.sandia.gov/

- Drools (writen in "Enterprise" Java): https://www.drools.org/

Drools supports "backwards-chaining" (how exactly), but I'm not sure any of the others does, and if they do, how it looks like)

"Forward chaining" in PRS

Forward-chaining is the original approach to the PRS "cycle", also called "recognize-act" cycle, or the "data-driven cycle", which indicates what it is for. Event-Condition-Action architecture is another commonly used description.

The inner working are straightforward:

- The rule

LHSs are matched against the working memory (which happens at every working memory update thanks to the RETE algorithm). - One of the matching rules is selected according to some criterium (e.g. priority) and its

RHSis executed. This continues until noLHSmatches anymore.

This cycle can be seen as higher-level approach to imperative state-based languages.

Robert Kowalski notes that the "forward chaining" rules are actually an amalgamation of two distinct uses:

Forward-chained logic rules

These rules apply Modus Ponens repeatedly to the working memory and add deduced facts.

Example:

"IF X is a man, THEN X is mortal"

Uses:

- Deliberation, refinement of representations.

- Exploration of state spaces.

- Planning if you want more control or space is at a premium (R1/XCON was a forward chaining system, which I find astonishing. This was apparently due to the desire to keep resource usage within bounds).

In Making forward chaining relevant (1998), Fahiem Bacchus writes:

Forward chaining planners have two particularly useful properties. First, they maintain complete information about the intermediate states generated by a potential plan. This information can be utilized to provide highly effective search control, both domain independent heuristic control and even more effective domain dependent control ... The second advantage of forward chaining planners is they can support rich planning languages. The TLPlan system for example, supports the full ADL language, including functions and numeric calculations. Numbers and functions are essential for modeling many features of real planning domains, particularly resourcs and resource consumption.

How much of the above really applies is debatable. You can always write your backward-chaining planner to retain more information or to be open to configuration by a search strategy selecting module.

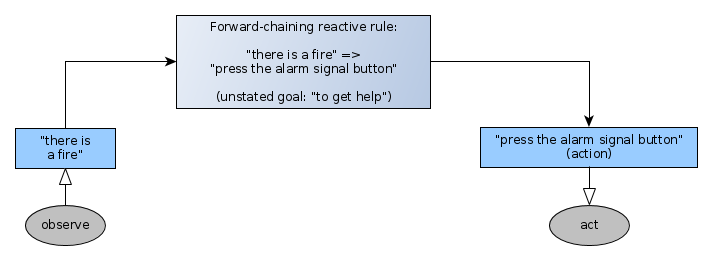

Forward-chaining "reactive rules" aka "stimulus-response rules"

Example:

"IF you are hungry THEN eat something"

The stimulus is "hunger" (which can be read off a sensor). The response is to "eat something" (which may mean controlling an effector). There is an unstated goal, hich is to be "less hungry", which is attained by eating, but there is no deliberative phase where that goal is made explicit.

Uses:

- Immediate, non-deliberative agent control:

LHScan be sensor input,RHScan be effector output.

"Backward chaining" in PRS

Backward chaining, also called "goal-directed search", applies "goal-reduction rules" and runs the "hypothesis-driven cycle", which indicates what it is for.

Examples:

Use this when:

- Your problem looks like a "goal" that may be broken up into "subgoals", which can be solved individually. Depending on the problem, this may not be possible. The subgoals have too many interdependencies or too little structure.

- You need to "pull in more data" on demand. For example, you ask the user

Y/Nquestion until you have classified an object properly, or, equivalently, until a diagnosis has been obtained. - When you need to plan, search, or build a proof of a goal.

One can encode backward-chaining rules also as forward-chaining rules as a programming exercise. However, one should choose the representation and the computational approach that is best adapted to one's problem. That's why backward chaining exists after all.

Second box: "Logic Rule Systems" (LRS)

These are systems based on some underlying logic. The system's behaviour can (at least generally) be studied independently from its implementation.

See this overview: Stanford Encyclopedia of Philosophy: Automated Reasoning.

I make a distinction between systems for "Modeling Problems in Logic" and systems for "Programming in Logic". The two are merged in textbooks on Prolog. Simple "Problems in Logic" can be directly modeled in Prolog (i.e. using Logic Programming) because the language is "good enough" and there is no mismatch. However, at some point you need dedicated systems for your task, and these may be quite different from Prolog. See Isabelle or Coq for examples.

Restricting ourselves to Prolog family of systems for "Logic Programming":

"Forward chaining" in LRS

Forward-chaining is not supported by a Prolog system as such.

Forward-chained logic rules

If you want to forward-chained logic rules you can write your own interpreter "on top of Prolog". This is possible because Prolog is general purpose programming language.

Here is a very silly example of forward chaining of logic rules. It would certainly be preferable to define a domain-specific language and appropriate data structures instead:

add_but_fail_if_exists(Fact,KB,[Fact|KB]) :- \+member(Fact,KB).

fwd_chain(KB,KBFinal,"forall x: man(x) -> mortal(x)") :-

member(man(X),KB),

add_but_fail_if_exists(mortal(X),KB,KB2),

!,

fwd_chain(KB2,KBFinal,_).

fwd_chain(KB,KBFinal,"forall x: man(x),woman(y),(married(x,y);married(y,x)) -> needles(y,x)") :-

member(man(X),KB),

member(woman(Y),KB),

(member(married(X,Y),KB);member(married(Y,X),KB)),

add_but_fail_if_exists(needles(Y,X),KB,KB2),

!,

fwd_chain(KB2,KBFinal,_).

fwd_chain(KB,KB,"nothing to deduce anymore").

rt(KBin,KBout) :- fwd_chain(KBin,KBout,_).

Try it:

?- rt([man(socrates),man(plato),woman(xanthippe),married(socrates,xanthippe)],KB).

KB = [needles(xanthippe, socrates), mortal(plato),

mortal(socrates), man(socrates), man(plato),

woman(xanthippe), married(socrates, xanthippe)].

Extensions to add efficient forward-chaining to Prolog have been studied but they seem to all have been abandoned. I found:

- 1989: Adding Forward Chaining and Truth Maintenance to Prolog (PDF) (Tom_Finin, Rich Fritzson, Dave Matuszek)

- There is an active implementation of this on GitHub: Pfc -- forward chaining in Prolog, and an SWI-Prolog pack, see also this discussion.

- 1997: Efficient Support for Reactive Rules in Prolog (PDF) (Mauro Gaspari) ... the author talks about "reactive rules" but apparently means "forward-chained deliberative rules".

- 1998: On Active Deductive Database: The Statelog Approach (Georg Lausen, Bertram Ludäscher, Wolfgang May).

Kowalski writes:

"Zaniolo (LDL++?) and Statelog use a situation calculus-like representation with frame axioms, and reduce Production Rules and Event-Condition-Action rules to Logic Programs. Both suffer from the frame problem."

Forward-chained reactive rules

Prolog is not really made for "reactive rules". There have been some attempts:

- LUPS : A language for updating logic programs (1999) (Moniz Pereira , Halina Przymusinska , Teodor C. Przymusinski C)

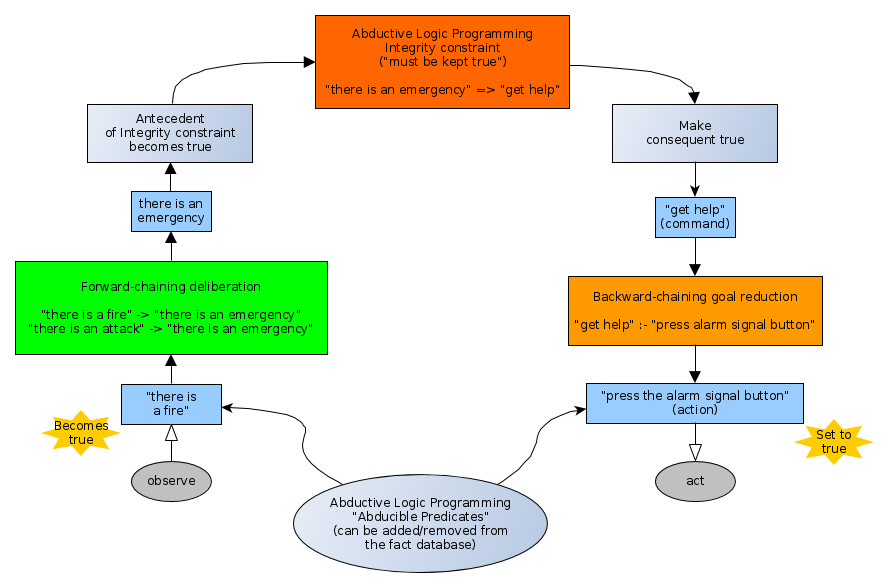

The "Logic-Based Production System" (LPS) is recent and rather interesting:

- Integrating Logic Programming and Production Systems in Abductive Logic Programming Agents (Robert Kowalski, Fariba Sadri)

- Presentation at RR2009: Integrating Logic Programming and Production Systems in Abductive Logic Programming Agents

- LPS website

It defines a new language where Observations lead to Forward-Chaining and Backward-Chaining lead to Acts. Both "silos" are linked by Integrity Constraints from Abductive Logic Programming.

So you can replace a reactive rule like this:

By something like this, which has a logic interpretation:

Third Box: "Rewrite Rule Systems" (forward-chaining)

See also: Rewriting.

Here, I will just mention CHR. It is a forward-chaining system which successively rewrites elements in a working memory according to rules with match working memory elements, verify a logic guard condition , and removed/add working memory elements if the logic guard condition succeeds.

CHR can be understood as an application of a fragment of linear logic (see "A Unified Analytical Foundation for Constraint Handling Rules" by Hariolf Betz).

A CHR implementation exists for SWI Prolog. It provides backtracking capability for CHR rules and a CHR goal can be called like any other Prolog goal.

Usage of CHR:

- General model of computational (i.e. like Turing Machines etc.)

- Bottom up parsing.

- Type checking.

- Constraint propagation in constraint logic programmning.

- Anything that you would rather forward-chain (process bottom-up) rather than backward-chain (process top-down).

I find it useful to start with your process and goals.

If your process can be easily expressed as trying to satisfy a goal by satisfying sub-goals then you should consider a backward-chaining system such as Prolog. These systems work by processing rules for the various ways in which a goal can be satisfied and the constraints on these applying these ways. Rule processing searches the network of goals with backtracking to try alternatives when one way of satisfying a goal fails.

If your process starts with a set of known information and applies the rules to add information then you should consider a forward-chaining system such as Ops5, CLIPS or JESS. These languages apply matching to the left hand side of the rule and invoke the right hand side of rules for which the matching succeeds. The working memory is better thought of as "what is known" than "true facts". Working memory can contain information known to be true, information known to be false, goals, sub-goals, and even domain rules. How this information is used is determined by the rules, not the language. To these languages there is no difference between rules that create values (deduce facts), rules that create goals, rules that create new domain knowledge or rules that change state. It is all in how you write your rules and organize your data and add base clauses to represent this knowledge.

It is fairly easy to implement either method using the other method. If you have a body of knowledge and want to make dedications but this needs to be directed by some goals go ahead and use a forward chaining language with rules to keep track of goals. In a backward chaining language you can have goals to deduce knowledge.

I would suggest that you consider writing rules to handle the processing of domain knowledge and not to encode your domain knowledge directly in the rules processed by the inference engine. Instead, the working memory or base clauses can contain your domain knowledge and the language rules can apply them. By representing the domain knowledge in working memory you can also write rules to check the domain knowledge (validate data, check for overlapping ranges, check for missing values, etc.), add a logic system on top of the rules (to calculate probabilities, confidence values, or truth values) or handle missing values by prompting for user input.

© 2022 - 2024 — McMap. All rights reserved.