How can I convert a uniform distribution (as most random number generators produce, e.g. between 0.0 and 1.0) into a normal distribution? What if I want a mean and standard deviation of my choosing?

There are plenty of methods:

- Do not use Box-Muller, especially if you draw many Gaussian numbers. Box-Muller yields a result that is clamped to a bounded range, because its largest possible magnitude is sqrt(-2 ln u_min), where u_min is the smallest nonzero value the uniform generator can return: about ±6.7 for 32-bit uniforms and about ±8.6 for 53-bit doubles, and worse (about ±5.8) with 24-bit floats. It is also really less efficient than other available methods.

- Ziggurat is fine, but needs a table lookup (and some platform-specific tweaking due to cache size issues).

- Ratio-of-uniforms is my favorite: only a few additions/multiplications, plus a log only 1/50th of the time.

- Inverting the CDF is efficient (and overlooked; why?). Fast implementations are available if you search Google. It is mandatory for quasi-random numbers.
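A minimal JavaScript sketch of the ratio-of-uniforms idea, assuming `Math.random()` as the uniform source. It uses the Kinderman-Monahan form with the quick acceptance and rejection bounds given in Knuth's Algorithm R (TAOCP Vol. 2, Section 3.4.1). It is not necessarily the exact variant the answer has in mind: the log frequency depends on how tight the bounds are, and these simpler bounds may call the log more often. The names `ratioOfUniforms` and `normalRatio` are hypothetical.

```javascript
// Ratio-of-uniforms (Kinderman-Monahan) with the quick accept/reject
// bounds from Knuth's Algorithm R. Returns a standard normal deviate.
const SQRT_8_OVER_E = Math.sqrt(8 / Math.E);
const QUICK_ACCEPT = 4 * Math.exp(0.25);   // 4 * e^(1/4)
const QUICK_REJECT = 4 * Math.exp(-1.35);  // 4 * e^(-1.35)

function ratioOfUniforms() {
  for (;;) {
    const u = 1 - Math.random();             // u in (0, 1], so log(u) is finite
    const v = Math.random();                 // v in [0, 1)
    const x = SQRT_8_OVER_E * (v - 0.5) / u; // candidate deviate v'/u
    const xx = x * x;
    if (xx <= 5 - QUICK_ACCEPT * u) return x;     // cheap acceptance, no log
    if (xx >= QUICK_REJECT / u + 1.4) continue;   // cheap rejection, no log
    if (xx <= -4 * Math.log(u)) return x;         // exact test: u^2 <= exp(-x^2 / 2)
  }
}

// Scale to an arbitrary mean and standard deviation.
function normalRatio(mean, stdDev) {
  return mean + stdDev * ratioOfUniforms();
}
```

Both quick tests follow from the inequality ln(t) <= t - 1, so they never change which candidates are accepted; they only skip the log call when the answer is already clear.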

The Ziggurat algorithm is pretty efficient for this, although the Box-Muller transform is easier to implement from scratch (and not crazy slow).

Converting one distribution into another involves using the inverse of the cumulative distribution function you want.

In other words, if you aim for a specific probability density function p(x), you get the (cumulative) distribution by integrating it, $d(x) = \int_{-\infty}^{x} p(t)\,dt$, and then use its inverse, Inv(d(x)). Now take the output of the random number generator (which has a uniform distribution) and pass it through Inv(d(x)). The resulting values follow the distribution you chose.

This is the generic mathematical approach: with it you can use any probability density or distribution function, as long as it has an inverse or a good approximation of one.
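A small JavaScript sketch of this recipe for a distribution whose inverse has a closed form, assuming `Math.random()` as the uniform source. The exponential distribution with rate λ has d(x) = 1 - e^(-λx), so Inv(u) = -ln(1 - u)/λ. The helper names `inverseTransform` and `expInverseCdf` are hypothetical.

```javascript
// Inverse transform sampling: push a uniform value through the inverse CDF.
function inverseTransform(inverseCdf) {
  let u;
  do {
    u = Math.random();   // uniform in [0, 1)
  } while (u === 0);     // skip the endpoint, where many inverse CDFs are infinite
  return inverseCdf(u);
}

// Exponential distribution with rate lambda: d(x) = 1 - exp(-lambda * x),
// so Inv(u) = -ln(1 - u) / lambda (log1p keeps precision for small u).
function expInverseCdf(lambda) {
  return (u) => -Math.log1p(-u) / lambda;
}

const sample = inverseTransform(expInverseCdf(2)); // one draw; the mean of these draws is 1/2
```

The normal CDF has no closed-form inverse, which is why the normal case relies on a numerical approximation of Inv instead.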

Hope this helped and thanks for the small remark about using the distribution and not the probability itself.

Here is a JavaScript implementation using the polar form of the Box-Muller transformation.

```javascript
/*
 * Returns a member of a set with the given mean and standard deviation.
 * mean: mean
 * std_dev: standard deviation
 */
function createMemberInNormalDistribution(mean, std_dev) {
  return mean + gaussRandom() * std_dev;
}

/*
 * Returns a random number from a normal distribution centered on 0.
 * ~95% of the numbers returned should fall between -2 and 2,
 * i.e. within two standard deviations.
 */
function gaussRandom() {
  var u = 2 * Math.random() - 1;
  var v = 2 * Math.random() - 1;
  var r = u * u + v * v;
  /* if r is outside the interval (0, 1), i.e. the point is at the origin
   * or outside the unit circle, start over */
  if (r == 0 || r >= 1) return gaussRandom();
  var c = Math.sqrt(-2 * Math.log(r) / r);
  return u * c;
  /* todo: optimize this algorithm by caching (v*c)
   * and returning it the next time gaussRandom() is called.
   * left out for simplicity */
}
```
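The todo above can be filled in roughly as follows. This is a sketch, not the answer's own code: the polar method produces two independent deviates, u*c and v*c, so the second one is stored in a closure and returned on the next call. The names `makeGaussRandom`, `spare` and `gaussCached` are hypothetical, and the retry loop replaces the recursion.

```javascript
// Polar Box-Muller that caches the second deviate (v * c) for the next call.
function makeGaussRandom() {
  let spare = null; // cached deviate from the previous call, if any
  return function gaussRandom() {
    if (spare !== null) {
      const z = spare;
      spare = null;
      return z;
    }
    let u, v, r;
    do {
      u = 2 * Math.random() - 1;
      v = 2 * Math.random() - 1;
      r = u * u + v * v;
    } while (r === 0 || r >= 1); // keep only points strictly inside the unit circle
    const c = Math.sqrt(-2 * Math.log(r) / r);
    spare = v * c; // second independent N(0, 1) deviate
    return u * c;
  };
}

const gaussCached = makeGaussRandom();
```

Every other call then costs no uniform draws, no log and no square root.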

Use the central limit theorem (see its Wikipedia and MathWorld entries) to your advantage.

Generate n uniformly distributed numbers, sum them, and subtract n*0.5. The result is approximately normally distributed with mean 0 and variance n/12, because each uniform number on [0, 1] has variance 1/12 (see Wikipedia on uniform distributions). Divide by sqrt(n/12) to get unit variance.

n=10 gives you something half-decent fast. If you want something better than half-decent, go for Tyler's solution (as noted in the Wikipedia entry on normal distributions).
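A short JavaScript sketch of the central-limit recipe, assuming `Math.random()` as the uniform source and n = 12 by default. Dividing by sqrt(n/12) rescales the sum to unit variance, and the chosen mean and standard deviation are then applied. The name `cltNormal` is hypothetical.

```javascript
// Approximate N(mean, stdDev^2) by summing n uniforms (central limit theorem).
function cltNormal(mean, stdDev, n = 12) {
  let sum = 0;
  for (let i = 0; i < n; i++) sum += Math.random(); // each term: mean 1/2, variance 1/12
  const z = (sum - n / 2) / Math.sqrt(n / 12);       // standardized: mean 0, variance 1
  return mean + stdDev * z;
}
```

Since the sum is bounded, |z| can never exceed sqrt(3n) (6 for n = 12), so the tails are truncated no matter how many samples you draw.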

Where R1, R2 are random uniform numbers:

NORMAL DISTRIBUTION, with SD of 1:

sqrt(-2*log(R1))*cos(2*pi*R2)

This is exact... no need to do all those slow loops!

Reference: dspguide.com/ch2/6.htm
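The formula above as a JavaScript sketch that also returns the second, independent deviate from the sine term and applies a chosen mean and standard deviation. `Math.random()` can return 0, so R1 is taken as `1 - Math.random()` to keep the log finite. The function name `boxMuller` is hypothetical.

```javascript
// Basic (trigonometric) Box-Muller: two uniforms in, two independent N(0, 1)
// deviates out, then scaled to the requested mean and standard deviation.
function boxMuller(mean = 0, stdDev = 1) {
  const r1 = 1 - Math.random();              // in (0, 1], so log(r1) is finite
  const r2 = Math.random();                  // in [0, 1)
  const radius = Math.sqrt(-2 * Math.log(r1));
  const theta = 2 * Math.PI * r2;
  return [
    mean + stdDev * radius * Math.cos(theta),
    mean + stdDev * radius * Math.sin(theta),
  ];
}
```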

I would use Box-Muller. Two things about this:

- You end up with two values per iteration

  Typically, you cache one value and return the other. On the next call for a sample, you return the cached value.

- Box-Muller gives a Z-score

  You have to then scale the Z-score by the standard deviation and add the mean to get the full value in the normal distribution.

It seems incredible that I could add something to this after eight years, but for the case of Java I would like to point readers to the Random.nextGaussian() method, which generates a Gaussian distribution with mean 0.0 and standard deviation 1.0 for you.

A simple addition and/or multiplication will change the mean and standard deviation to your needs.

The standard Python library module random has what you want:

normalvariate(mu, sigma)

Normal distribution. mu is the mean, and sigma is the standard deviation.

For the algorithm itself, take a look at the function in random.py in the Python library.

This is my JavaScript implementation of Algorithm P (Polar method for normal deviates) from Section 3.4.1 of Donald Knuth's book The Art of Computer Programming:

```javascript
function normal_random(mean, stddev) {
  var V1, V2, S;
  do {
    var U1 = Math.random(); // uniformly distributed in [0, 1)
    var U2 = Math.random();
    V1 = 2 * U1 - 1;
    V2 = 2 * U2 - 1;
    S = V1 * V1 + V2 * V2;
  } while (S >= 1 || S === 0); // retry outside the unit circle or at the origin
  return mean + stddev * (V1 * Math.sqrt(-2 * Math.log(S) / S));
}
```

I think you should try this in Excel: =norminv(rand();0;1). This will produce random numbers that are normally distributed with zero mean and unit variance. The "0" can be replaced with any value to get numbers with that mean, and by changing the "1" you get a variance equal to the square of your input.

For example, =norminv(rand();50;3) will yield normally distributed numbers with MEAN = 50 and VARIANCE = 9.
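Outside Excel, the same norminv(rand(), mean, sd) idea needs an inverse normal CDF. JavaScript has none built in, so this sketch swaps in Peter Acklam's published rational approximation (reported relative error around 1.15e-9, without his optional refinement step). The names `normInv`, `normInvRandom` and `tail` are hypothetical.

```javascript
// Acklam's rational approximation to the inverse standard normal CDF.
const A = [-3.969683028665376e+01, 2.209460984245205e+02, -2.759285104469687e+02,
            1.383577518672690e+02, -3.066479806614716e+01, 2.506628277459239e+00];
const B = [-5.447609879822406e+01, 1.615858368580409e+02, -1.556989798598866e+02,
            6.680131188771972e+01, -1.328068155288572e+01];
const C = [-7.784894002430293e-03, -3.223964580411365e-01, -2.400758277161838e+00,
           -2.549732539343734e+00, 4.374664141464968e+00, 2.938163982698783e+00];
const D = [7.784695709041462e-03, 3.224671290700398e-01, 2.445134137142996e+00,
           3.754408661907416e+00];
const P_LOW = 0.02425; // boundary between the central region and the tails

// Lower-tail formula for p in (0, P_LOW); the upper tail follows by symmetry.
function tail(p) {
  const q = Math.sqrt(-2 * Math.log(p));
  return (((((C[0] * q + C[1]) * q + C[2]) * q + C[3]) * q + C[4]) * q + C[5]) /
         ((((D[0] * q + D[1]) * q + D[2]) * q + D[3]) * q + 1);
}

function normInv(p, mean = 0, stdDev = 1) {
  if (!(p > 0 && p < 1)) throw new RangeError("p must be in (0, 1)");
  let z;
  if (p < P_LOW) {
    z = tail(p);
  } else if (p > 1 - P_LOW) {
    z = -tail(1 - p);
  } else {
    const q = p - 0.5;
    const r = q * q;
    z = (((((A[0] * r + A[1]) * r + A[2]) * r + A[3]) * r + A[4]) * r + A[5]) * q /
        (((((B[0] * r + B[1]) * r + B[2]) * r + B[3]) * r + B[4]) * r + 1);
  }
  return mean + stdDev * z;
}

// Analogue of Excel's =norminv(rand(); mean; stdDev).
function normInvRandom(mean, stdDev) {
  let u;
  do { u = Math.random(); } while (u === 0); // normInv is infinite at 0
  return normInv(u, mean, stdDev);
}
```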

Q: How can I convert a uniform distribution (as most random number generators produce, e.g. between 0.0 and 1.0) into a normal distribution?

For software implementations, I know a couple of random generators which give you a pseudo-uniform random sequence in [0,1] (Mersenne Twister, linear congruential generator). Let's call it U(x).

There is a mathematical field called probability theory. First: if you want to model a random variable (r.v.) with cumulative distribution function F, you can simply evaluate F^-1(U(x)). Probability theory proves that such an r.v. has distribution F.

This can be applied to generate an r.v. ~ F without any numerical methods whenever F^-1 can be derived analytically (e.g. the exponential distribution).

To model the normal distribution, you can calculate y1*cos(y2), where y1 follows the Rayleigh distribution (with scale 1) and y2 is uniform on [0, 2pi].
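A short derivation of why this works, using the F^-1(U(x)) step described above and assuming the Rayleigh distribution with scale 1: its CDF has a closed-form inverse, so y1 can be drawn directly from a uniform U1.

$$
F(r) = 1 - e^{-r^2/2} \quad (r \ge 0)
\quad\Longrightarrow\quad
F^{-1}(u) = \sqrt{-2\ln(1-u)}.
$$

Since $1 - U_1$ is uniform whenever $U_1$ is, one can take $y_1 = \sqrt{-2\ln U_1}$ and $y_2 = 2\pi U_2$ for independent uniforms $U_1, U_2$, which gives the basic Box-Muller formula:

$$
y_1 \cos y_2 = \sqrt{-2\ln U_1}\,\cos(2\pi U_2) \sim N(0, 1).
$$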

Q: What if I want a mean and standard deviation of my choosing?

You can calculate sigma*N(0,1)+m.

It can be shown that such shifting and scaling leads to N(m, sigma).

This is a Matlab implementation using the polar form of the Box-Muller transformation:

Function randn_box_muller.m:

```matlab
function [values] = randn_box_muller(n, mean, std_dev)
    if nargin < 2
        mean = 0;
    end
    if nargin < 3
        std_dev = 1;
    end
    r = gaussRandomN(n);
    values = r.*std_dev + mean;   % scale by the SD, then shift by the mean
end

function [values] = gaussRandomN(n)
    [u, v, r] = gaussRandomNValid(n);
    c = sqrt(-2*log(r)./r);
    values = u.*c;
end

function [u, v, r] = gaussRandomNValid(n)
    r = zeros(n, 1);
    u = zeros(n, 1);
    v = zeros(n, 1);
    filter = r==0 | r>=1;
    % redraw every point at the origin or outside the unit circle (r not in (0, 1))
    while n ~= 0
        u(filter) = 2*rand(n, 1)-1;
        v(filter) = 2*rand(n, 1)-1;
        r(filter) = u(filter).*u(filter) + v(filter).*v(filter);
        filter = r==0 | r>=1;
        n = size(r(filter),1);
    end
end
```

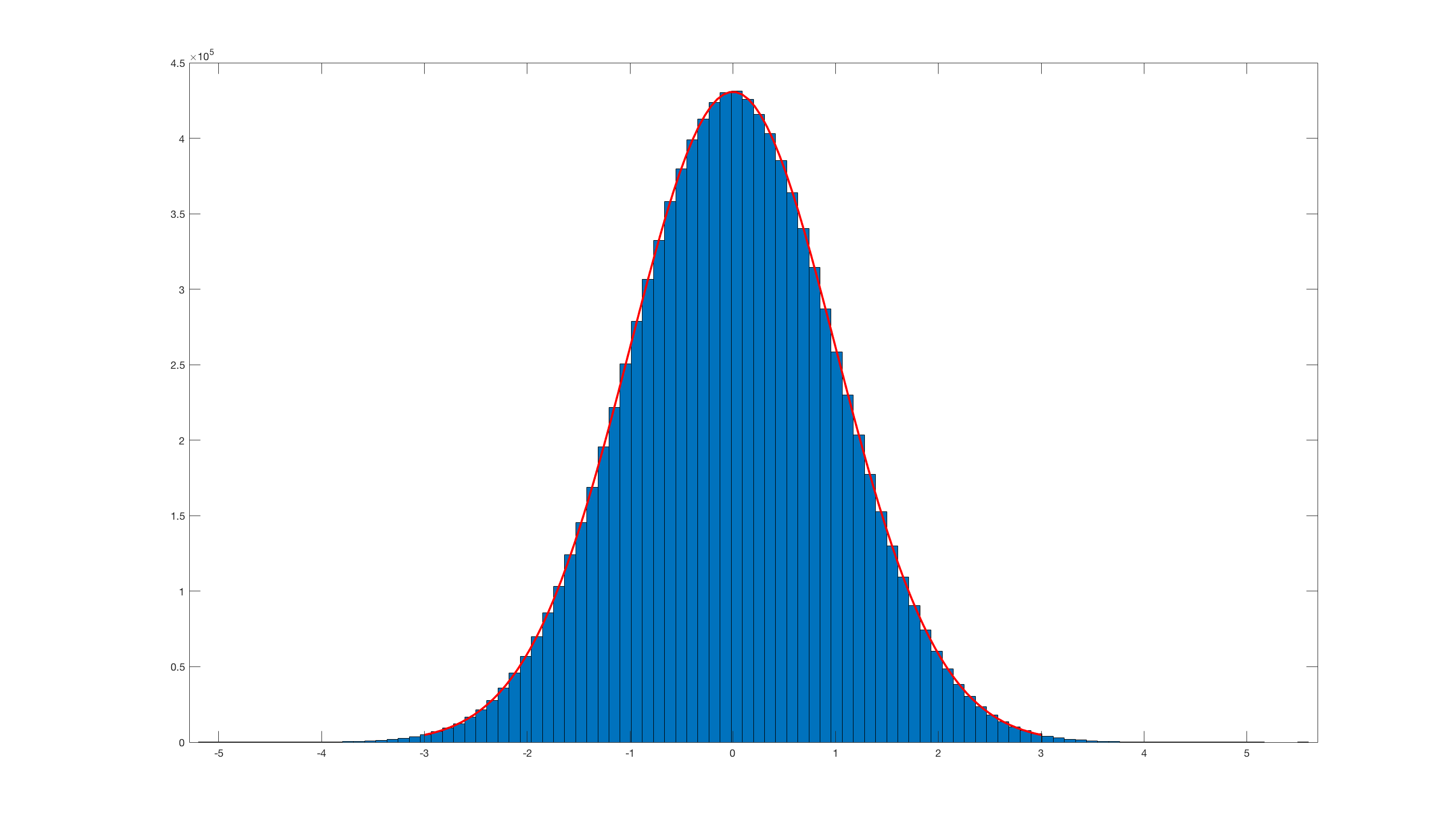

And invoking histfit(randn_box_muller(10000000),100); this is the result:

Obviously it is really inefficient compared with the Matlab built-in randn.

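The following code may help. It uses acceptance-rejection with an exponential proposal:

```r
set.seed(123)
n <- 1000
u <- runif(n)                                # U ~ Uniform(0, 1)
x <- -log(u)                                 # X = -log(U) ~ Exponential(1), the proposal
y <- runif(n, max=u*sqrt((2*exp(1))/pi))     # Y ~ Uniform(0, c*exp(-X)), c = sqrt(2e/pi)
# accept X when Y falls under dnorm(X); the lower and upper halves of
# that band pick a negative or positive sign
z <- ifelse (y < dnorm(x)/2, -x, NA)
z <- ifelse ((y > dnorm(x)/2) & (y < dnorm(x)), x, z)
z <- z[!is.na(z)]                            # keep accepted draws only
```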

It is also easier to use the built-in function rnorm(), since it is faster than writing your own random number generator for the normal distribution. The following code times rnorm() for the same number of draws:

```r
n <- length(z)
t0 <- Sys.time()
z <- rnorm(n)
t1 <- Sys.time()
t1-t0
```

My computer, a WP-34s, is very old, slow, and has limited memory. The following two-instruction sequence works well to generate a normal deviate from a distribution of mean 0 and variance 1, and lifts the stack only one level, but is incredibly slow:

```
RAND#
Phi-1
```

I suspect that the reason it is slow is that Phi-1 is actually solving Phi by an iterative process, as mentioned in a previous answer. Possibly this was because the machine designers were running out of memory.

One of the great mysteries of this simple method is that its execution time is very irregular, possibly depending on the value generated by the RAND# function. But it is very memory-frugal, requiring no pre-stored constants or use of other registers.

For greater speed, at the expense of another 16 program memory steps:

```
RAND# RAND#
RAND# RAND# c-
RAND# RAND# c+
RAND# RAND# c-
RAND# RAND# c+
RAND# RAND# c- +
```

Note the use of complex addition and subtraction to save a few steps. Also, by alternating addition and subtraction, there is no need to subtract a constant at the end. The mean will be zero, and with 12 uniform randoms, the variance will be 1. No memory other than the four-level stack is required. It is hundreds of times faster than the inverse probability transform method above, but it fails at the tails, as Box-Muller has been reported to do.

If there is plenty of program memory, I would suggest a rational function approximation to the central part of the inverse CDF, and a recursive solution for the outer 1% tails. Do the slow thing only 1% of the time.

*** Note: the WP-34s is no longer commonly available, but you can download a free emulator for both iPhone and Windows. The iPhone emulator is typically 100 times faster than the original machine that was flashed into a repurposed HP-30 Business Analyst calculator.

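```javascript
// Generic rejection sampling. density: the target density function;
// [lo, hi): the distribution's domain; maxDensity: an upper bound on density there.
function distRandom(density, lo, hi, maxDensity) {
  let x;
  do {
    x = lo + (hi - lo) * Math.random();               // uniform point in the domain
  } while (maxDensity * Math.random() >= density(x)); // uniform height in the range; retry if above the curve
  return x;
}
```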
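A usage sketch of this rejection idea for the normal distribution, assuming that truncating it to ±6 standard deviations is acceptable. The standard normal density peaks at 1/sqrt(2π), which serves as the bound for the range. The code is self-contained, and the names `normalPdf`, `normalByRejection`, `LIMIT` and `PEAK` are hypothetical.

```javascript
// Rejection sampling specialised to the normal distribution, truncated to +/- 6 SD.
const LIMIT = 6;                          // assumed truncation point, in standard deviations
const PEAK = 1 / Math.sqrt(2 * Math.PI);  // maximum of the standard normal density

function normalPdf(z) {
  return Math.exp(-0.5 * z * z) / Math.sqrt(2 * Math.PI);
}

function normalByRejection(mean, stdDev) {
  let z;
  do {
    z = -LIMIT + 2 * LIMIT * Math.random();          // candidate from the domain [-6, 6)
  } while (PEAK * Math.random() >= normalPdf(z));    // reject points above the density curve
  return mean + stdDev * z;
}
```

The bounding box has area 12/sqrt(2π) ≈ 4.8 against a density area of about 1, so only about one candidate in five is accepted. That is why this generic approach is usually slower than the dedicated methods above.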
