I've got a site that displays data from a game server. The game has different "domains" (which are actually just separate servers) that the users play on.

Right now, I have 14 cron jobs, each starting at a different point in the hour, running every 6 hours. All 14 files are essentially the same, and each takes around 75 minutes (an hour and 15 minutes) to complete its run.

I thought about using a single file run from cron that loops through each server, but that one file would then run for 18 hours or so (14 × 75 minutes = 17.5 hours). My current VPS only allows 1 vCPU, so I'm trying to get this done while staying within my allotted server load.

Since the site needs updated data available every 6 hours, this isn't doable.
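The arithmetic behind the 18-hour figure, and what it implies for the 6-hour window, can be sketched as below. It assumes (not measured) that each domain update is CPU-bound and keeps one vCPU busy for its full 75 minutes; if most of that time is spent waiting on the game servers, the runs can overlap even on a single vCPU and these numbers are too pessimistic. `wallClockMinutes()` and `minSlotsForDeadline()` are hypothetical helper names.

```php
<?php
// Rough wall-clock estimate for the update schedule.
// Assumption: every update is CPU-bound and occupies one slot (vCPU) for its
// whole run, so at most $slots updates make progress at the same time.
function wallClockMinutes(int $jobs, int $minutesPerJob, int $slots): int
{
    // Jobs run in "waves" of $slots; each wave lasts one job's duration.
    return (int) ceil($jobs / $slots) * $minutesPerJob;
}

// Smallest number of parallel slots that finishes all jobs within $deadline.
function minSlotsForDeadline(int $jobs, int $minutesPerJob, int $deadline): ?int
{
    for ($slots = 1; $slots <= $jobs; $slots++) {
        if (wallClockMinutes($jobs, $minutesPerJob, $slots) <= $deadline) {
            return $slots;
        }
    }
    return null; // not achievable even with one slot per job
}

echo wallClockMinutes(14, 75, 1), PHP_EOL;         // 1050 minutes (17.5 hours)
echo wallClockMinutes(14, 75, 2), PHP_EOL;         // 525 minutes (8.75 hours)
echo minSlotsForDeadline(14, 75, 6 * 60), PHP_EOL; // 4
```

Under that assumption, four parallel slots is the smallest number that fits fourteen 75-minute runs into 360 minutes (four waves, 300 minutes).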

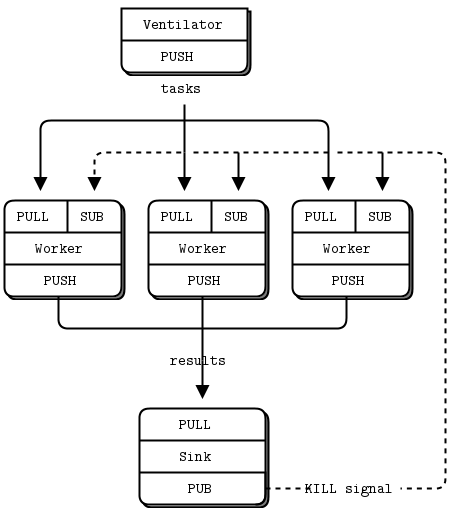

I started looking into message queues, passing some information to a background process that performs the work. I first tried Resque with php-resque, but my background worker died as soon as it started, so I moved on to ZeroMQ, which seems closer to what I need anyway.

I've set up ZMQ via Composer, and the installation went fine. In my worker script (which cron will call every 6 hours), I have:

```php
// Push each user-submitted file path to whatever is connected on port 50557
$dataContext = new ZMQContext();
$dataDispatch = new ZMQSocket($dataContext, ZMQ::SOCKET_PUSH);
$dataDispatch->bind("tcp://*:50557");
$dataDispatch->send(0);

foreach ($filesToUse as $filePath) {
    $dataDispatch->send($filePath);
    sleep(1);
}

// Pick one random .json file from each subdirectory of mapBlocks/
$filesToUse = array();
$blockDirs = array_filter(glob('mapBlocks/*'), 'is_dir');

foreach ($blockDirs as $k => $blockDir) {
    $files = glob($rootPath.$blockDir.'/*.json');
    $key = array_rand($files);
    $filesToUse[] = $files[$key];
}

// Push each selected map-block path on port 50558
$mapContext = new ZMQContext();
$mapDispatch = new ZMQSocket($mapContext, ZMQ::SOCKET_PUSH);
$mapDispatch->bind("tcp://*:50558");
$mapDispatch->send(0);

foreach ($filesToUse as $blockPath) {
    $mapDispatch->send($blockPath);
    sleep(1);
}
```

`$filesToUse` is an array of user-submitted files containing information used to query the game server. As you can see, I loop through the array and send each file to the ZeroMQ listener file, which contains:

```php
$startTime = time();

$context = new ZMQContext();

// Pull work units pushed on port 50557
$receiver = new ZMQSocket($context, ZMQ::SOCKET_PULL);
$receiver->connect("tcp://*:50557");

// Push a status message to the sink on port 50559 after each unit
$sender = new ZMQSocket($context, ZMQ::SOCKET_PUSH);
$sender->connect("tcp://*:50559");

while (true) {
    $file = $receiver->recv();

    // -------------------------------------------------- do all work here
    // ... ~75 minutes of data processing for each received work unit
    // ----------------------------------------------------------------------

    // Seconds since this listener started ($startTime is set once, before the loop)
    $endTime = time();
    $totalTime = $endTime - $startTime;

    $sender->send('Processing of domain '.listener::$domain.' completed on '.date('M-j-y', $endTime).' in '.$totalTime.' seconds.');
}
```

Then, in the final listener file:

```php
$context = new ZMQContext();
$receiver = new ZMQSocket($context, ZMQ::SOCKET_PULL);
$receiver->bind("tcp://*:50559");

// Append each received status message to a log file named after the current minute
while (true) {
    $log = fopen($rootPath.'logs/sink_'.date('F-jS-Y_h-i-A').'.txt', 'a');
    fwrite($log, $receiver->recv());
    fclose($log);
}
```

When the worker script is run from cron, I get no confirmation text in my log.

Q1) Is this the most efficient way to do what I'm trying to do?

Q2) Am I using or implementing ZeroMQ incorrectly here?

It also seems that using cron to start 14 files at the same time pushes the load far beyond my allotment. I know I could probably spread the jobs out at different times throughout the day, but if at all possible, I would like to keep all updates on the same schedule.
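One way to keep every update on the same schedule without letting all 14 compete for the CPU at once is a single cron entry that starts the updates through a small process pool capped at N concurrent runs. This is a swapped-in technique (plain `proc_open()`, not ZeroMQ) and a sketch under assumptions: each domain update can be launched as its own shell command, and `runBounded()` and `$maxParallel` are hypothetical names.

```php
<?php
// Run shell commands with at most $maxParallel of them alive at once.
// Returns each command's exit code (by position) and the peak concurrency.
function runBounded(array $commands, int $maxParallel): array
{
    $queue = array_values($commands); // commands in start order
    $running = [];                    // command index => process handle
    $exitCodes = [];                  // command index => exit code
    $peak = 0;                        // most processes alive at once
    $next = 0;                        // index of the next command to start

    while ($next < count($queue) || $running) {
        // Fill any free slots.
        while ($next < count($queue) && count($running) < $maxParallel) {
            $proc = proc_open($queue[$next], [], $pipes);
            if ($proc === false) {
                throw new RuntimeException("Could not start: {$queue[$next]}");
            }
            $running[$next] = $proc;
            $next++;
        }
        $peak = max($peak, count($running));

        // Reap finished processes. proc_get_status() reports the real exit
        // code on the first call after the process has exited.
        foreach ($running as $i => $proc) {
            $status = proc_get_status($proc);
            if (!$status['running']) {
                $exitCodes[$i] = $status['exitcode'];
                proc_close($proc);
                unset($running[$i]);
            }
        }
        usleep(100000); // poll every 0.1 s instead of busy-waiting
    }

    ksort($exitCodes);
    return ['exitCodes' => $exitCodes, 'peak' => $peak];
}
```

From cron, this would be called once every 6 hours with one command per domain (for example `php update.php <domain>`, where `update.php` is a hypothetical script name) and `$maxParallel` set to the number of vCPUs, so all updates start from the same schedule but never more than N run concurrently.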

UPDATE:

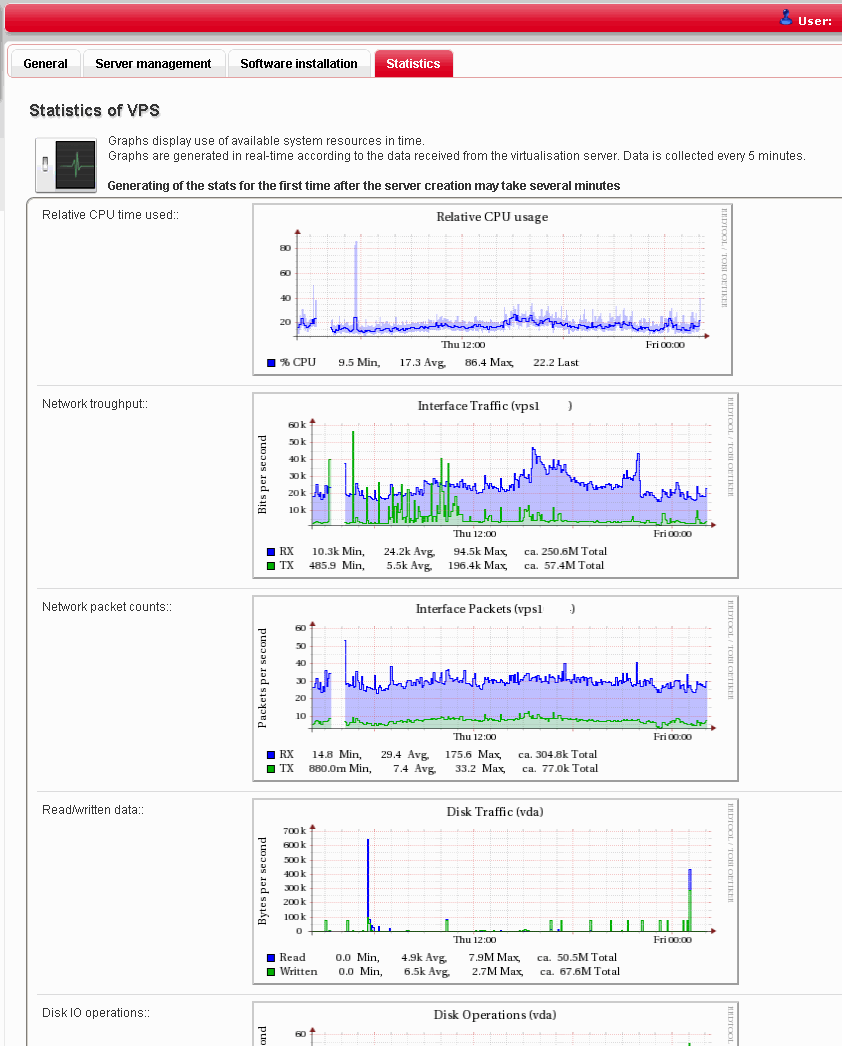

I have since upgraded my VPS to 2 CPU cores, so the load aspect of the question is no longer very relevant.

The code above has also been updated to reflect the current setup.

Since the code update, cron now emails me this error:

```
Fatal error: Uncaught exception 'ZMQSocketException' with message 'Failed to bind the ZMQ: Address already in use'
```
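A possible cause, offered as an assumption rather than a diagnosis: the worker script binds fixed ports (50557 and 50558) every time cron starts it, and a bind fails while another process still holds the port, for example an earlier run of the same script that never exited. In the code shown, nothing connects to port 50558, and a ZeroMQ PUSH socket's blocking `send()` waits while it has no connected peer, so such an earlier run could still be alive. The sketch below reproduces only the underlying operating-system behaviour with PHP's built-in stream sockets; it does not use ZeroMQ.

```php
<?php
// Illustration with PHP stream sockets (not ZeroMQ): while one socket is bound
// and listening on a TCP port, a second bind to the same address:port fails
// with "Address already in use". Port 0 lets the OS pick a free port, so this
// sketch does not touch ports 50557-50559.
$first = stream_socket_server('tcp://127.0.0.1:0', $errno, $errstr);
if ($first === false) {
    throw new RuntimeException("First bind failed: $errstr");
}
$boundTo = stream_socket_get_name($first, false); // "127.0.0.1:<port>"

// Second bind to the same address while $first still holds it.
// The @ suppresses PHP's warning; the error is reported below instead.
$second = @stream_socket_server("tcp://$boundTo", $errno, $errstr);
if ($second === false) {
    echo "Second bind failed ($errno): $errstr", PHP_EOL;
} else {
    echo "Second bind unexpectedly succeeded", PHP_EOL;
    fclose($second);
}

fclose($first); // release the port so a later bind can succeed
```

If this is what is happening, running `ss -ltnp` (or `netstat -ltnp`) on the VPS when the error appears should show which process is still listening on the port.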

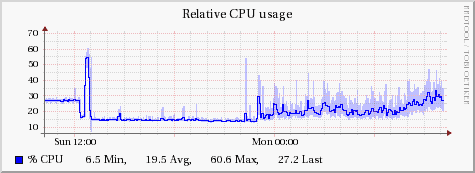

a) What is your quantitative metric for comparing { a more | a less | the most } efficient way to do something (a minimum scope of refactoring? a minimum software design cost? the shortest time to RTO? minimum externally spent expenses?) b) What is your vCPU utilisation graph (collect graphs from the VPS management console for each of your vCPU vCores; post this and all the following as an update here, right)? c) What is your vHDD utilisation graph? d) What is your vLAN utilisation graph? e) How many threads can your VPS run (vCPU/HT)? – Barragan

… `.connect()` to any localhost URL-exposed port #50558? Could you also update the figures / post 24/7 graphs (this still makes good sense for the very week in which you had both the 1 vCPU and 2 vCPU modus operandi) / raw print-screens with details about resource utilisation patterns? Thanks for kindly reconsidering the importance of quantitative facts for your MCVE issue. – Barragan