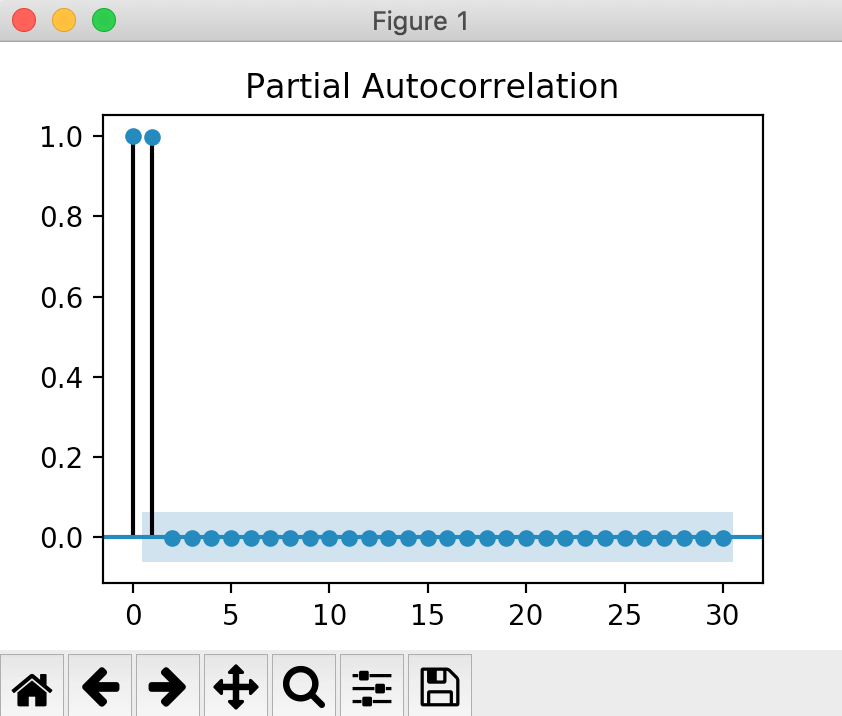

I have a time series that appears to have a significant lag when I look at its partial autocorrelation function (PACF) plot, i.e. the PACF value at that lag lies outside the blue confidence band. I wanted to verify this programmatically, but it doesn't seem to work.

I plotted the PACF with the statsmodels time series API, and the plot showed that the first lag was significant. I then used the PACF estimation function to get the PACF values along with the confidence interval at each lag, but the confidence intervals from the two don't match. Even more odd, the plotting function in the source code calls the underlying estimation function, so the two should agree.
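For reference, here is a minimal sketch of the kind of programmatic check in question. It assumes the usual large-sample normal approximation in which each PACF estimate at lag k >= 1 has variance 1/n, and the function name `pacf_lag_is_significant` is hypothetical (it is not part of statsmodels). Under that assumption, asking whether an estimate lies outside a band of ±z/√n centred on zero is equivalent to asking whether the interval `estimate ± z/√n` excludes zero.

```python
from statistics import NormalDist
import math


def pacf_lag_is_significant(value: float, nobs: int, alpha: float = 0.05) -> bool:
    """Hypothetical helper: True if a PACF estimate lies outside the zero-centred band.

    Assumes Var(pacf_k) is approximately 1/nobs for every lag k >= 1.
    """
    z = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    half_width = z / math.sqrt(nobs)         # about 0.062 for nobs = 1000
    return abs(value) > half_width


# Lag-1 and lag-2 estimates reported in this question, with n = 1000
print(pacf_lag_is_significant(0.997998, 1000))     # True
print(pacf_lag_is_significant(-0.00200201, 1000))  # False
```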

Example:

```python
import numpy as np
import matplotlib.pyplot as plt
import statsmodels.api as sm

x = np.arange(1000)

sm.graphics.tsa.plot_pacf(x)
plt.show()
```

This shows that the first lag is highly significant, with a PACF value close to 1, while the confidence interval (the blue shaded band) stays at roughly (-0.06, 0.06) across the whole plot.
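As a quick sanity check on that band width, assuming it is the standard large-sample band of ±z/√n centred on zero (with n = 1000, the length of `x`, and z the 97.5% normal quantile for a 95% interval):

$$
\pm\frac{z_{0.975}}{\sqrt{n}} = \pm\frac{1.96}{\sqrt{1000}} \approx \pm 0.062
$$

which is consistent with the roughly (-0.06, 0.06) band seen in the plot.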

Alternatively, when I try to get the exact plotted values (only the first 10 lags, for brevity):

```python
sm.tsa.stattools.pacf(x, nlags=10, alpha=0.05)
```

The resulting PACF values, which match the plot above, are:

```
array([ 1.        ,  0.997998  , -0.00200201, -0.00200402, -0.00200605,
       -0.0020081 , -0.00201015, -0.00201222, -0.0020143 , -0.00201639,
       -0.00201849])
```

However, the confidence interval, which should correspond to the blue band in the plot above, looks off for the first lag:

```
array([[ 1.        ,  1.        ],
       [ 0.93601849,  1.0599775 ],
       [-0.06398151,  0.0599775 ],
       [-0.06398353,  0.05997548],
       [-0.06398556,  0.05997345],
       [-0.0639876 ,  0.05997141],
       [-0.06398965,  0.05996935],
       [-0.06399172,  0.05996729],
       [-0.0639938 ,  0.05996521],
       [-0.06399589,  0.05996312],
       [-0.06399799,  0.05996101]])
```

What's going on?

API reference: