I am currently trying to use Redmine with a PostgreSQL database, but I'm running into some issues when setting up the environment.

Let's say I have the following compose file:

    services:
      db-redmine:
        image: 'bitnami/postgresql:11'
        user: root
        container_name: 'orchestrator_redmine_pg'
        environment:
          - POSTGRESQL_USERNAME=${POSTGRES_USER}
          - POSTGRESQL_PASSWORD=${POSTGRES_PASSWORD}
          - POSTGRESQL_DATABASE=${POSTGRES_DB}
          - BITNAMI_DEBUG=true
        volumes:
          - 'postgresql_data:/bitnami/postgresql'
      redmine:
        image: 'bitnami/redmine:4'
        container_name: 'orchestrator_redmine'
        ports:
          - '3000:3000'
        environment:
          - REDMINE_DB_POSTGRES=orchestrator_redmine_pg
          - REDMINE_DB_USERNAME=${POSTGRES_USER}
          - REDMINE_DB_PASSWORD=${POSTGRES_PASSWORD}
          - REDMINE_DB_NAME=${POSTGRES_DB}
          - REDMINE_USERNAME=${REDMINE_USERNAME}
          - REDMINE_PASSWORD=${REDMINE_PASSWORD}
        volumes:
          - 'redmine_data:/bitnami'
        depends_on:
          - db-redmine
    volumes:
      postgresql_data:
        driver: local
      redmine_data:
        driver: local

This generates the PostgreSQL database for Redmine and creates the Redmine instance.

Once the containers are up, I enter the Redmine instance and configure the application: creating custom fields, adding trackers, issue types, etc. Quite a lot of setup is required, so I don't want to do this every time I deploy these containers.

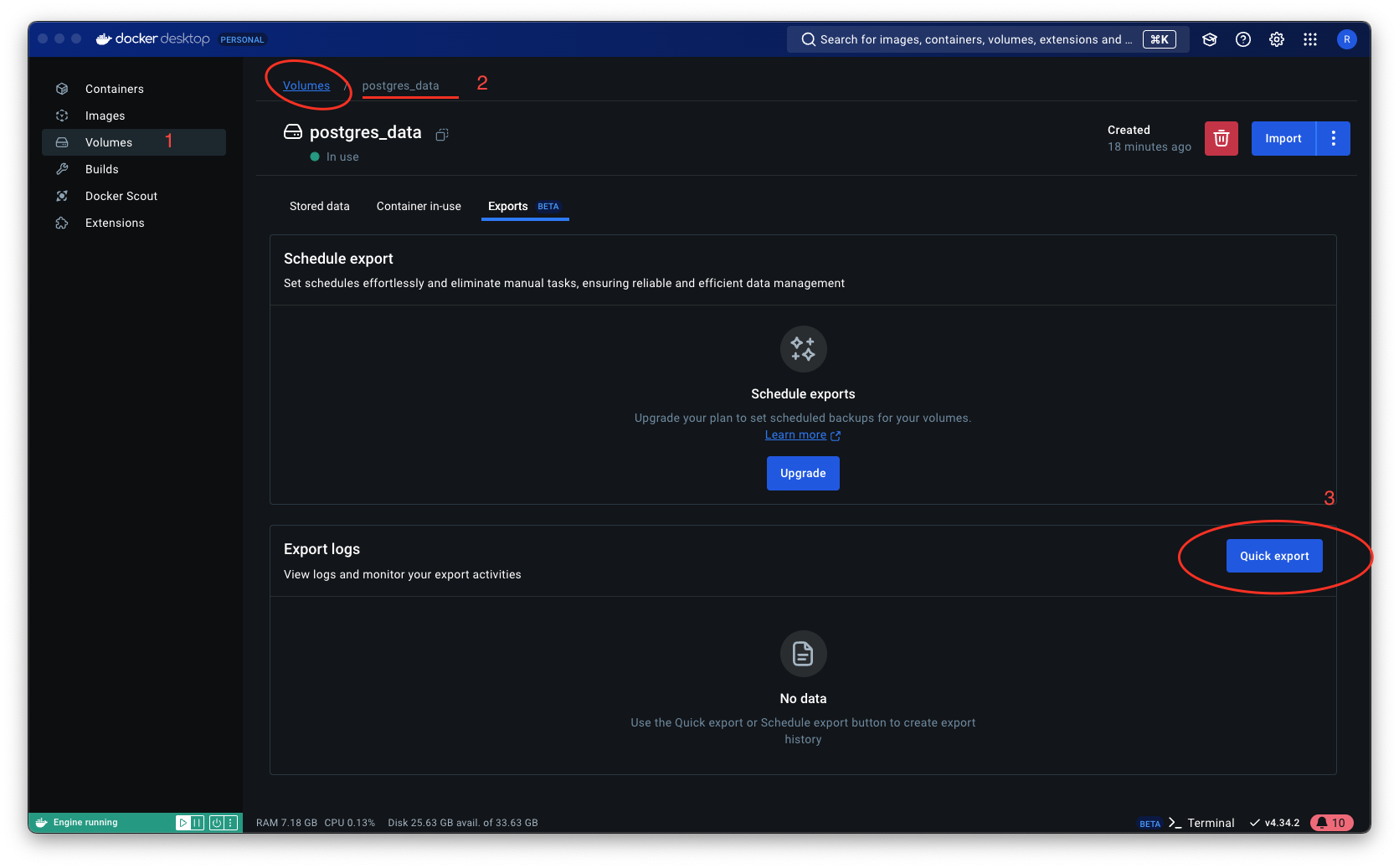

I figured that, because all of the setup data goes to the volumes, I could export those volumes and then import them on a new machine. This way, when both apps start on the new machine, they will have all the necessary information from the previous setup.

This sounded simple enough, but I'm struggling with the export-then-import phase.

From what I have seen, I am able to export `postgresql_data` to a .tar file by doing the following:

    docker export postgresql_data --output="postgres_data.tar"
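
For reference, `docker export` takes a container name or ID rather than a volume name, and according to the Docker documentation it does not include the contents of volumes attached to that container. A commonly used alternative is to mount the volume into a throwaway container and archive it with `tar`. The following is only a minimal sketch of that idea: `backup_volume` is a hypothetical helper name, `busybox` is an assumed helper image, and the containers are assumed to be stopped so the PostgreSQL files are not changing while they are archived.

```shell
#!/bin/sh
# Sketch: archive the contents of a named Docker volume into a tar file.
# backup_volume is a hypothetical helper; busybox is an assumed helper image.
backup_volume() {
    volume="$1"            # name of the volume, e.g. postgresql_data
    archive="$2"           # file name of the archive, e.g. postgres_data.tar
    out_dir="${3:-$PWD}"   # absolute host directory that receives the archive

    # Mount the volume read-only at /volume and the host directory at /backup,
    # then tar everything inside the volume into /backup/<archive>.
    docker run --rm \
        -v "${volume}:/volume:ro" \
        -v "${out_dir}:/backup" \
        busybox tar cf "/backup/${archive}" -C /volume .
}
```

It could be called as `backup_volume postgresql_data postgres_data.tar`. Note that Compose normally prefixes volume names with the project name, so `docker volume ls` is worth checking for the actual name first.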

But how can I import the newly generated .tar file on a new machine? If I'm not mistaken, importing the .tar file into a volume called `postgresql_data` on the new machine means the data from the template will be used when the new container is created.

Is there a way to do this? Is this the correct way of duplicating a setup between two hosts?

Is doing something like `docker volume create postgresql_data` and then copying the files to the volume directory the way to go?
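
To make the idea concrete, here is a rough sketch of what "create the volume, then copy the files in" could look like without touching Docker's internal volume directory directly. It assumes a plain tar archive of the volume contents already exists on the new host; `restore_volume` is a hypothetical helper name and `busybox` is an assumed helper image. This is one possible approach, not a confirmed answer.

```shell
#!/bin/sh
# Sketch: create a named volume and unpack a tar archive into it.
# restore_volume is a hypothetical helper; busybox is an assumed helper image.
restore_volume() {
    volume="$1"           # name of the volume to fill, e.g. postgresql_data
    archive="$2"          # file name of the archive, e.g. postgres_data.tar
    in_dir="${3:-$PWD}"   # absolute host directory that contains the archive

    # Create the volume first (a no-op if it already exists), then extract
    # the archive into it from a throwaway container.
    docker volume create "$volume" >/dev/null &&
    docker run --rm \
        -v "${volume}:/volume" \
        -v "${in_dir}:/backup:ro" \
        busybox tar xf "/backup/${archive}" -C /volume
}
```

A call such as `restore_volume postgresql_data postgres_data.tar` would need to run before `docker-compose up`, and the volume name has to match the one Compose expects (including any project-name prefix).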

Would `command: pg_restore -d "dbname" /dump-files/dump` work as a way of doing it automatically? Nevertheless, I will probably take this approach even if it needs to be done manually. – Allethrin
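
For the manual variant mentioned in the comment, a dump and restore could be sketched roughly as below. The helper names `dump_db` and `restore_db` are hypothetical, the container name and the `POSTGRES_*` variables are taken from the compose file above and are assumed to be set in the calling shell, and the chosen flags (`-Fc`, `--clean`, `--if-exists`) are one reasonable choice rather than the only one. This covers only the database; files stored in the `redmine_data` volume would still need to be moved separately.

```shell
#!/bin/sh
# Sketch: dump the Redmine database from one container and restore it in another.
# dump_db and restore_db are hypothetical helpers; POSTGRES_USER,
# POSTGRES_PASSWORD and POSTGRES_DB are assumed to be set in the environment.
dump_db() {
    container="$1"   # e.g. orchestrator_redmine_pg
    outfile="$2"     # host path for the dump, e.g. redmine.dump

    # -Fc writes PostgreSQL's custom archive format, which pg_restore can read.
    docker exec -e PGPASSWORD="$POSTGRES_PASSWORD" "$container" \
        pg_dump -U "$POSTGRES_USER" -Fc "$POSTGRES_DB" > "$outfile"
}

restore_db() {
    container="$1"   # e.g. orchestrator_redmine_pg on the new host
    infile="$2"      # host path of the dump created by dump_db

    # pg_restore reads the archive from stdin when no file name is given;
    # --clean --if-exists drops existing objects before recreating them.
    docker exec -i -e PGPASSWORD="$POSTGRES_PASSWORD" "$container" \
        pg_restore -U "$POSTGRES_USER" -d "$POSTGRES_DB" --clean --if-exists < "$infile"
}
```

On the old host this might be run as `dump_db orchestrator_redmine_pg redmine.dump`, and on the new host, once the database container is up, as `restore_db orchestrator_redmine_pg redmine.dump`.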