What is the best way to detect the corners of an invoice/receipt/sheet-of-paper in a photo? This is to be used for subsequent perspective correction, before OCR.

My current approach has been:

RGB > Gray > Canny Edge Detection with thresholding > Dilate(1) > Remove small objects(6) > clear boarder objects > pick larges blog based on Convex Area. > [corner detection - Not implemented]

I can't help but think there must be a more robust 'intelligent'/statistical approach to handle this type of segmentation. I don't have a lot of training examples, but I could probably get 100 images together.

Broader context:

I'm using matlab to prototype, and planning to implement the system in OpenCV and Tesserect-OCR. This is the first of a number of image processing problems I need to solve for this specific application. So I'm looking to roll my own solution and re-familiarize myself with image processing algorithms.

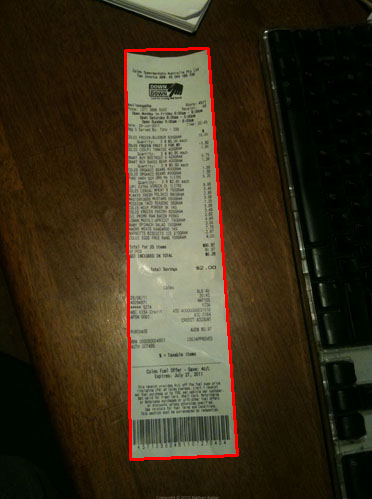

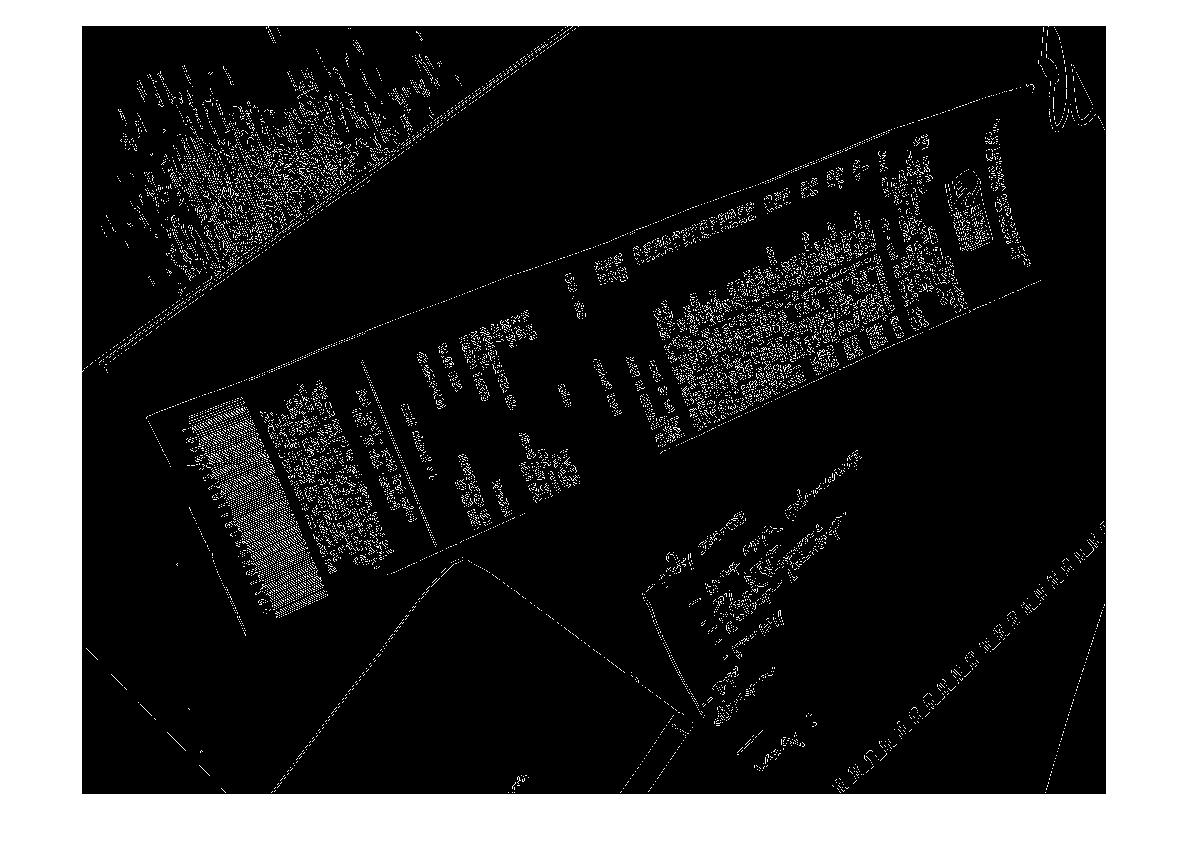

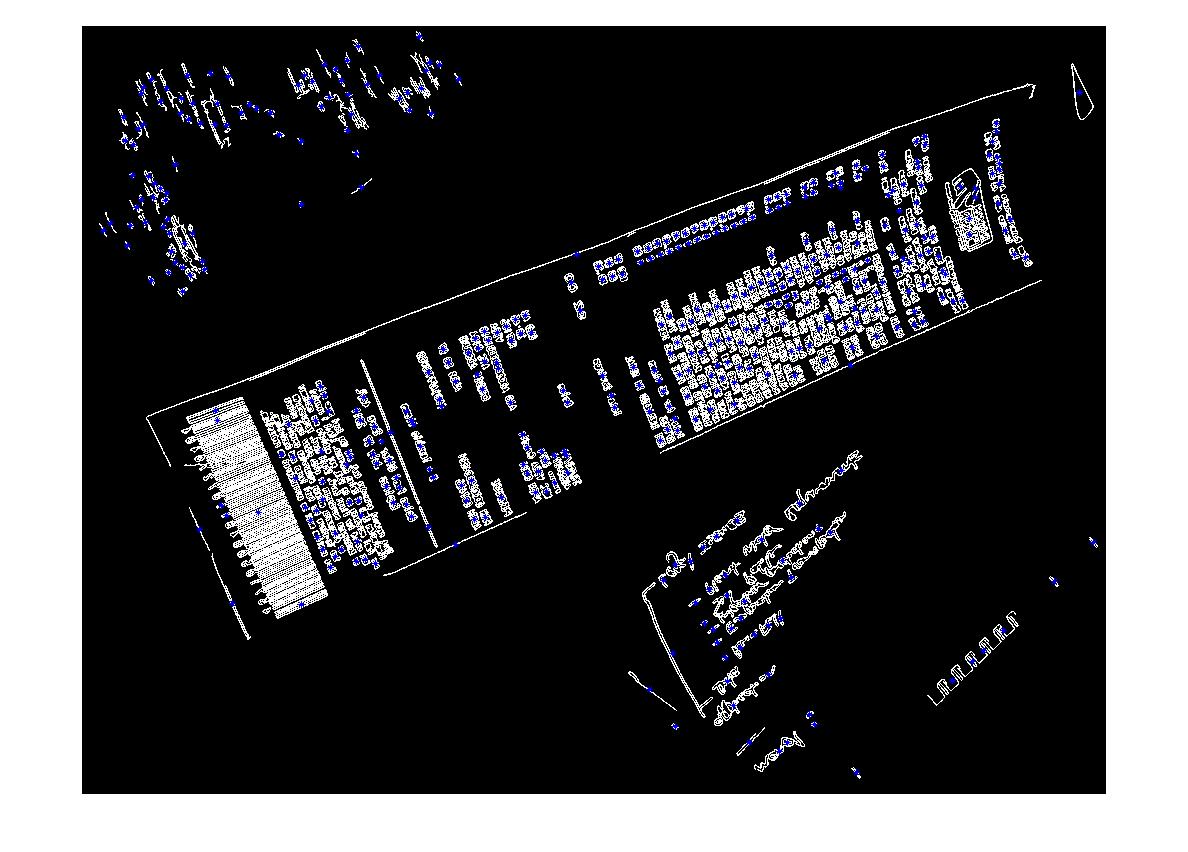

Here are some sample image that I'd like the algorithm to handle: If you'd like to take up the challenge the large images are at http://madteckhead.com/tmp

(source: madteckhead.com)

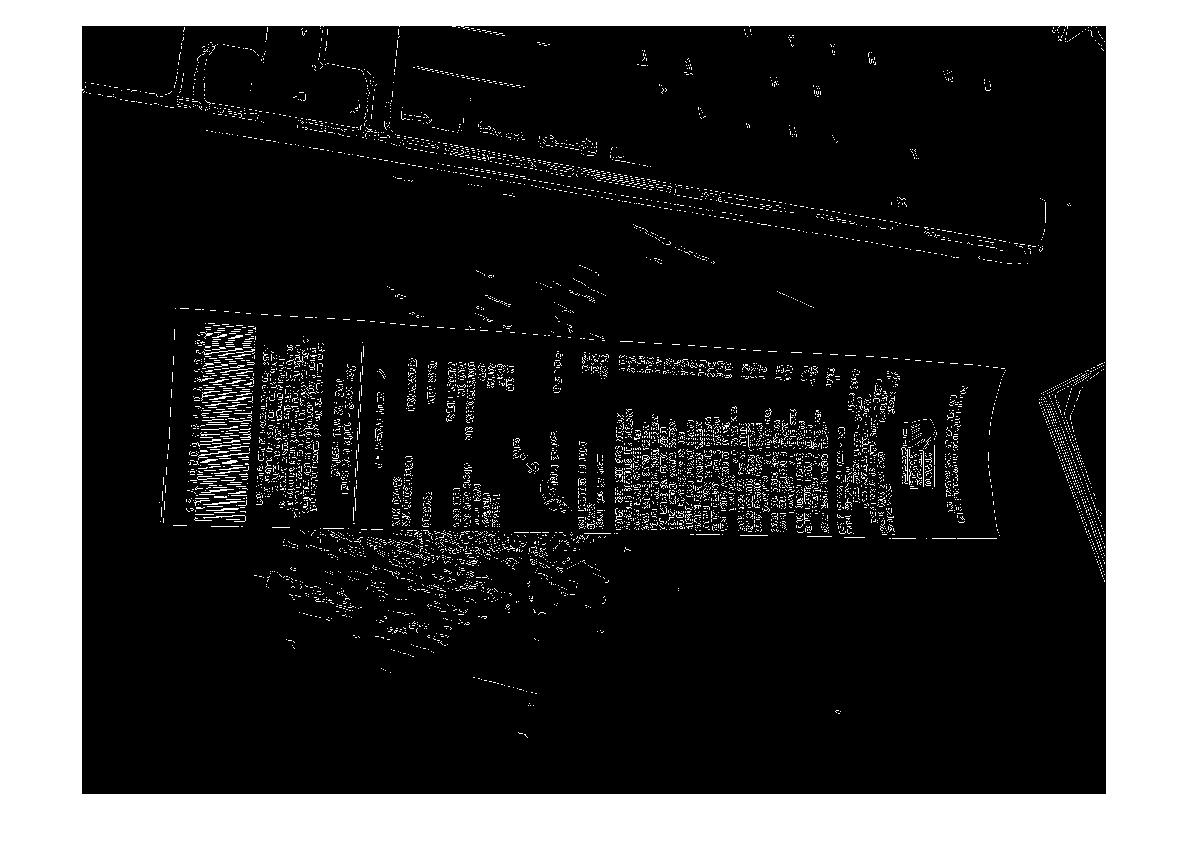

(source: madteckhead.com)

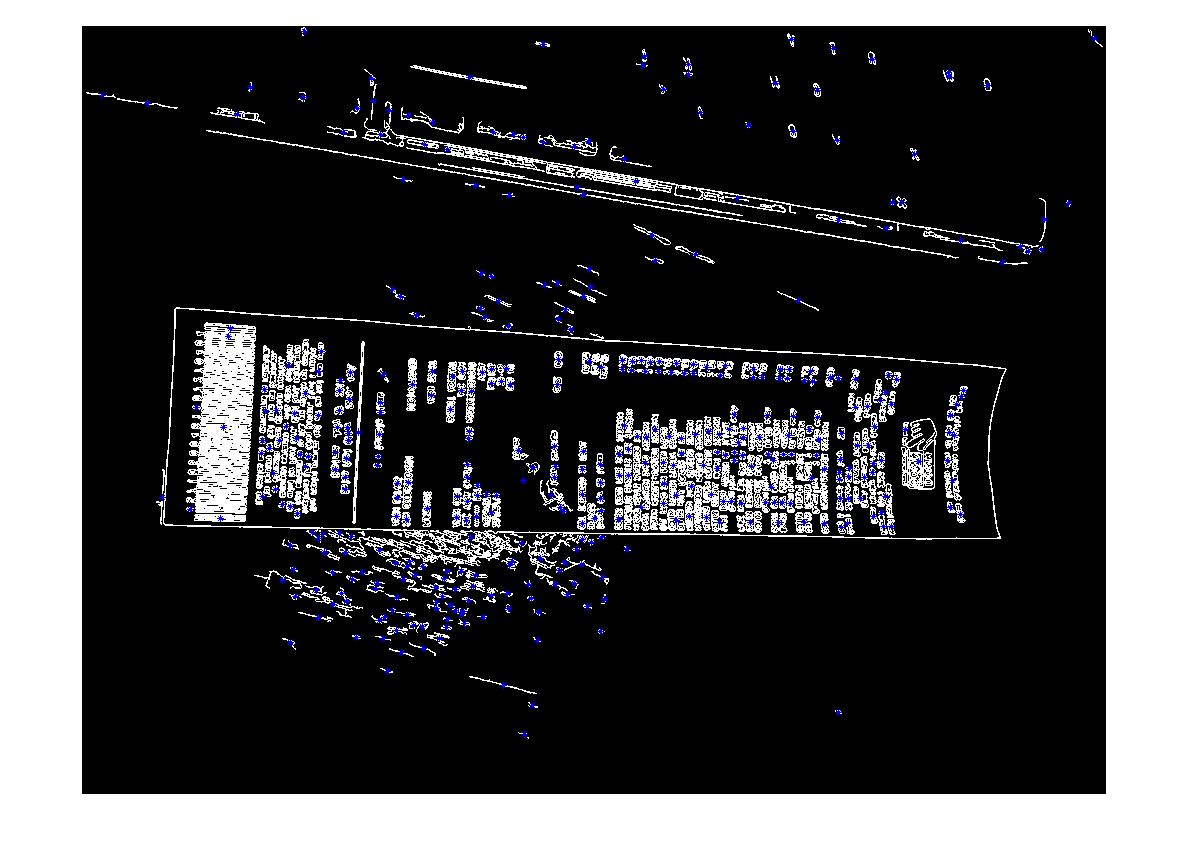

(source: madteckhead.com)

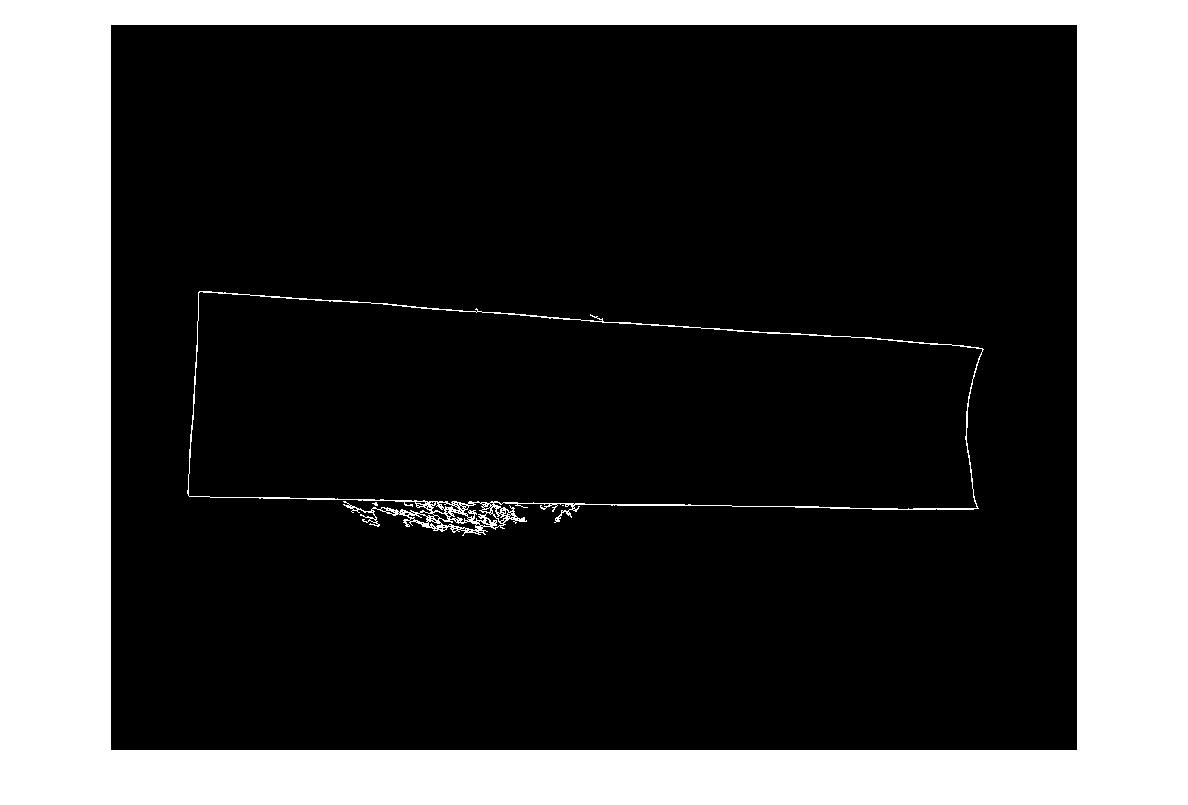

(source: madteckhead.com)

In the best case this gives:

(source: madteckhead.com)

(source: madteckhead.com)

(source: madteckhead.com)

However it fails easily on other cases:

(source: madteckhead.com)

(source: madteckhead.com)

(source: madteckhead.com)

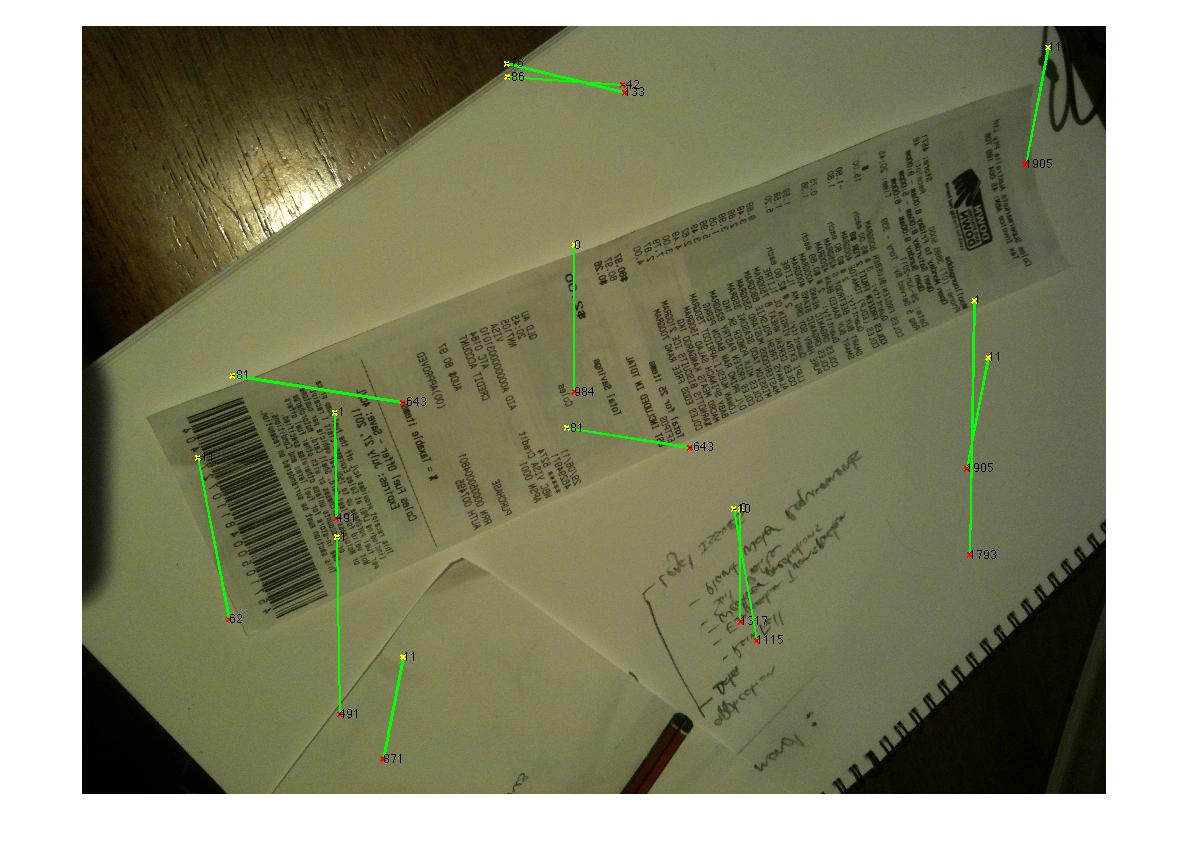

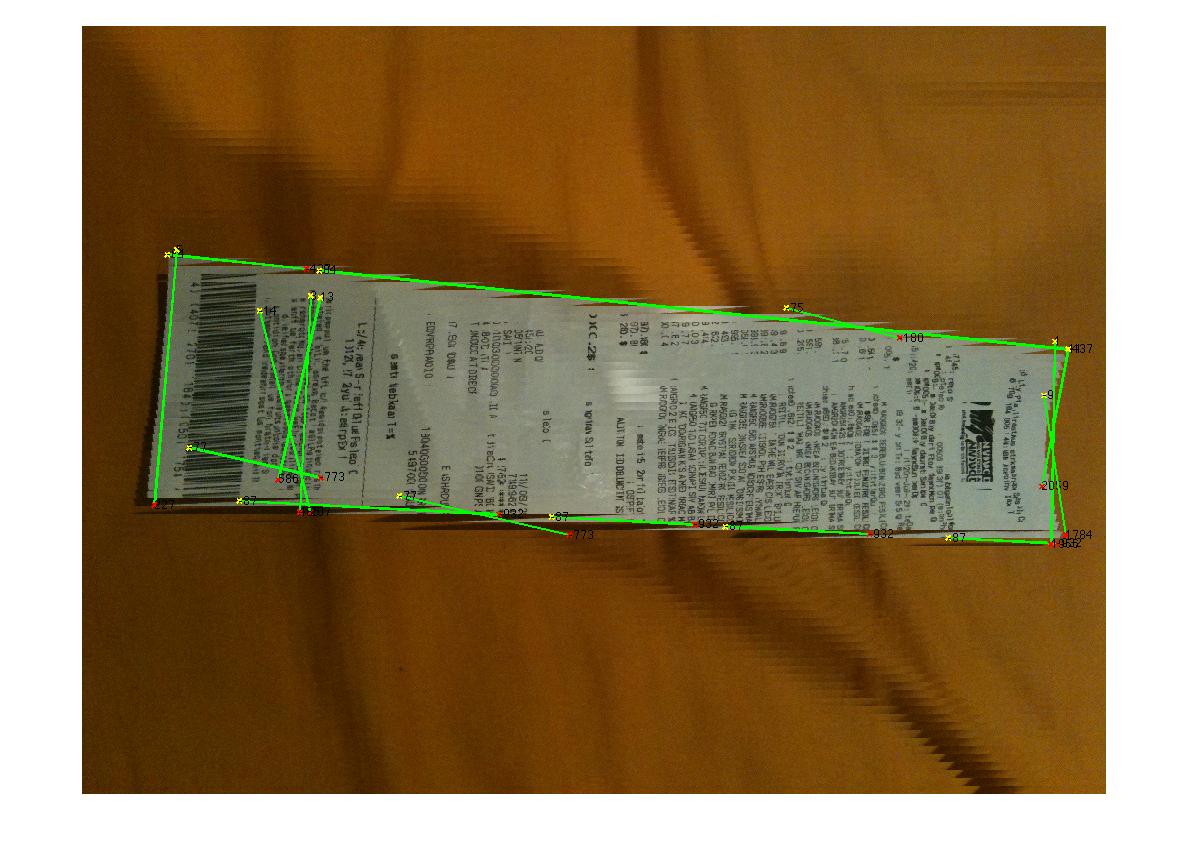

EDIT: Hough Transform Progress

Q: What algorithm would cluster the hough lines to find corners? Following advice from answers I was able to use the Hough Transform, pick lines, and filter them. My current approach is rather crude. I've made the assumption the invoice will always be less than 15deg out of alignment with the image. I end up with reasonable results for lines if this is the case (see below). But am not entirely sure of a suitable algorithm to cluster the lines (or vote) to extrapolate for the corners. The Hough lines are not continuous. And in the noisy images, there can be parallel lines so some form or distance from line origin metrics are required. Any ideas?

(source: madteckhead.com)